Abstract

Decades of clinical and basic research in visual system development have shown that degraded or imbalanced visual inputs can induce a long-lasting visual impairment called amblyopia. In the auditory domain, it is well established that inducing a conductive hearing loss (CHL) in young laboratory animals is associated with a panoply of central auditory system irregularities, ranging from cellular morphology to behavior. Human auditory deprivation, in the form of otitis media (OM), is tremendously common in young children, yet the evidence linking a history of OM to long-lasting auditory processing impairments has been equivocal for decades. Here, we review the apparent discrepancies in the clinical and basic auditory literature and provide a meta-analysis to show that the evidence for human amblyaudia, the auditory analog of amblyopia, is considerably more compelling than is generally believed. We argue that a major cause for this discrepancy is the fact that most clinical studies attempt to link central auditory deficits to a history of middle ear pathology, when the primary risk factor for brain-based developmental impairments such as amblyopia and amblyaudia is whether the afferent sensory signal is degraded during critical periods of brain development. Accordingly, clinical studies that target the subset of children with a history of OM that is also accompanied by elevated hearing thresholds consistently identify perceptual and physiological deficits that can endure for years after peripheral hearing is audiometrically normal, in keeping with the animal studies on CHL. These studies suggest that infants with OM severe enough to cause degraded afferent signal transmission (e.g., CHL) are particularly at risk to develop lasting central auditory impairments. We propose some practical guidelines to identify at-risk infants and test for the positive expression of amblyaudia in older children.

An introduction to otitis media and amblyaudia

Otitis media (OM) is a common childhood illness that is characterized by purulence and/or the accumulation of excessive mucin in the middle ear space. In some instances, the mechanical properties of the middle ear system can be altered by the presence of viscous fluid in the typically air-filled tympanum. In these cases, the auditory signal transmitted to the developing central nervous system is degraded, prompting many clinicians and researchers to hypothesize that OM may represent a common form of developmental auditory deprivation. Dating back to the seminal studies of Hubel and Wiesel in the 1960s and 1970s, developmental sensory deprivation has repeatedly been shown to have specific, long-lasting, and deleterious effects on brain and behavior. For example, decades of basic and clinical research have shown that improperly aligned visual signals transmitted from each eye to the brain during critical periods of central visual system development can result in a neurological disorder known as amblyopia, or “lazy eye”. However, establishing whether OM also induces persistent changes in brain physiology and perception in humans has proven more controversial. In this meta-analysis, we compile findings from decades of OM studies and argue that the key factor in the controversy can be explained by the fact that most clinical studies only account for the presence of OM and do not take the additional critical step of determining whether the OM also imposes an appreciable conductive hearing loss (CHL). The minority of clinical studies that have positively identified the subset of children with histories of OM and CHL offer a significantly clearer and more sobering view of the connection between this common childhood hearing disorder and its enduring pathophysiological and perceptual sequelae.

The strong interest in the prevention, treatment, and neurobiological sequelae of OM is in part attributable to its high prevalence in children. In fact, an estimated 80% of children will experience one or more bouts of OM before they reach 3 years of age, making it the most common cause for physician visits and medication prescriptions among children in the USA (Freid et al. 1998; Pennie 1998). Children can experience OM in one or both ears, although bilateral OM is slightly more common (Engel et al. 1999). Unilateral and bilateral bouts of otitis media with effusion (OME) persist for an average of 1 or 2 months, respectively (Hogan et al. 1997). A subset of children (30–40%) experience recurrent OME, characterized by OME episodes of typical lengths separated by unusually brief effusion-free periods (10 weeks, in contrast to the typical 31 weeks) (Hogan et al. 1997). Because OME can occur without the painful symptoms that accompany infection, it may often go unnoticed by parents or caregivers without periodic otolaryngological and audiological assessments. Whether the diagnosis is acute OM, OME, or recurrent OME, its prevalence drops precipitously after 2 years of age and is rarely associated with signs of significant, lasting middle ear pathology (Gravel and Wallace 2000).

While the methods for diagnosing and treating OM are generally agreed upon by health care practitioners, there is less consensus concerning the urgency of treating this disease. Common procedures to treat OM include insertion of tympanostomy tubes, prescription of oral antibiotics, and adenoid removal. As all interventions carry some level of risk, particularly with young children, debate is focused on the benefits of alleviating OM versus waiting for it to resolve spontaneously. This is particularly true in the case of OME, where the presence of middle ear effusion is not necessarily accompanied by infection or discomfort. At the crux of this controversy lies the question of whether childhood OM is associated with abnormal brainstem physiology and deficits in spatial hearing as well as receptive language skills that persist for years after the middle ear pathology has resolved, a cluster of neurological sequelae we refer to here as amblyaudia (amblyos: blunt; audia: hearing). Should amblyaudia be acknowledged as a legitimate risk factor when OM is left untreated, it could shift the cost-benefit analysis towards rapid intervention. On the other hand, should the link to auditory processing disorders be convincingly disproven, the decision to intervene versus “watchful waiting” could be solely based upon the peripheral symptoms. Although over 40 years have passed since Holm and Kunze (1969) first reported deficiencies in multiple speech, language and auditory tasks in a small sample of children with a history of OM, a definitive answer regarding the connection between OM and amblyaudia has yet to be firmly established. To understand why the perceptual and pathophysiological sequelae of OM have proven so controversial, it is essential to understand the theoretical relationship between OM, hearing loss, and the maturation of auditory processing.

Although OM is physically restricted to the middle ear space, it can interfere with the transmission of acoustic signals to the inner ear and, by extension, the entire auditory system. The middle ear pathology and accumulation of excess, viscous mucin that typically accompany OM can disrupt the acoustico-mechanical properties of the middle ear system, producing a CHL (Gravel and Wallace 2000; Ravicz et al. 2004). The CHL that accompanies OM fluctuates between 0 and 40 dB HL, is relatively uniform across frequencies and is considered to be reversible in that hearing sensitivity returns to normal following resolution of OM (Kokko 1974; Gravel et al. 2006). In addition to attenuating the overall amplitude of the acoustic signal, viscous fluid in the middle ear space can also delay transmission of the transduced waveform (Hartley and Moore 2003). Differences in the timing (interaural time difference) and amplitudes (interaural level difference) of acoustic signals arriving at the two ears play an essential role in spatial hearing, particularly in the horizontal plane. To illustrate the effects of OM-mediated conductive hearing loss on spatial hearing, we can make approximations of azimuthal shifts relative to midline by using the abnormal interaural time and level differences resultant from OM. Peak latencies for the click-evoked auditory brainstem response have been shown to be delayed by 0.44 ms on average and thresholds increased by approximately 20 dB in young children with middle ear effusion compared with audiometrically normal controls (Owen et al. 1993). 
Though ostensibly small, introducing a 0.44 ms interaural time difference (ITD), in cases of unilateral or asymmetrical OM, translates to a 45° shift in azimuthal position relative to midline based on the expected skull radius of a 6- to 12-month-old infant (7.16 cm based on average growth charts of head circumference, Center for Disease Control 2000) using the following formula: \(\tau = \frac{3\alpha \sin \theta}{c}\), where τ is the ITD, α is the radius of the head, θ is the azimuth of the sound source, and c is the speed of sound in air (∼345 m/s) at standard temperature and pressure. More dramatically, a 20 dB interaural level difference induced by OM translates to a 90° shift in azimuthal position relative to midline for higher frequency acoustic components (Feddersen et al. 1957). Even larger interaural time and level difference distortions than those reported here for humans with middle ear effusions have been observed secondary to ear canal blocking in animal models (Lupo et al. 2011). It is not clear how often binaural timing and level difference cues conflict in children during bouts of OM or what effects such interaural cue conflicts might have on spatial hearing. Nevertheless, it is clear that the combined effect of conductive hearing loss paired with a loss of temporal fidelity and disorganized binaural cues can substantially degrade the quality of afferent signals transmitted to brain areas that represent and shape our perceptions of the auditory world.
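The azimuthal shift quoted above can be checked numerically. The following is a minimal sketch, assuming the ITD relation takes the form τ = 3α sin θ / c with the head radius and speed of sound given in the text; the function and constant names are illustrative and do not come from the cited studies.

```python
import math

# Values taken from the text; the names are illustrative assumptions.
HEAD_RADIUS_M = 0.0716        # 7.16 cm skull radius, infant aged 6 to 12 months
SPEED_OF_SOUND_M_S = 345.0    # approximate speed of sound in air


def itd_seconds(azimuth_deg, radius_m=HEAD_RADIUS_M, c=SPEED_OF_SOUND_M_S):
    """ITD (s) for a source at the given azimuth: tau = 3 * a * sin(theta) / c."""
    return 3.0 * radius_m * math.sin(math.radians(azimuth_deg)) / c


def azimuth_deg_from_itd(itd_s, radius_m=HEAD_RADIUS_M, c=SPEED_OF_SOUND_M_S):
    """Invert the same relation to get the apparent azimuth (degrees) for an ITD."""
    ratio = itd_s * c / (3.0 * radius_m)
    if abs(ratio) > 1.0:
        raise ValueError("ITD exceeds the maximum this head radius can produce")
    return math.degrees(math.asin(ratio))


# A 0.44 ms delay in one ear, as reported for middle ear effusion.
shift_deg = azimuth_deg_from_itd(0.44e-3)
print(f"Apparent azimuthal shift: {shift_deg:.1f} degrees")
```

Under these assumptions, a 0.44 ms delay corresponds to an apparent shift of roughly 45° from midline, in agreement with the figure given in the text.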

Critically, degraded afferent signaling does not always accompany OM. In fact, in a large sample of prospectively followed infants (≤1 year) diagnosed with OM, only 15% demonstrated hearing thresholds greater than 25 dB HL, while just 6% had threshold shifts that exceeded 35 dB HL (Gravel and Wallace 2000). The resilience of hearing sensitivity in the presence of middle ear effusion can be attributed to the transmission properties of the middle ear, which are relatively unaffected by large, yet incomplete, reductions in tympanum volume. Animal and human temporal bone studies have shown that filling the middle ear with saline or even high viscosity oil has a negligible effect on middle ear transmission until the tympanum is completely full (Ravicz et al. 2004; Qin et al. 2010). Even small bubbles in the fluid introduce a sufficient volume in the middle ear space to improve mechanical transmission. Therefore, while the presence of middle ear effusion will likely produce an abnormal tympanogram (a middle ear transmission metric often used to diagnose OM), the quality of the afferent signal transmitted to the brain may be unaffected. However, this should not lessen the urgency of developing appropriate methods for diagnosing, treating and testing these children. Based on the latest census data, the US population under 5 years of age is 21.3 million (www.census.gov). Given the previously stated estimates that, of the 80% of children who experience OM, 15% will have hearing thresholds >25 dB HL, the number of at-risk children under 5 years of age is 2.6 million (12% of that age group). The prevalence of amblyopia, by contrast, is estimated at 2.1% of preschool and school-age children (Webber and Wood 2005).
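The at-risk estimate above follows from multiplying the three figures quoted in the text. A minimal sketch of that arithmetic is given below; the variable and function names are illustrative, and the calculation assumes the 80% and 15% estimates apply uniformly to the under-5 population.

```python
# Figures quoted in the text; names are illustrative assumptions.
US_POPULATION_UNDER_5 = 21_300_000   # census estimate
FRACTION_WITH_OM = 0.80              # children who experience OM
FRACTION_OF_OM_WITH_CHL = 0.15       # OM cases with thresholds > 25 dB HL


def at_risk_estimate(population, frac_om, frac_chl_given_om):
    """Return (number at risk, share of the population at risk)."""
    at_risk = population * frac_om * frac_chl_given_om
    return at_risk, at_risk / population


at_risk, share = at_risk_estimate(
    US_POPULATION_UNDER_5, FRACTION_WITH_OM, FRACTION_OF_OM_WITH_CHL
)
print(f"{at_risk / 1e6:.1f} million at risk ({share:.0%} of the age group)")
```

The product is about 2.6 million children, or 12% of the age group, which is the basis for the comparison with the 2.1% prevalence of amblyopia.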

Basic research studies linking developmental auditory deprivation and central auditory plasticity

As stated above, the central thesis of this review paper is that the primary risk factor for abnormalities in brain physiology, auditory perception, and speech receptivity that can accompany OM in childhood is not the presence of the disease state itself but the degradation of the afferent signal that can arise from OM. This hypothesis is supported by thousands of studies that characterize the development of higher (i.e., midbrain, thalamic, and cortical) sensory brain areas and describe significant alterations in the response properties of individual neurons, as well as their coordinated arrangement into functional circuits and topographic maps, based upon the presence and quality of sensory-evoked afferent input (for recent auditory clinical and basic auditory reviews, the reader is referred to Kral et al. 2005; Sharma et al. 2006; Dahmen and King 2007; Keuroghlian and Knudsen 2007; Sanes and Bao 2009). The influence of sensory-evoked activity patterns on the formation of functional brain circuits is not expressed equally across development. Rather, afferent input patterns have the strongest instructive influence on neural response properties during finite “critical periods” of postnatal development, when those circuits are first forming. Although critical periods are generally conceptualized as adaptive features through which neural circuits are shaped to efficiently represent the particular characteristics of an individual’s sensory milieu, brain plasticity mechanisms are agnostic as to whether these instructive sensory signals are “good” or “bad” (i.e., normal or degraded). In the event that the presence of OM creates weak and temporally degraded sound-evoked activity patterns during critical periods of brain development, the adverse effects of these degraded signals on the formation of neural circuits that mediate perception and behavior could persist long after the middle ear pathology has resolved. 
Because a degraded afferent signal would only be expected in a minority of children that test positive for OM, there is no reason to expect a strong overall correlation between OM and central processing deficits. Specifically, we hypothesize that amblyaudia will only be observed if (1) the diagnosis of OM is accompanied by a positive indication of degraded auditory signal transmission and (2) the experience of degraded auditory signals overlaps extensively with critical periods of development for higher auditory brain regions. In the following sections, we review the basic and clinical literature germane to this hypothesis.

Studies of auditory brain development in laboratory animals strongly support the link between degraded afferent signals, critical periods of development and long-lasting deficits in brain physiology and perception. The most commonly used approach in animal studies involves physically blocking or interfering with the sound transmission mechanisms of the external ear or middle ear (often by plugging or ligating the external auditory meatus or extirpating the malleus) rather than creating an infection in the middle ear space. Using these methods, investigators are able to introduce a moderate-to-severe CHL at various stages of postnatal development that approximates the degraded auditory transmission that can accompany OM.

The effects of CHL during early development are evident even at the level of cellular morphology within the first- and second-order auditory brainstem nuclei. For example, chronic external ear blockage is associated with significantly reduced cell body diameter and dendritic arborization in corresponding regions of the cochlear nucleus and superior olivary complex (Webster and Webster 1977; Coleman and O’Connor 1979; Webster and Webster 1979; Conlee and Parks 1981, 1983). These effects are particularly striking in nucleus laminaris, an avian brainstem nucleus analogous to the medial superior olive in mammals. Nucleus laminaris features an array of bipolar neurons whose ventral and dorsal dendrites are exclusively contacted by afferents from the contralateral and ipsilateral ear, respectively. Chicks reared with unilateral ear plugs show profound, frequency-specific disruption to the normally symmetric dendritic fields in nucleus laminaris neurons, such that dendrites projecting from neurons with high characteristic frequencies are smaller on both sides of the brain, yet only on dorsal or ventral aspects corresponding to inputs from the deprived ear. The opposite proved true for neurons with low characteristic frequencies (Gray et al. 1982; Smith et al. 1983). These frequency-specific effects occurred despite the presence of a presumably flat spectrum hearing loss, and might be attributed to the enhancement of low-frequency bone-conducted stimuli and internal noise that occurs secondary to occlusion of the external auditory canal, resulting in frequency-specific differential activity impinging on nucleus laminaris neurons. In addition to alterations in cellular morphology, investigators have also reported that CHL is associated with reduced levels of protein synthesis, cellular metabolism and synaptic activity throughout the central auditory pathways. 
The functional silencing observed with these activity-dependent measures is often expressed at a similar level to that observed with complete deafferentation through cochlear ablation (Tucci et al. 1999; Hutson et al. 2007; Hutson et al. 2008).

CHL has also been found to disrupt temporal response properties of auditory cortex neurons. For example, recording from auditory cortex neurons in the acute thalamocortical brain slice of normally hearing rodents reveals an abrupt decline in the amount of inhibitory and excitatory synaptic depression shortly after the onset of hearing. By contrast, levels of synaptic depression measured after the onset of hearing in animals with bilateral CHL appear similar to pre-hearing levels (Xu et al. 2007; Takesian et al. 2010). These findings demonstrate that CHL can interfere with adaptation rates to temporally modulated synaptic inputs within the auditory cortex. Moreover, because these recordings were obtained in isolated brain slices, they explicitly show that plasticity in cortical response properties can arise locally and is not solely inherited from ongoing alterations in peripheral or brainstem response properties.

While these studies undeniably relate the ongoing presence of CHL to pathology in auditory brain nuclei, they are better suited as animal models for brain plasticity that may occur during active bouts of OM, rather than the enduring amblyaudia that may stem from a history of OM in childhood. The neurobiological basis for amblyaudia can only be addressed through studies that characterize the structural and functional properties of auditory brain circuits in animals that have documented histories of degraded auditory transmission but normal hearing sensitivity at the time of testing. Far fewer studies have explored the effect of temporary auditory deprivation on brain function or behavior, but those that have examined this relationship arrived at equally unambiguous conclusions regarding the maladaptive effects of degraded auditory transmission on neural organization.

In these studies, unilateral CHL is introduced by surgically ligating or plugging the external meatus at an early age and then removing the obstruction months later, prior to making neurophysiological recordings from neurons in the auditory midbrain or cortex. By and large, these studies find that the normal binaural balance between the representation of sounds delivered to each ear is disrupted, despite the fact that the developmentally deprived ear is audiometrically normal at the time of testing (Clopton and Silverman 1977; Silverman and Clopton 1977; Moore and Irvine 1981; Popescu and Polley 2010). Unit discharge rates evoked by stimuli presented to the developmentally nondeprived ear were augmented, while those to the deprived ear were suppressed, effectively reweighting the contralateral response bias and interfering with the precisely calibrated tuning for interaural level difference cues that play an essential role in binaural hearing. Importantly, many of these plasticity effects are limited to critical periods early in postnatal development, as the effects of reversible unilateral blockade are substantially reduced when performed in mature animals (Clopton and Silverman 1977; Popescu and Polley 2010).

Not surprisingly, behavioral studies in animals that have undergone temporary CHL have found persistent abnormalities in azimuthal sound localization accuracy, even though hearing sensitivity was equivalent in both ears at the time of testing, suggesting a clear behavioral parallel to the physiological plasticity described above (Clements and Kelly 1978; Knudsen et al. 1984a). Sound localization deficits can last for months, depending on when, during postnatal development, the ear canal is blocked (Knudsen et al. 1984b). More recent investigations in ferrets have shown that intensive perceptual training can restore localization accuracy following unilateral auditory deprivation and that this plasticity relies critically on the function of descending corticocollicular connections, highlighting the potential importance of auditory cortex circuits in the expression of amblyaudia (Kacelnik et al. 2006; Bajo et al. 2010; Nodal et al. 2010).

Comparing the basic and clinical literature on otitis media and amblyaudia

If basic research studies find such a clear effect of auditory deprivation on brain and behavior, why are the data linking childhood OM to amblyaudia so equivocal? We propose that this discrepancy can be traced to four principal causes:

First, animal models of OM typically employ continuous monaural or binaural deprivation for weeks or months. In contrast, a single bout of OM is expected to last approximately 4 to 6 weeks, followed by up to 60 OM-free weeks, depending on disease severity. Moreover, levels of CHL reported in animal studies typically range from 20 to 50 dB, whereas CHL stemming from OM in humans can be substantially milder. Additionally, while the presence of amblyaudia is often probed immediately following reinstatement of hearing in animal models, years of “typical” auditory experience often precede probes of amblyaudia in human studies. In other words, animal studies could be said to model the worst-case scenario of auditory signal degradation associated with OM. Clearly, data from animal studies that utilize more realistic levels and durations of auditory deprivation would be of considerable interest.

Second, basic research studies uniformly point toward the paramount importance of developmental age when assessing the impact of auditory deprivation on brain and behavior. In many of the studies cited above, the same manipulation that profoundly affected neural representations of auditory stimuli and perceptual acuity in young animals had no effect when performed at later ages. The central importance of developmental critical periods for normal auditory perception is firmly established in the context of language development and even in the efficacy of cochlear implants in congenitally deaf children (Dorman et al. 2007; Kuhl 2010). However, studies linking OM to amblyaudia often do not take the timing of OM episodes into account, nor is it known which brain areas are most adversely affected by OM and when, during development, their organization is most susceptible to the structure of afferent activity patterns.

Third, while animal models of OM have probed anatomy, physiology and binaural perception of their subjects, most human studies have assessed speech, language, behavioral, and academic skills of children with histories of OM. These developmental markers may have the greatest external validity to health care providers, educators, and policy makers but are the most challenging to understand in terms of their underlying neural substrates. Instead, psychophysical assessments that relate to established neural pathways for binaural signal representations may prove more valuable in the short-term when addressing amblyaudia.

Finally, and perhaps most importantly, the independent variables in the animal and human studies are very often different. Animal studies that describe striking changes in brain and behavior often bypass the middle ear effusion altogether and directly degrade afferent signal quality by disrupting the sound transmission mechanisms of the middle or outer ear. Human studies, by and large, relate amblyaudia (or lack thereof) to the presence of OM. As described earlier, significant degradation of the afferent signal (CHL of >25 dB HL) would only be expected in <15% of children diagnosed with OM, suggesting that subjects in the OM-positive group, from whom amblyaudia would be expected, are substantially intermingled with—or even outnumbered by—subjects who would not be expected to present with amblyaudia.
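The dilution argument above can be made concrete with a simple weighted average. The sketch below is purely illustrative: the 15% figure is the upper bound quoted in the text, whereas the deficit size of 1.0 standard deviation in children with CHL is a hypothetical value chosen for the example and is not taken from any cited study.

```python
def diluted_group_effect(fraction_affected, effect_in_affected,
                         effect_in_unaffected=0.0):
    """Mean effect in a mixed group, as a weighted average of its two subgroups."""
    return (fraction_affected * effect_in_affected
            + (1.0 - fraction_affected) * effect_in_unaffected)


FRACTION_WITH_CHL = 0.15         # upper bound from the text (CHL > 25 dB HL)
HYPOTHETICAL_DEFICIT_SD = 1.0    # assumed deficit in the CHL subgroup (illustrative)

observed = diluted_group_effect(FRACTION_WITH_CHL, HYPOTHETICAL_DEFICIT_SD)
print(f"Mean deficit across the whole OM-positive group: {observed:.2f} SD")
```

Under these assumptions, a deficit of one standard deviation confined to the CHL subgroup would appear as a mean shift of only 0.15 standard deviations when all OM-positive children are pooled, which may help explain why studies that group by OM diagnosis alone report equivocal results.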

This last point could be addressed experimentally in human studies that longitudinally characterize the auditory afferent signal quality in the form of elevated hearing thresholds at multiple points during infant development. While hearing threshold is just one of many potential metrics that index the quality of the afferent signal reaching the brain (albeit not even the most direct), it still offers a far more direct correlate than a positive diagnosis for OM alone. Indeed, when the question “Is early OM associated with amblyaudia?” is rephrased as “Is early OM that also causes CHL associated with amblyaudia?” the answer becomes much less equivocal. Figures 1A and B illustrate that while prospective longitudinal and randomized control studies that attempt to relate OM to amblyaudia have equivocal outcomes, the available data suggest that a history of CHL during childhood OM is clearly and deleteriously associated with auditory pathophysiology and deficits in binaural listening as well as receptive language skills. Overall, 65% of all study samples reported symptoms of amblyaudia following a history of childhood OM. Further analysis revealed that while only 53% of study samples show amblyaudia as a sequela of OM, 89% of studies reported amblyaudia as a consequence of OM occurring along with CHL. Tables 1 and 2 expand the simplified data profiles in Figure 1 to include sample sizes, ages of participants and metrics of amblyaudia employed in studies listed.

Early, degraded afferent signal quality (ASQ) is clearly associated with amblyaudia, while otitis media (OM) is ambiguously related. Prospective studies that have investigated the relationship between OM or OM-associated degraded ASQ and amblyaudia are plotted in (A) and (B), respectively. Each number represents a cohort of participants (multiple research reports in some cases). A study was considered to relate ASQ to amblyaudia if hearing sensitivity was assessed repeatedly (three or more times during years 1 and/or 2 of life), and the investigator attempted to associate ASQ with amblyaudia. White squares represent studies that have used language, speech, reading or academic outcome measures. Blue squares represent studies that have employed direct auditory perceptual or physiologic testing as outcome measures. The corresponding studies for each symbol are indicated by the number in the center of the symbol, which references the research articles in the key below.

Delays in the maturation of neural circuitry that subserve speech comprehension represent one of the longest-standing and most contentious central sequelae associated with childhood OM. Purported delays in receptive language need not be independent of irregularities in perceptual thresholds, as difficulties associated with listening in complex auditory environments (such as a classroom) could also be expected to interfere with classroom learning and continued refinement of speech and language skills. Two recent reviews have concluded that while a history of OM is associated with auditory processing and speech perception deficits, convincing evidence of deficits in speech production, receptive and expressive language, as well as academic achievement does not currently exist (Roberts et al. 2004a; Jung et al. 2005). These sentiments were echoed in a recent meta-analysis of prospective and randomized control trials that have examined the relation between (1) OM and language development as well as (2) OM-mediated CHL and language development (Roberts et al. 2004b). Consistent with the central hypothesis we advance here, these reviews also concluded that in most studies, hearing is often not assessed regularly enough (if at all) to investigate the role of CHL in later development, despite the fact that it is hearing loss and not the presence of excessive mucin in the middle ear space that may underlie abnormal imprinting of speech patterns and later developmental deficits. In fact, Roberts and colleagues (2004b) identified only three studies, published between 1966 and 2002, that met the authors’ criteria and attempted to correlate transient conductive hearing loss with general outcome measures of language (studies numbered 9, 18, and 19 in Fig. 1). 
Again, looking only at studies that relate poor auditory processing and language skills to a history of hearing loss and OM rather than OM alone, the evidence unambiguously shows that early CHL increases a child’s risk for abnormalities in brainstem physiology, binaural hearing and receptive language skills (Fig. 1B and Table 2).

Returning to the third discrepancy between the animal and human literature, abnormal physiology of the auditory brainstem has consistently been observed in children with chronic OM and CHL. Multiple studies have shown that years after the resolution of CHL and OM, absolute and interpeak wave latencies of the auditory brainstem response are abnormally delayed, potentially suggesting immaturity in neural conduction (Folsom et al. 1983; Anteby et al. 1986; Gunnarson and Finitzo 1991; Hall and Grose 1993; Ferguson et al. 1998; Gravel et al. 2006). In addition, elevation of the brainstem mediated contralateral acoustic stapedial reflex threshold is also observed in children with a history of OM and CHL, further suggesting persistent dysfunction of the neuronal circuitry within the auditory brainstem (Gravel et al. 2006).

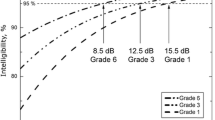

The link between OM and amblyaudia has also been made more explicit in a subset of studies through the use of carefully controlled psychophysical tasks that map onto known principles of binaural stimulus representations in the brain, rather than tests of language or scholastic ability. The masking level difference (MLD) is a perceptual test that affords researchers an objective and parametric assessment of perceptual acuity that also relates to the real-world experience of hearing in noisy environments, such as classrooms or gymnasiums, by measuring a participant’s sensitivity to interaural time and amplitude cues. This test has been widely employed in studies that have prospectively and periodically documented CHL in conjunction with OM or retrospectively examined children with histories of chronic OM and reported CHL. Together, these studies have consistently shown disrupted binaural processing for years following reinstatement of hearing through either spontaneous resolution of effusion or placement of tympanostomy tubes (Moore et al. 1991; Pillsbury et al. 1991; Hall et al. 1995; Gravel et al. 1996; Hogan and Moore 2003). The same effect on MLD thresholds has been obtained in ferrets reared with unilateral earplugs, providing a direct link between the animal and human literature on developmental auditory deprivation (Moore et al. 1999).

In addition to binaural unmasking (MLD), children with histories of OM and reversible CHL have also been shown to localize sound sources less accurately and demonstrate deficits in monaural spectrotemporal processing (Besing and Koehnke 1995; Hall and Grose 1994; Hall et al. 1998). Similar decrements in binaural processing have also been demonstrated in children with reversible CHL secondary to aural atresia that has been surgically corrected (Gray et al. 2009; Wilmington et al. 1994). These shared abnormalities in perceptual outcomes of early reversible CHL suggest a causative role of developmental auditory deprivation in later perception regardless of the specific mechanisms that underlie the conductive hearing loss.

On a final note, even if amblyaudia is a legitimate sequela of untreated OM, the specific pathophysiological and perceptual sequelae are unlikely to persist past adolescence. While the precise time-course with which amblyaudia “normalizes” has yet to be delineated, it is clear that these deficits largely resolve through a slow recovery over a few years of typical auditory experience (Hall et al. 1995). Similarly, delays in language skills have also been reported to be transient in these children, with early testing demonstrating delays while later scores are normal (Maw et al. 1999). However, we would argue that impermanence does not imply a developmentally trivial role for this sensory disorder. Due to the cumulative nature of childhood development, even transient perceptual and language delays are expected to feed forward to later behavior and academic achievement. In other words, even if the amblyaudia resolves by late childhood, the linguistic, cognitive and social functions coming online at these ages would be deleteriously affected by the absence of actionable auditory inputs. This raises the possibility that a transient auditory disorder could have “ripple effects” that extend into adolescence and beyond.

Practical considerations for clinical studies linking OM to amblyaudia

If the quality of the afferent signal impinging upon key brain areas during bouts of OM is the principal determinant of amblyaudia, how is this degraded signal best measured? The most direct measurement would be to characterize the spatiotemporally distributed patterns of spiking activity in the auditory nerve and brainstem. As this is clearly impossible in humans, what available method most closely approximates the afferent signal?

Behavioral tests that derive hearing thresholds (and, by extension, establish conductive hearing loss) are clearly more effective in predicting amblyaudia than the presence of the disease state alone and may therefore serve as an acceptable proxy for afferent signal quality. On the other hand, physiological and bioacoustic markers such as otoacoustic emissions, the auditory brainstem response and even event-related potentials recorded from the brain may ultimately prove to be the preferred indicators of amblyaudia, as they are objective by definition and relate directly to various sources of auditory transduction and neural signal propagation. Ideally, the techniques used to assess afferent signal quality in studies of OM could be quickly executed in the clinical environment and would provide a reliable and objective characterization of the afferent signal. No single technique currently fulfills all of these requirements. For instance, hearing tests (i.e., audiometry) are time-consuming and subjective, and they are typically performed in a free field because headphone placement is often considered impractical at the ages when OM is most common, when children are unable or unwilling to perform the task under headphones. Thus, hearing thresholds measured in a free field may primarily indicate the sensitivity to stimuli presented to the “better ear”, making CHL associated with unilateral or asymmetric OM difficult to detect. Auditory brainstem response testing would most clearly and objectively characterize afferent signal quality in terms of the latency and amplitude of synchronous auditory nerve and brainstem neural firing; however, this technique is time-consuming and can necessitate sedation in young children. By contrast, tympanometric testing is rapid, objective, ear specific, and fairly sensitive to disruption of middle ear transmission from CHL, but it is typically performed at a single frequency (226 Hz) and does not directly assess the magnitude or phase of the afferent signal. Table 3 lists some of the advantages and disadvantages associated with clinically available diagnostic techniques to track the afferent signal quality of children with OM. Future studies that compare these various metrics in OM-positive children will be essential to reach a more complete understanding of amblyaudia.
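The “better ear” limitation of free-field audiometry can be made concrete with a small sketch. It rests on a deliberately simplified assumption, namely that a free-field threshold equals the threshold of the more sensitive ear, and the function names and threshold values are hypothetical rather than drawn from any clinical protocol.

```python
def free_field_threshold(left_db_hl, right_db_hl):
    """Approximate a free-field threshold as that of the better ear.

    Simplifying assumption for illustration only: detection in the sound
    field is governed entirely by the more sensitive (lower-threshold) ear.
    """
    return min(left_db_hl, right_db_hl)


def interaural_asymmetry(left_db_hl, right_db_hl):
    """Absolute difference between ear-specific thresholds, in dB."""
    return abs(left_db_hl - right_db_hl)


# Hypothetical child with a unilateral CHL: left ear 10 dB HL, right ear 35 dB HL.
left, right = 10.0, 35.0
print(free_field_threshold(left, right))   # 10.0
print(interaural_asymmetry(left, right))   # 25.0
```

Under this assumption, the free-field result of 10 dB HL gives no hint of the 25 dB asymmetry, which only ear-specific measurement would reveal.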

Also of interest are the outcome measures used to diagnose amblyaudia. As described earlier, metrics that assess language and academic skills have predominated in OM studies. While these measures are, understandably, of great interest to caregivers and clinicians, they are also susceptible to variables such as cognitive ability and family environment that can introduce a significant amount of variance into the diagnosis and analysis of treatment efficacy. Assessing the binaural listening skills or brain physiology of children with histories of OM-associated CHL offers a direct means to more accurately and reliably diagnose the presence of amblyaudia. The advantages and disadvantages of some outcome measures used to characterize amblyaudia are listed in Table 4.

Overall, physiologic and perceptual testing in animal models as well as humans suggests that the connection between OM-associated degradation of afferent signal quality and subsequent neurological impairment is substantially clearer than generally believed. These data further inform two practical considerations for management of, or research involving, children with chronic OM. (1) The afferent signal quality of children with OM should be assessed longitudinally to accurately characterize the nature and timing of their sensory deprivation, with detection of degraded afferent input shifting the cost-benefit analysis towards more aggressive treatment. This suggestion agrees with the most recent recommendations of the American Academy of Pediatrics (2004). (2) However, while the current clinical practice guidelines state that hearing status be based on the sensitivity of the child’s better hearing ear, animal models of amblyaudia have repeatedly shown that asymmetric, degraded afferent input results in profound alterations in the form and function of binaural circuits and in performance on dichotic listening tasks. Therefore, we suggest that afferent signal quality in the “worse” ear should also be considered in the cost-benefit analysis of treatment.

It is also likely that the timing of deprivation plays a key role in the specific nature of amblyaudia, although this matter is not yet completely elucidated in the animal literature, much less in human studies. The morphological and physiologic changes in the central auditory system that result from well-controlled, developmentally degraded afferent signal quality are only beginning to be characterized in animal models. Some of the particularly important questions to be addressed in animal studies are whether the central nervous system completely normalizes following reinstatement of hearing and over what time-course such recovery might occur. Ongoing studies will relate these physiologic abnormalities to perception and may inform targeted neuroplasticity treatments, which could ultimately be translated to the clinical population.

References

American Academy of Family Physicians, American Academy of Otolaryngology-Head and Neck Surgery, American Academy of Pediatrics Subcommittee on Otitis Media with Effusion (2004) Clinical practice guideline: otitis media with effusion. Pediatrics 113:1412–1429

Anteby I, Hafner H, Pratt H, Uri N (1986) Auditory brain-stem evoked-potentials in evaluating the central effects of middle-ear effusion. Int J Pediatr Otorhinolaryngol 12:1–11

Bajo VM, Nodal FR, Moore DR, King AJ (2010) The descending corticocollicular pathway mediates learning-induced auditory plasticity. Nat Neurosci 13:253–260

Besing JM, Koehnke J (1995) A test of virtual auditory localization. Ear Hear 16:220–229

Center for Disease Control (2000) Birth to 36 months: boys head circumference-for-age and weight-for-length percentiles. Available at: http://www.cdc.gov/growthcharts/clinical_charts.htm#Set1. Accessed 7 February 2011.

Clements M, Kelly JB (1978) Auditory spatial responses of young guinea-pigs (Cavia porcellus) during and after ear blocking. J Comp Physiol Psychol 92:34–44

Clopton BM, Silverman MS (1977) Plasticity of binaural interaction. II. Critical period and changes in midline response. J Neurophysiol 40:1275–1280

Coleman JR, O’Connor P (1979) Effects of monaural and binaural sound deprivation on cell-development in the anteroventral cochlear nucleus of rats. Exp Neurol 64:553–566

Conlee JW, Parks TN (1981) Age-dependent and position-dependent effects of monaural acoustic deprivation in nucleus magnocellularis of the chicken. J Comp Neurol 202:373–384

Conlee JW, Parks TN (1983) Late appearance and deprivation-sensitive growth of permanent dendrites in the avian cochlear nucleus (nuc magnocellularis). J Comp Neurol 217:216–226

Dahmen JC, King AJ (2007) Learning to hear: plasticity of auditory cortical processing. Curr Opin Neurobiol 17:456–464

Dorman MF, Sharma A, Gilley P, Martin K, Roland P (2007) Central auditory development: evidence from CAEP measurements in children fit with cochlear implants. J Commun Disord 40:284–294

Engel J, Anteunis L, Volovics A, Hendriks J, Marres E (1999) Prevalence rates of otitis media with effusion from 0 to 2 years of age: healthy-born versus high-risk-born infants. Int J Pediatr Otorhinolaryngol 47:243–251

Feddersen WE, Sandel TT, Teas DC, Jeffress LA (1957) Localization of high-frequency tones. J Acoust Soc Am 29:988–991

Feldman HM, Dollaghan CA, Campbell TF, Colborn DK, Kurs-Lasky M, Janosky JE, Paradise JL (1999) Parent-reported language and communication skills at one and two years of age in relation to otitis media in the first two years of life. Pediatrics 104:e52

Ferguson MO, Cook RD, Hall JW, Grose JH, Pillsbury HC (1998) Chronic conductive hearing loss in adults—effects on the auditory brainstem response and masking-level difference. Arch Otolaryngol Head Neck Surg 124:678–685

Folsom RC, Weber BA, Thompson G (1983) Auditory brain-stem responses in children with early recurrent middle-ear disease. Ann Otol Rhinol Laryngol 92:249–253

Freid VM, Makuc DM, Rooks RN (1998) Ambulatory health care visits by children: principal diagnosis and place of visit. Vital Health Stat 13:1–23

Friel-Patti S, Finitzo T (1990) Language learning in a prospective study of otitis media with effusion in the first two years of life. J Speech Hear Res 33:188–194

Gordon KA, Tanaka S, Papsin BC (2005) Atypical cortical responses underlie poor speech perception in children using cochlear implants. Neuroreport 16:2041–2045

Gravel JS, Wallace IF (1992) Listening and language at 4 years of age—effects of early otitis-media. J Speech Hear Res 35:588–595

Gravel JS, Wallace IF (2000) Effects of otitis media with effusion on hearing in the first 3 years of life. J Speech Lang Hear Res 43:631–644

Gravel JS, Wallace IF, Ruben RJ (1996) Auditory consequences of early mild hearing loss associated with otitis media. Acta Oto-Laryngologica 116:219–221

Gravel JS, Roberts JE, Roush J, Grose J, Besing J, Burchinal M, Neebe E, Wallace IF, Zeisel S (2006) Early otitis media with effusion, hearing loss, and auditory processes at school age. Ear Hear 27:353–368

Gray L, Smith Z, Rubel EW (1982) Developmental and experiential changes in dendritic symmetry in n-laminaris of the chick. Brain Res 244:360–364

Gray L, Kesser B, Cole E (2009) Understanding speech in noise after correction of congenital unilateral aural atresia: effects of age in the emergence of binaural squelch but not in use of head-shadow. Int J Pediatr Otorhinolaryngol 73:1281–1287

Gunnarson AD, Finitzo T (1991) Conductive hearing-loss during infancy—effects on later auditory brain-stem electrophysiology. J Speech Hear Res 34:1207–1215

Hall JW, Grose JH (1993) The effect of otitis-media with effusion on the masking-level difference and the auditory brain-stem response. J Speech Hear Res 36:210–217

Hall JW, Grose JH (1994) Effect of otitis-media with effusion on comodulation masking release in children. J Speech Hear Res 37:1441–1449

Hall JW, Grose JH, Pillsbury HC (1995) Long-term effects of chronic otitis-media on binaural hearing in children. Arch Otolaryngol Head Neck Surg 121:847–852

Hall JW, Grose JH, Dev MB, Drake AF, Pillsbury HC (1998) The effect of otitis media with effusion on complex masking tasks in children. Arch Otolaryngol Head Neck Surg 124:892–896

Hall AJ, Maw AR, Steer CD (2009) Developmental outcomes in early compared with delayed surgery for glue ear up to age 7 years: a randomised controlled trial. Clin Otolaryngol 34:12–20

Hartley DEH, Moore DR (2003) Effects of conductive hearing loss on temporal aspects of sound transmission through the ear. Hear Res 177:53–60

Hartley DEH, Moore DR (2005) Effects of otitis media with effusion on auditory temporal resolution. Int J Pediatr Otorhinolaryngol 69:757–769

Hogan SCM, Moore DR (2003) Impaired binaural hearing in children produced by a threshold level of middle ear disease. J Assoc Res Otolaryngol 4:123–129

Hogan SC, Stratford KJ, Moore DR (1997) Duration and recurrence of otitis media with effusion in children from birth to 3 years: prospective study using monthly otoscopy and tympanometry. Br Med J 314:350–353

Holm VA, Kunze LH (1969) Effect of chronic otitis media on language and speech development. Pediatrics 43:833

Hutchings ME, Meyer SE, Moore DR (1992) Binaural masking level differences in infants with and without otitis-media with effusion. Hear Res 63:71–78

Hutson KA, Durham D, Tucci DL (2007) Consequences of unilateral hearing loss: time dependent regulation of protein synthesis in auditory brainstem nuclei. Hear Res 233:124–134

Hutson KA, Durham D, Imig T, Tucci DL (2008) Consequences of unilateral hearing loss: cortical adjustment to unilateral deprivation. Hear Res 237:19–31

Jung TTK, Alper CM, Roberts JE, Casselbrant ML, Eriksson PO, Gravel JS, Hellstrom SO, Hunter LL, Paradise JL, Park SK, Spratley J, Tos M, Wallace I (2005) Complications and sequelae. Ann Otol Rhinol Laryngol 114:140–160

Kacelnik O, Nodal FR, Parsons CH, King AJ (2006) Training-induced plasticity of auditory localization in adult mammals. PLoS Biol 4:627–638

Keuroghlian AS, Knudsen EI (2007) Adaptive auditory plasticity in developing and adult animals. Prog Neurobiol 82:109–121

Knudsen EI, Esterly SD, Knudsen PF (1984a) Monaural occlusion alters sound localization during a sensitive period in the barn owl. J Neurosci 4:1001–1011

Knudsen EI, Knudsen PF, Esterly SD (1984b) A critical period for the recovery of sound localization accuracy following monaural occlusion in the barn owl. J Neurosci 4:1012–1020

Kokko E (1974) Chronic secretory otitis media in children—clinical-study. Acta Otolaryngologica 327:7–44

Kral A, Tillein J, Heid S, Hartmann R, Klinke R (2005) Postnatal cortical development in congenital auditory deprivation. Cereb Cortex 15:552–562

Kuhl PK (2010) Brain mechanisms in early language acquisition. Neuron 67:713–727

Lupo JE, Koka K, Thornton J, Tollin DJ (2011) The effects of experimentally induced conductive hearing loss on spectral and temporal aspects of sound transmission through the ear. Hear Res 272:30–41

Maw R, Wilks J, Harvey I, Peters TJ, Golding J (1999) Early surgery compared with watchful waiting for glue ear and effect on language development in preschool children: a randomised trial. Lancet 353:960–963

McCormick DP, Baldwin CD, Klecan-Aker JS, Swank PR, Johnson DL (2001) Association of early bilateral middle ear effusion with language at age 5 years. Ambul Pediatr 1:87–90

Miccio AW, Gallagher E, Grossman CB, Yont KM, Vernon-Feagans L (2001) Influence of chronic otitis media on phonological acquisition. Clin Linguist Phon 15:47–51

Mody M, Schwartz RG, Gravel JS, Ruben RJ (1999) Speech perception and verbal memory in children with and without histories of otitis media. J Speech Lang Hear Res 42:1069–1079

Moore DR, Irvine DRF (1981) Plasticity of binaural interaction in the cat inferior colliculus. Brain Res 208:198–202

Moore DR, Hutchings ME, Meyer SE (1991) Binaural masking level differences in children with a history of otitis-media. Audiology 30:91–101

Moore DR, Hine JE, Jiang ZD, Matsuda H, Parsons CH, King AJ (1999) Conductive hearing loss produces a reversible binaural hearing impairment. J Neurosci 19:8704–8711

Nodal FR, Kacelnik O, Bajo VM, Bizley JK, Moore DR, King AJ (2010) Lesions of the auditory cortex impair azimuthal sound localization and its recalibration in ferrets. J Neurophysiol 103:1209–1225

Owen MJ, Norcross-Nechay K, Howie VM (1993) Brainstem auditory evoked potential in young children before and after tympanostomy tube placement. Int J Pediatr Otorhinolaryngol 25:105–117

Paradise JL, Dollaghan CA, Campbell TF, Feldman HM, Bernard BS, Colborn DK, Rockette HE, Janosky JE, Pitcairn DL, Sabo DL, Kurs-Lasky M, Smith CG (2000) Language, speech sound production, and cognition in three-year-old children in relation to otitis media in their first three years of life. Pediatrics 105:1119–1130

Paradise JL, Feldman HM, Campbell TF, Dollaghan CA, Colborn DK, Bernard BS, Rockette HE, Janosky JE, Pitcairn DL, Sabo DL, Kurs-Lasky M, Smith CG (2001) Effect of early or delayed insertion of tympanostomy tubes for persistent otitis media on developmental outcomes at the age of three years. N Engl J Med 344:1179–1187

Paradise JL, Feldman HM, Campbell TF, Dollaghan CA, Colborn DK, Bernard BS, Rockette HE, Janosky JE, Pitcairn DL, Sabo DL, Kurs-Lasky M, Smith CG (2003a) Early versus delayed insertion of tympanostomy tubes for persistent otitis media: developmental outcomes at the age of three years in relation to prerandomization illness patterns and hearing levels. Pediatr Infect Dis J 22:309–314

Paradise JL, Dollaghan CA, Campbell TF, Feldman HM, Bernard BS, Colborn DK, Rockette HE, Janosky JE, Pitcairn DL, Kurs-Lasky M, Sabo DL, Smith CG (2003b) Otitis media and tympanostomy tube insertion during the first three years of life: Developmental outcomes at the age of four years. Pediatrics 112:265–277

Paradise JL, Campbell TF, Dollaghan CA, Feldman HM, Bernard BS, Colborn DK, Rockette HE, Janosky JE, Pitcairn DL, Kurs-Lasky M, Sabo DL, Smith CG (2005) Developmental outcomes after early or delayed insertion of tympanostomy tubes. N Engl J Med 353:576–586

Paradise JL, Feldman HM, Campbell TF, Dollaghan CA, Rockette HE, Pitcairn DL, Smith CG, Colborn DK, Bernard BS, Kurs-Lasky M, Janosky JE, Sabo DL, O’Connor RE, Pelham WE (2007) Tympanostomy tubes and developmental outcomes at 9 to 11 years of age. N Engl J Med 356:248–261

Pearce PS, Saunders MA, Creighton DE, Sauve RS (1988) Hearing and verbal-cognitive abilities in high-risk preterm infants prone to otitis-media with effusion. J Dev Behav Pediatr 9:346–352

Pennie RA (1998) Prospective study of antibiotic prescribing for children. Can Fam Physician 44:1850–1856

Petinou KC, Schwartz RG, Gravel JS, Raphael LJ (2001) A preliminary account of phonological and morphophonological perception in young children with and without otitis media. Int J Lang Commun Disord 36:21–42

Pillsbury HC, Grose JH, Hall JW (1991) Otitis-media with effusion in children—binaural hearing before and after corrective surgery. Arch Otolaryngol Head Neck Surg 117:718–723

Popescu MV, Polley DB (2010) Monaural deprivation disrupts development of binaural selectivity in auditory midbrain and cortex. Neuron 65:718–731

Qin ZB, Wood M, Rosowski JJ (2010) Measurement of conductive hearing loss in mice. Hear Res 263:93–103

Rach GH, Zielhuis GA, Vandenbroek P (1988) The influence of chronic persistent otitis-media with effusion on language-development of 2-year-olds to 4-year-olds. Int J Pediatr Otorhinolaryngol 15:253–261

Ravicz ME, Rosowski JJ, Merchant SN (2004) Mechanisms of hearing loss resulting from middle-ear fluid. Hear Res 195:103–130

Roberts JE, Burchinal MR, Koch MA, Footo MM, Henderson FW (1988) Otitis-media in early-childhood and its relationship to later phonological development. J Speech Hear Disord 53:424–432

Roberts JE, Burchinal MR, Davis BP, Collier AM, Henderson FW (1991) Otitis-media in early-childhood and later language. J Speech Hear Res 34:1158–1168

Roberts JE, Burchinal MR, Zeisel SA, Neebe EC, Hooper SR, Roush J, Bryant D, Mundy M, Henderson FW (1998) Otitis media, the caregiving environment, and language and. Pediatrics 102:346

Roberts JE, Burchinal MR, Jackson SC, Hooper SR, Roush J, Mundy M, Neebe EC, Zeisel SA (2000) Otitis media in early childhood in relation to preschool language and school readiness skills among black children. Pediatrics 106:725–735

Roberts JE, Burchinal MR, Zeisel SA (2002) Otitis media in early childhood in relation to children’s school-age language and academic skills. Pediatrics 110:696–706

Roberts J, Hunter L, Gravel J, Rosenfeld R, Berman S, Haggard M, Hall J, Lannon C, Moore D, Vernon-Feagans L, Wallace I (2004a) Otitis media, hearing loss, and language learning: controversies and current research. J Dev Behav Pediatr 25:110–122

Roberts JE, Rosenfeld RM, Zeisel SA (2004b) Otitis media and speech and language: a meta-analysis of prospective studies. Pediatrics 113:E238–E248

Rovers MM, Straatman H, Ingels K, van der Wilt GJ, van den Broek P, Zielhuis GA (2000) The effect of ventilation tubes on language development in infants with otitis media with effusion: a randomized trial. Pediatrics 106:e42

Sanes DH, Bao SW (2009) Tuning up the developing auditory CNS. Curr Opin Neurobiol 19:188–199

Sharma M, Purdy SC, Newall P, Wheldall K, Beaman R, Dillon H (2006) Electrophysiological and behavioral evidence of auditory processing deficits in children with reading disorder. Clin Neurophysiol 117:1130–1144

Shriberg LD, Friel-Patti S, Flipsen P, Brown RL (2000) Otitis media, fluctuant hearing loss, and speech-language outcomes: a preliminary structural equation model. J Speech Lang Hear Res 43:100–120

Silverman MS, Clopton BM (1977) Plasticity of binaural interaction. I. Effect of early auditory deprivation. J Neurophysiol 40:1266–1274

Smith ZDJ, Gray L, Rubel EW (1983) Afferent influences on brain-stem auditory nuclei of the chicken—N-laminaris dendritic length following monaural conductive hearing-loss. J Comp Neurol 220:199–205

Takesian AE, Kotak VC, Sanes DH (2010) Presynaptic GABA(B) receptors regulate experience-dependent development of inhibitory short-term plasticity. J Neurosci 30:2716–2727

Teele DW, Klein JO, Rosner BA (1984) Otitis-media with effusion during the 1st 3 years of life and development of speech and language. Pediatrics 74:282–287

Tucci DL, Cant NB, Durham D (1999) Conductive hearing loss results in a decrease in central auditory system activity in the young gerbil. Laryngoscope 109:1359–1371

Vernon-Feagans L, Hurley M, Yont K (2002) The effect of otitis media and daycare quality on mother/child bookreading and language use at 48 months of age. J Appl Dev Psychol 23:113–133

Vernon-Feagans L, Hurley MM, Yont KM, Wamboldt PM, Kolak A (2007) Quality of childcare and otitis media: relationship to children’s language during naturalistic interactions at 18, 24, and 36 months. J Appl Dev Psychol 28:115–133

Wallace IF, McCarton CM, Bernstein RS, Gravel JS, Stapells DR, Ruben RJ (1988) Otitis-media, auditory-sensitivity, and language outcomes at one year. Laryngoscope 98:64–70

Webber AL, Wood J (2005) Amblyopia: prevalence, natural history, functional effects and treatment. Clin Exp Optom 88:365–375

Webster DB, Webster M (1977) Neonatal sound deprivation affects brain-stem auditory nuclei. Arch Otolaryngol Head Neck Surg 103:392–396

Webster DB, Webster M (1979) Effects of neonatal conductive hearing-loss on brain-stem auditory nuclei. Ann Otol Rhinol Laryngol 88:684–688

Wilmington D, Gray L, Jahrsdoerfer R (1994) Binaural processing after corrected congenital unilateral conductive hearing-loss. Hear Res 74:99–114

Xu H, Kotak VC, Sanes DH (2007) Conductive hearing loss disrupts synaptic and spike adaptation in developing auditory cortex. J Neurosci 27:9417–9426

Zumach A, Gerrits E, Chenault M, Anteunis L (2010) Long-term effects of early-life otitis media on language development. J Speech Lang Hear Res 53:34–43

Cite this article

Whitton, J.P., Polley, D.B. Evaluating the Perceptual and Pathophysiological Consequences of Auditory Deprivation in Early Postnatal Life: A Comparison of Basic and Clinical Studies. JARO 12, 535–547 (2011). https://doi.org/10.1007/s10162-011-0271-6

DOI: https://doi.org/10.1007/s10162-011-0271-6