Abstract

This review focuses on the “real world” implications of infection with HIV/AIDS from a neuropsychological perspective. Relevant literature is reviewed which examines the relationships between HIV-associated neuropsychological impairment and employment, driving, medication adherence, mood, fatigue, and interpersonal functioning. Specifically, the relative contributions of medical, cognitive, psychosocial, and psychiatric issues to whether someone with HIV/AIDS will be able to return to work, adhere to a complicated medication regimen, or safely drive a vehicle will be discussed. Methodological issues that arise in the context of measuring medication adherence or driving capacity are also explored. Finally, the impact of HIV/AIDS on mood state, fatigue, and interpersonal relationships is addressed, with particular emphasis on how these variables interact with cognition and independent functioning. The purpose of this review is to integrate neuropsychological findings with their real world correlates of functional behavior in the HIV/AIDS population.

Introduction

Having evolved from an untreatable illness with a dire prognosis into a more chronic illness that may have neuropsychiatric manifestations, HIV-1 has afforded researchers the opportunity to examine a variety of “real world” issues in persons with and without cognitive impairment. Few other examples of “the Lazarus Syndrome” exist in the history of medicine. Before the introduction of highly active antiretroviral therapy (HAART), affected persons usually stopped work and began to confront their own demise. After the introduction of HAART, most HIV-infected individuals experienced a remarkable turnaround in their medical status, and soon began to live life again as persons with a chronic, but perhaps nonfatal, illness. With this came a host of issues, including the need to manage a complex therapy regimen, consider returning to work, and engage in the complex tasks of everyday life, such as driving and independent household management (including management of their finances). The complication for those infected is that HIV represents a neuropsychiatric illness, with a significant proportion sustaining compromise of the central nervous system, with varying degrees of associated cognitive impairment. This review will focus upon the “real world” implications of infection with HIV from a neuropsychological perspective and new insights that can be gained in this arena regarding the relationship between neuropsychological functioning and real world capabilities.

Work and Employment in Individuals with HIV/AIDS

Since the advent of highly active antiretroviral therapies, many individuals living with HIV/AIDS have experienced a dramatic turnaround in their health status and their potential for a long life with a chronic illness. Additionally, newly infected but treated persons will not necessarily become disabled and stop working, but rather they will be challenged to persist and maintain employment in the context of a chronic illness with neuropsychiatric complications. Unique issues are raised for persons who are considering re-entering the workplace, perhaps after years of unemployment and/or disability, and who hope to maintain their new employment in the context of new health-related challenges.

There have now been a number of studies examining the neuropsychological factors which influence whether an individual will stop working in the context of HIV infection and whether he or she will successfully return to work (and maintain that position) following a period of unemployment. Although our discussion will primarily focus on the neuropsychological factors related to employment, it is also important to consider how these neuropsychological factors function within the larger context of psychosocial, medical, and psychiatric issues which may also impact employment in this population.

Unemployment Patterns in HIV-related Disease

Epidemiological research has shown that returning to work after having been identified as disabled is a difficult and complex process, and success in returning to work for various clinical populations is often disappointing. As of 2005, there was a 43-percentage-point differential in employment rates between healthy adults and those with disabilities, such that only 35% of adults with disabilities were employed while 78% of the general adult population was employed (Humphrey 2004). Individuals living with HIV/AIDS have followed a similar pattern. For example, a mail survey of almost 2000 residents living with HIV/AIDS (representing a 37% response rate) who had case managers at AIDS organizations in the Los Angeles area found that only 20% were working full time, 15% were working part-time, and 65% were not working at all (Brooks et al. 1999). This study did not address whether the individuals who were unemployed stopped working as a result of issues related to HIV or whether these results reflect continuous unemployment. In a three-year longitudinal study of 141 gay men, Rabkin et al. (2004) found that 20% were continuously employed full-time, 9% were continuously employed part-time, and 40% were continuously unemployed. Only 13% returned to work or increased their hours from part-time to full-time during the course of the study. Other studies have found that employment status for HIV+ adults tends to fluctuate over the course of their illness as a function of their medical status (Blalock et al. 2002), and those who do return to work typically do so at their previous occupational level (van Gorp et al. 2007).

This research indicates that only a small percentage of individuals with HIV/AIDS remain continuously employed, that few who stop working actually return to work, and that longer durations of unemployment for HIV+ individuals are associated with a lower probability of returning to work (Tyler 2002; van Gorp et al. 2007; Yasuda et al. 2001). This research illustrates that returning to work is a long and arduous process which many individuals who have been out of work for extended periods of time are not able to navigate. Therefore, it is important to identify the potential barriers and/or facilitators and incentives to returning to work in order to increase re-employment and/or encourage continuity of employment in this population.

Neuropsychological Factors Related to Employment

Both cross-sectional (Heaton et al. 1994; van Gorp et al. 1999) and longitudinal studies (Albert et al. 1995; van Gorp et al. 2007) have shown that unemployment is significantly associated with neuropsychological impairment. Heaton et al. (1994) found that among HIV+ individuals, only 7.9% of those with no neuropsychological impairment were unemployed, while 17.5% of those with neuropsychological impairment were unemployed. This finding controlled for potentially disabling medical symptoms and remained significant even when controlling for mild to moderate levels of depression. Unemployment rates increased as degree of neuropsychological impairment increased. Those with neuropsychological impairment who continued to work were five times more likely to report diminished work ability than those without neuropsychological impairment. This study confirmed that neuropsychological deficits influence vocational functioning in the HIV population, even if only mild impairments are evident.

The utility of neuropsychological testing in predicting employment status has now been well documented, both in HIV-infected patients and other clinical populations. The next logical step in understanding the relationship between neuropsychological impairment and employment status is to begin to study which neuropsychological domains predict success in returning to work. Having an understanding of the specific cognitive domains at play can then inform treatment interventions and remediation. In a meta-analytic review inclusive of a variety of clinical populations (epilepsy, TBI, HIV, and other various disorders), Kalechstein et al. (2003) found that although diminished performance across all neurocognitive domains was associated with a greater likelihood of unemployment, intellectual functioning, executive functioning, and memory showed the strongest relationship with employment status.

Executive functioning and memory have also been shown to be areas of specific neuropsychological impairment that may be related to employment status in the HIV/AIDS population. In a group of 130 predominantly symptomatic individuals with HIV-1 infection, van Gorp et al. (1999) examined differences in neuropsychological status between those who were classified as employed (either full or part time) or unemployed at study entry. These authors found that twice as many unemployed participants were neuropsychologically impaired (22%) when compared to those who were employed (11%). Logistic regression analyses confirmed that degree of neuropsychological impairment differentiated between employed and unemployed individuals even when controlling for age, CD4 count, and physical limitations. The specific neuropsychological domains which emerged as differentiating employed from unemployed individuals included memory (California Verbal Learning Test), response inhibition (Stroop Color Word Test), and cognitive set shifting (Trails B). The overall prediction rate for this regression equation was 73 percent.

Consistent with these results, Rabkin et al. (2004) also found that unemployed men performed more poorly than employed men on tasks of memory, executive functioning, and psychomotor speed when controlling for age, CD4 cell count, and physical limitations. Furthermore, only performance on executive functioning measures (namely, Trail Making B and the Stroop Color Word Test) predicted total number of hours worked over the course of a three-year period, although their effects were not as strong as the effects of receipt of benefits and depression history.

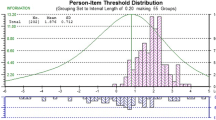

In a two-year longitudinal study, van Gorp et al. (2007) examined 118 men and women with HIV/AIDS who had stopped working after being diagnosed as HIV+ but who were actively looking for employment. All study participants had a history of steady employment (as defined by a period of paid employment for 12 months). These authors found that only one measure from a large battery of tests stood out as a robust predictor of return to work: an index of learning and memory from the California Verbal Learning Test (CVLT; total recall). In a regression analysis examining the relative contributions of age, duration of unemployment, CDC classification (i.e., having an AIDS diagnosis or not), and CVLT total score, only the latter emerged as a uniquely significant predictor of return to work. In fact, these authors found that the likelihood of finding employment doubled from the lowest to highest baseline scores on this measure, such that those with the highest scores on the CVLT had a 70% chance of finding employment (see Fig. 1). Motor speed was also a significant, although less consistent, predictor of return to work in this sample. IQ and basic academic abilities (reading, spelling, and mathematics) did not significantly predict return to work. Analysis of the longitudinal neuropsychological data revealed that none of the post-baseline assessments significantly predicted return to work, suggesting that the repeated measures data over the follow-up visits did not improve the prediction of job outcomes after baseline values were considered.

Fig. 1 CVLT Baseline Predicts Finding Employment. Note: Adapted from van Gorp, W., Rabkin, J.G., Ferrando, S.J., Mintz, J., Ryan, E., Borkowski, T., & McElhiney, M. (2007). Neuropsychiatric predictors of return to work in HIV/AIDS. Journal of the International Neuropsychological Society, 13, 80-89 (reprinted with permission)

Taken together, three major findings emerge from this literature. First, HIV+ individuals who are unemployed have a higher prevalence of cognitive impairment than employed individuals. Second, neuropsychological factors significantly differentiated employed from unemployed individuals, even when controlling for potential confounding variables, such as physical limitations, CD4 count, or age. Third, the specific patterns of cognitive impairment that are associated with employment status or returning to work involve learning and executive functioning. Overall, this research demonstrates the ecological validity of neuropsychological functioning in the context of employment, and therefore, neuropsychological evaluations can be viewed as a useful tool for predicting who may successfully return to work and for highlighting areas for potential cognitive remediation.

Neuropsychological Factors Related to Persistence of Work

The majority of the literature examining the neuropsychological factors related to employment in individuals with HIV/AIDS consists of either cross-sectional designs which examine differences between employed and unemployed participants, or longitudinal designs which investigate variables that predict who will return to work after a period of disability. However, with the advent of HAART, many individuals who become HIV positive are able to control their symptoms and potentially maintain their employment status, without having to endure a period of disability. Considering that research has shown many individuals who stop working often do not return to work, identifying factors which increase the probability that someone will remain employed, without enduring a period of disability, could significantly lower unemployment rates and improve quality of life for this population. To date, there has been very little research in this area.

van Gorp (2008) examined variables which influence work persistence in a sample of 118 HIV+ men and women originally used in their longitudinal study (van Gorp et al. 2007). They defined “persistence” as those individuals who maintained employment for 60% of the time after finding work during the course of the study. Twenty-eight out of 118 participants met criteria for this definition of persistence. Chi-square analysis demonstrated a greater proportion of neuropsychologically impaired individuals among those who were not persistent in maintaining work (29%) compared to those who were able to achieve persistent employment (13%). Furthermore, only CVLT total scores significantly predicted impairment status, whereas measures of executive functioning, motor speed, response inhibition, mental flexibility, and visual memory did not predict neuropsychological impairment.

This study represents one step towards understanding how neuropsychological functioning may influence whether a person is able to maintain their employment after finding a job. Future research might expand this focus and examine whether neuropsychological functioning might influence whether someone is able to persist in their current level of employment without enduring a period of short-term or long-term disability.

Medical Factors and Employment

As stated earlier, the cognitive factors which influence employment status in individuals with HIV/AIDS must be understood in the context of the myriad other factors which influence whether an individual is able to maintain employment. Medical factors are particularly salient to individuals with HIV/AIDS, although, currently, many people living with HIV/AIDS have less risk of having a serious opportunistic infection such as Kaposi’s sarcoma or Pneumocystis carinii pneumonia than in the past due to current antiretroviral therapies. However, other distressing physical symptoms still occur that can impact their ability to function at work. For example, neuropathy, fatigue, diarrhea, and the accompanying distress associated with these symptoms can restrict the type of work that is possible and may discourage affected individuals from returning to work at all. Findings regarding whether disease markers of HIV/AIDS predict employment status have been mixed. Blalock et al. (2002) found that in a cross-sectional study of 200 men and women with HIV/AIDS who attended an urban public outpatient clinic, those who were unemployed (60% of the sample) were significantly more immunocompromised and had lower mean CD4 counts than those who were employed. However, other studies have not found that CD4 cell count or viral load predicts unemployment status (Rabkin et al. 2004; Vitry-Henry et al. 1999). Overall, the findings regarding the role of medical complications and physical symptoms in predicting return to work are mixed, suggesting that there are other variables at play here, aside from health status, which may influence someone’s decision to return to work. These variables likely include psychosocial and neuropsychiatric factors.

Psychosocial Factors and Employment

As a result of increasing unemployment among individuals living with HIV/AIDS, those who are disabled often receive some form of government disability income such as Social Security Disability Insurance (SSDI) or Supplemental Security Income (SSI). Fear of losing benefits, unintended disclosure of HIV status, discrimination concerns, job skills concerns, need for workplace accommodations, and fear of having to compete with younger, healthier applicants have been identified as factors which influence the decision to return to work for HIV-infected individuals (DiClementi et al. 2004; Ferrier and Lavis 2003; Martin et al. 2003). The fear of losing government-funded benefits, in particular, has been consistently linked to employment status across various studies, such that those who are receiving disability benefits tend to work less than those without them (Rabkin et al. 2004; Lem et al. 1995; Ferrier and Lavis 2003). This finding is not surprising when one considers that many of the jobs accessible to HIV+ patients do not include health insurance, which is important to their continued health (van Gorp et al. 2007). Therefore, the decision to relinquish government benefits and pursue employment clearly has high stakes for HIV+ patients and can understandably result in anxiety and uncertainty, and patients living with HIV/AIDS must weigh the incremental benefits of returning to work against the associated risks.

Consideration of gender is also salient to the discussion of employment in the HIV/AIDS population, as women are more likely than men to lose employment in the formal sector following a seropositive diagnosis (Dray-Spira et al. 2008). In a sample of HIV-infected men and women living in France, Dray-Spira and colleagues (2008) found that disease severity and work-place discrimination were associated with increased job loss for women and individuals with less education, but not for men or individuals with higher levels of education. These findings suggest that persons in more disadvantaged socioeconomic positions, such as women, are at higher risk for suffering consequences of their disease status, particularly with regard to their ability to maintain employment.

Men with HIV/AIDS also tend to receive more Social Security Disability Insurance (SSDI) than women with HIV/AIDS, purportedly because men have earned more money from their past employment and have more extensive work histories. Conversely, women with HIV/AIDS are more likely than men with HIV/AIDS to receive Supplemental Security Income (SSI), which carries with it more financial and employment restrictions when returning to work than SSDI (Razzano et al. 2006). Therefore, women who are primary caregivers of children may be more reluctant to risk relinquishing government benefits by attempting to return to work, as this change in economic stability could have a significant impact on their ability to care for their families. Research examining the effect of gender on return to work in this population is limited, as the population of HIV-infected individuals in many frequently studied urban areas (e.g., New York City, Los Angeles) generally includes a greater percentage of men than women.

Despite the barriers that exist in returning to work, maintaining some level of employment can certainly bring many benefits, beyond simply increasing income. These benefits include a sense of accomplishment, social engagement, a structure to day to day routines, a sense of identity, and higher perceived quality of life (Blalock et al. 2002; McElhiney et al. 2000). Medical providers should consider the benefits of working for patients with HIV/AIDS and should recognize that workplace re-entry following a period of unemployment is not easily accomplished by most patients. Instead, seeking workplace accommodations for health reasons may be a better option for helping patients avoid going on disability. Overall, this research highlights important mediating factors, both positive and negative, that come into play when deciding if and when to return to work and may represent important areas in which health care professionals can intervene to increase likelihood and success of return to work.

Psychiatric Factors and Employment

Prevalence rates for lifetime major depression are relatively high in HIV+ individuals (Ciesla and Roberts 2001), and higher unemployment rates are associated with higher rates of depression and suicidal ideation (Lyketsos et al. 1996; Kelly et al. 1998). In fact, at least one study showed that even those individuals with HIV/AIDS who have a history of depression, but no current depression, tend to work less than those without such a history (Rabkin et al. 2004). Furthermore, depression has been found to interfere with the return to work, even in patients who are medically stable (Heaton et al. 1978).

The correlation between unemployment status and higher rates of depression has been well documented. However, the causal relationship between depressive symptoms and employment status is less clear. Some research has shown that treatment of depression promoted improved work performance (Mintz et al. 1992), although work restoration took longer than symptom remission. Therefore, this study suggests at least some degree of causality between remitting depression and increasing work ability. In a longitudinal study over a 2-year period, van Gorp et al. (2007) found that in their sample of 118 HIV+ individuals seeking to return to work, as described above, those who returned to some form of work reported higher quality-of-life satisfaction and enjoyment than those who did not return to work. However, baseline depression did not predict whether someone returned to work, although depression levels after finding work were significantly improved in those finding employment.

Although most research suggests that individuals who are unemployed tend to report higher levels of depression, one study found that the presence of a mental health disorder may increase the likelihood of returning to work by increasing the likelihood that a patient will access helpful support services. In a primarily Caucasian male sample (73.5% Caucasian, 87% male), DiClementi et al. (2004) retrospectively compared HIV infected individuals with various substance abuse and mental health diagnoses who had returned to work to those who had not returned to work. Although they found that injection drug use and other drug/alcohol use disorders were associated with not returning to work, they also found that those who returned to work actually had a higher number of psychiatric diagnoses (non-substance abuse related) than those who did not return to work. These authors note that those with non-substance-use mental health diagnoses were recruited for their study through mental health services, and therefore they conclude that accessing mental health treatment may facilitate returning to work.

Most research suggests that unemployment is associated with higher rates of depression in individuals with HIV/AIDS. However, the degree to which treatment of existing depression results in increased employment and return to work is less clear. There has been at least some evidence that access to mental health treatment may be an important step for people who are considering returning to work. More research is needed to better understand how mental health treatment can facilitate return to work in HIV/AIDS patients with various psychiatric diagnoses.

Summary of Work and Employment in Individuals with HIV/AIDS

Research has identified several factors which influence whether an individual will stop working in the context of HIV infection and whether he or she will successfully return to work (and maintain that position) following a period of unemployment. Specifically, unemployment has been associated with neuropsychological impairment, particularly in the areas of memory and executive functioning, even when controlling for various medical factors. New learning has also been associated with an individual’s ability to maintain new employment following a period of disability, although research is lacking in this area. It is important to consider these neuropsychological factors in the context of other salient issues related to employment in this population, such as medical, psychosocial, and psychiatric issues. Future research is needed to expand the focus from returning to work to include the persistence of work after HIV infection and examine whether psychological, neuropsychological, psychosocial, or medical factors influence whether someone will be able to persist in their current employment without enduring a period of short-term or long-term disability.

Medication Adherence

Medication advances in the treatment of HIV infection, including the introduction of highly active antiretroviral treatment (HAART), have been shown to significantly improve markers of immune function such as CD4 count and HIV viral load (Catz et al. 2000; Clerici et al. 2002), as well as bolster patients’ processing speed, motor functioning, memory, and general cognition (Brouwers et al. 1997; Martin et al. 1999; Suarez et al. 2001). These advances have been accompanied by population-level reductions in the incidence of HIV-associated dementia (HAD) (Ances and Ellis 2007). However, 40–50% of HIV-infected individuals fail to maintain the consistently high levels of adherence that are needed to successfully reconstitute or sustain desirable levels of immune functioning (Gifford et al. 2000; Hinkin et al. 2004; Paterson et al. 2000).

As summarized in Table 1, a number of factors have been shown to affect adherence to medication in HIV-infected populations, including neurocognitive impairment (Barclay et al. 2007; Hinkin et al. 2002a, b, 2004; Waldrop-Valverde et al. 2006), patient age (Hinkin et al. 2002a, b, 2004), regimen complexity (Hinkin et al. 2002a, b), health beliefs and treatment self-efficacy (Barclay et al. 2007; Catz et al. 2000; Deschamps et al. 2004; Gifford et al. 2000), coping strategies (Deschamps et al. 2004; Weaver et al. 2005), social support (Catz et al. 2000), mood and anxiety disorders (Paterson et al. 2000; Tucker et al. 2003), and substance/alcohol use (Hinkin et al. 2007; Hinkin et al. 2004; Palepu et al. 2004; Parsons et al. 2007; Tucker et al. 2003). Following a description of common methods used to measure medication adherence, we will focus our discussion upon neuropsychological correlates of adherence and other predictors that are most relevant to neuropsychological functioning.

Measurement of Medication Adherence

The most common method of measuring adherence to medication involves direct inquiry (via interview or questionnaire) into patients’ level of compliance with antiretroviral medication regimens over a given timeframe. Unfortunately, for many patients self-report of adherence has been shown to have limited accuracy. In particular, self-report of adherence has been found to be most discrepant from objective measures (generally, overestimating adherence) for individuals with cognitive impairment and for those whose adherence has been relatively poor (Levine et al. 2006a, b; Reinhard et al. 2007). Likewise, self-report has been shown to overestimate adherence by approximately 10–20% for most patients reporting perfect or near-perfect adherence (Arnsten et al. 2001; Levine et al. 2006a, b). The timeframe for which patients are instructed to provide self-reports of adherence may also play an important role. For example, self-report of adherence was relatively more consistent with objective measures for shorter periods (i.e., 4 days) than for longer timeframes (i.e., 4 weeks) (Levine et al. 2006a, b).

Among the objective measures of adherence, the most straightforward method entails counting the number of pills remaining in the pill bottle during a patient’s follow-up visit. By calculating the number of pills which have been removed and comparing it to the number of scheduled dosing events, inferences can be made about how many scheduled doses were “missed” by the patient. However, the pill-counting method has a number of limitations. First, this method assumes that pills missing from the bottle were consumed rather than misplaced or discarded. Other augmentative methods, including unannounced pill counts, conducted in-person or by telephone, may be helpful in reducing the likelihood of attempts to simulate proper adherence by counting and discarding pills in advance (Bangsberg 2006). Second, it is impossible to know the degree to which mistakes had been made in taking each dose as scheduled, an issue which may be particularly relevant for more cognitively impaired individuals.
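The pill-count arithmetic described above can be illustrated with a minimal sketch. The function name, its parameters, and the example numbers are hypothetical and are not taken from any of the cited studies; the sketch also carries the same assumption the text criticizes, namely that every pill removed from the bottle was actually taken.

```python
def pill_count_adherence(dispensed, remaining, scheduled_doses, pills_per_dose=1):
    """Estimate adherence from a pill count (illustrative sketch only).

    Assumes every pill removed from the bottle was consumed, which is
    exactly the limitation of the pill-count method noted in the text.
    Returns (adherence fraction in [0, 1], estimated missed doses).
    """
    if scheduled_doses <= 0 or pills_per_dose <= 0:
        raise ValueError("scheduled_doses and pills_per_dose must be positive")
    if not 0 <= remaining <= dispensed:
        raise ValueError("remaining must be between 0 and dispensed")

    pills_removed = dispensed - remaining
    doses_removed = pills_removed / pills_per_dose

    # Cap at 100%: removing more pills than scheduled does not mean
    # better-than-perfect adherence.
    adherence = min(doses_removed / scheduled_doses, 1.0)
    missed = max(scheduled_doses - doses_removed, 0)
    return adherence, missed


# Hypothetical example: 60 pills dispensed, 12 left at follow-up,
# one pill per dose, 56 doses scheduled since the last visit.
adherence, missed = pill_count_adherence(60, 12, 56)
print(f"{adherence:.1%} adherence, {missed:.0f} doses presumed missed")
```

Because the estimate depends only on the difference between dispensed and remaining pills, a patient who discards pills before the visit would appear fully adherent, which is why unannounced counts are suggested as a safeguard.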

Similar to pill counts, pharmacy refill records may serve as an objective proxy measure of adherence, with the assumption that the degree to which individuals refill a medication on schedule reflects their overall conscientiousness regarding adherence to that medication. Additionally, the lower salience of this form of monitoring (relative to a pill count) and the inconvenience of making unneeded trips to the pharmacy may discourage patients from attempting to simulate higher-than-actual levels of adherence. However, methods of measuring adherence using pharmacy records retain many of the other limitations of pill-counting, and the relatively coarse nature of refill measurement could also limit its sensitivity over shorter time frames.

Objective methods utilizing electronic monitoring, such as the Medication Event Monitoring System (MEMS; Aprex Corp., Union City, CA), have been developed to address some of the limitations of more traditional techniques of adherence measurement. The MEMS cap utilizes a microprocessor in the pill bottle cap to automatically record the date, time, and duration of each bottle opening. These data are typically downloaded from the MEMS cap by the investigator at the patient’s next scheduled follow-up visit. Although MEMS caps may provide detailed, moment-to-moment data regarding medication usage, it is important to note that this technology records pill bottle openings only, and remains incapable of monitoring the actual ingestion of medication. Therefore, MEMS may underestimate adherence if individuals engage in “pocket dosing”, e.g., removing multiple doses from a bottle at one time with the intent of taking them later (Hinkin et al. 2004). While investigators using MEMS caps are well-advised to instruct participants not to remove pills except for immediate use, it is possible that some individuals may nevertheless remain tempted to do so because of issues related to portability (e.g., the relative bulk of the MEMS cap bottle) and possible social embarrassment or stigma.
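To make the “pocket dosing” problem concrete, the following sketch shows one simple way an adherence rate could be derived from a list of bottle-opening timestamps. This is an illustrative scoring rule under stated assumptions, not the algorithm used by the MEMS software or by any cited study; the function name, the per-day crediting rule, and the example dates are all hypothetical.

```python
from collections import Counter
from datetime import date, datetime, timedelta


def opening_based_adherence(openings, start, days, doses_per_day):
    """Estimate adherence from bottle-opening timestamps (sketch only).

    Assumed scoring rule: each opening is credited as one dose, up to the
    prescribed number of doses on that calendar day. Ingestion itself is
    never observed, only openings.
    """
    if days <= 0 or doses_per_day <= 0:
        raise ValueError("days and doses_per_day must be positive")

    openings_per_day = Counter(ts.date() for ts in openings)
    credited = 0
    for offset in range(days):
        day = start + timedelta(days=offset)
        credited += min(openings_per_day[day], doses_per_day)
    return credited / (days * doses_per_day)


# Hypothetical twice-daily regimen observed over two days.
# Day 1: two openings. Day 2: a single opening at which the patient
# removes both doses ("pocket dosing") and takes the second one later.
openings = [
    datetime(2024, 1, 1, 8, 0),
    datetime(2024, 1, 1, 20, 0),
    datetime(2024, 1, 2, 8, 0),
]
rate = opening_based_adherence(openings, date(2024, 1, 1), days=2, doses_per_day=2)
print(f"Opening-based adherence: {rate:.0%}")
```

In this hypothetical case the patient may have taken all four doses, yet the opening-based estimate credits only three, which illustrates how electronic monitoring can underestimate adherence when multiple doses are removed at once.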

Studies have shown that estimates of adherence based on MEMS, which may underestimate adherence at times, tend to be lower than those based on self-report or pill count, which may overestimate adherence (Levine et al. 2006a, b; Liu et al. 2001). Overall, MEMS has been shown to be more related to virologic suppression than self-report (Arnsten et al. 2001). However, each method of measurement has limitations, and the combination of multiple objective and self-report methods of adherence measurement (when possible) should yield improved measurement overall relative to a single-method approach (Liu et al. 2001).

Neurocognitive Correlates of Medication Adherence

As mentioned previously, highly active antiretroviral treatment (HAART) has been shown to bolster or help preserve cognitive functioning, particularly in the domains of processing speed, motor functioning, and memory (Brouwers et al. 1997; Martin et al. 1999; Suarez et al. 2001). To the degree that these positive effects on cognition are dependent upon patients’ antiretroviral medication adherence, associations between adherence and cognition should be expected, at least over longer time periods. Consistent with these expectations, the domains of cognitive impairment which have been most consistently associated with adherence (executive functions, psychomotor speed, attention, and memory) are also among the cognitive domains most frequently affected by HIV-related neurodegeneration (Hinkin et al. 2002a, b, 2004; Waldrop-Valverde et al. 2006).

However, evidence suggests that the adherence-cognition relationship is bidirectional, with additional decrements in adherence resulting from certain types of cognitive impairment. For example, Albert et al. (1999) found that deficits in memory, executive functioning, and psychomotor speed were associated with poorer performance on a laboratory-based medication management task and poorer self-reported medication adherence over a 3-day period. Additionally, Hinkin et al. (2004) found that individuals classified as neurocognitively impaired were 2.5 times more likely to demonstrate poor medication adherence. In terms of individual cognitive sub-domains, deficits in executive functioning, attention/working memory, and verbal memory were the strongest predictors of poor adherence.

Aging and Medication Adherence

As the mortality rate of HIV/AIDS has declined, the HIV-infected population has begun to age, with approximately 24% of individuals living with HIV/AIDS now aged 50 years or older (Centers for Disease Control and Prevention 2007). Patient age has also been associated with adherence, with individuals over age 50 demonstrating significantly better adherence than those under 50 (Hinkin et al. 2002a, b, 2004). For example, Hinkin et al. (2004) found that older patients were three times more likely to achieve “good” (≥95%) adherence than their younger counterparts.

Nevertheless, accumulating evidence suggests that older adults are also at a substantially increased risk for HIV-associated cognitive decline and dementia relative to their younger counterparts (Baldeweg et al. 1998; Becker et al. 2004; Valcour et al. 2004). Although it could be hypothesized that older adults have sustained greater HIV-related neuropathology by virtue of longer overall time since seroconversion, duration of HIV infection has not shown a clear relationship to risk for cognitive decline (Valcour et al. 2004).

Examining these issues more closely, Ettenhofer et al. (2009) found an age interaction in the cognition-adherence relationship, with older HIV-positive adults demonstrating a particular vulnerability to neurocognitive dysfunction under conditions of sub-optimal HAART adherence. Likewise, older adults with neurocognitive impairment (particularly executive and motor deficits) were at increased risk of sub-optimal adherence to medication (see Fig. 2). In contrast, younger HIV-positive individuals tended to have relatively poor medication adherence regardless of level of cognitive function. The causes of these apparent age-related vulnerabilities remain unclear, but may be related to an increase in blood-brain barrier permeability with advancing age (Bartels et al. 2008; Shah and Mooradian 1997) and/or harmful functional effects of the age-related declines in cognition that would be expected to occur even in the absence of identifiable brain pathology (Buckner 2004; Salthouse et al. 2003).

Cognition and Medication Adherence among Younger and Older HIV+ Adults. Note: Mem. = Memory; Attent. = Attention; Exec. = Executive; Qual. = Qualitative self-report; 30-Day = 30-Day Self-Report; 1-Day = 1-Day Self-Report. Standardized values shown. Adapted from Ettenhofer, M. L., Hinkin, C. H., Castellon, S. A., Durvasula, R., Ullman, J., Lam, M., Myers, H., Wright, M. J., & Foley, J. (in press). Aging, neurocognition, and medication adherence in HIV infection. American Journal of Geriatric Psychiatry

Substance Use and Medication Adherence

Substance use is relatively common among individuals with HIV/AIDS, with prevalence estimated from one nationally-representative sample at approximately 40% for use of illicit drugs other than marijuana, 12% for drug dependence, and 18% for “heavy” or “frequent heavy” alcohol consumption (Bing et al. 2001). Substance use among HIV-positive individuals has been consistently associated with poorer medication adherence (Halkitis et al. 2008; Hinkin et al. 2004, 2007; Palepu et al. 2004; Parsons et al. 2007; Tucker et al. 2003). For example, in a longitudinal study utilizing MEMS caps to track adherence and urinalysis to track substance use over a period of 6 months, Hinkin et al. (2007) found that MEMS adherence was significantly worse among individuals who tested positive for drugs (M = 63%) compared to those who did not (M = 79%). Additionally, results of logistic regression demonstrated that drug-using HIV+ individuals had a more than four-fold greater risk of poor adherence than non-drug-users. Regarding specific classes of substances, Palepu et al. (2004) found a greater likelihood of poor self-reported medication adherence over a 30-day period for those reporting use of cocaine (OR = 2.2; 95% CI: 1.2 to 3.8), amphetamines (OR = 2.3; 95% CI: 1.2 to 4.2), sedatives (OR = 1.6; 95% CI: 1.0 to 2.4), and cannabis (OR = 1.7; 95% CI: 1.2 to 2.3). Alcohol use was also associated with increased likelihood of poor adherence for moderate drinkers (OR = 1.6; 95% CI: 1.3 to 2.0) and heavy drinkers (OR = 1.7; 95% CI: 1.3 to 2.3), but especially for frequent heavy drinkers (OR = 2.7; 95% CI: 1.7 to 4.5).
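
For readers less familiar with the odds ratios (OR) and confidence intervals reported above, the sketch below shows how an unadjusted OR and an approximate 95% CI can be computed from a 2 × 2 table. The counts are hypothetical and are not drawn from any cited study; the published estimates may come from adjusted regression models, which this simple calculation does not reproduce. The function name `odds_ratio_ci` is an assumption for illustration.

```python
import math


def odds_ratio_ci(a, b, c, d, z=1.96):
    """Unadjusted odds ratio with a Wald-type confidence interval.

    a: substance users with poor adherence
    b: substance users with good adherence
    c: non-users with poor adherence
    d: non-users with good adherence
    z: normal quantile (1.96 gives an approximate 95% interval)
    """
    odds_ratio = (a * d) / (b * c)
    # Standard error of log(OR) for a 2 x 2 table.
    se_log_or = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    lower = math.exp(math.log(odds_ratio) - z * se_log_or)
    upper = math.exp(math.log(odds_ratio) + z * se_log_or)
    return odds_ratio, lower, upper


# Hypothetical counts, for illustration only.
or_est, ci_low, ci_high = odds_ratio_ci(20, 10, 10, 20)
print(f"OR = {or_est:.1f}; 95% CI: {ci_low:.1f} to {ci_high:.1f}")
```

An interval whose lower bound exceeds 1.0 indicates that the increased likelihood of poor adherence is unlikely to be due to chance alone at the conventional level.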

A number of potential mechanisms exist whereby substance use could interfere with medication adherence. Drug effects could alter elements of daily routine (e.g., meals or sleep) that are often an important time-related component of an individual’s medication regimen, thus disrupting opportunities or cues for antiretroviral dosing. Additional difficulties with proper medication adherence could arise from cognitive deficits related to long-term substance use or the acute effects of intoxication. Alternatively, it is possible that one or more stable traits could predispose some individuals to both substance use and poor medication adherence.

Thus far, evidence suggests that the link between substance use and medication adherence in HIV/AIDS may be predominantly related to “state” rather than “trait” factors. Among individuals who tested positive for drugs at some point during the 6-month study, Hinkin et al. (2007) found that MEMS adherence was substantially higher during the 3 days prior to a negative urinalysis (M = 71.7%) than during equivalent periods prior to a positive urinalysis (M = 51.3%). This fluctuation in adherence may be related to transient disruptions of cognition. As shown by Levine et al. (2006a, b), HIV-positive individuals who tested positive for recent stimulant use demonstrated deficits in sustained visual attention, despite levels of overall cognitive functioning similar to those of non-drug-using controls.

Regimen Complexity and Medication Adherence

Although efforts have been made in recent years to simplify drug treatments for HIV/AIDS, many individuals’ antiretroviral medication regimens remain complex. For example, patients may be asked to adhere to dietary restrictions, or take multiple medications with variable dosing schedules. As might be expected, research has demonstrated that individuals with more complex antiretroviral regimens (i.e., those requiring more dose events per day) tend to demonstrate lower levels of adherence overall (Hinkin et al. 2002a, b). However, when patients in this study were divided into cognitively impaired and cognitively unimpaired groups based upon overall cognitive performance, only the cognitively impaired group demonstrated decrements in medication adherence with greater regimen complexity. In particular, analyses investigating this interaction by cognitive sub-domain demonstrated that individuals with impairments in executive functioning and attention/working memory appear to have the most difficulty managing complex medication regimens.

Summary of Medication Adherence

Research in HIV-positive populations has demonstrated powerful links between neurocognitive impairment and poor adherence to antiretroviral medication regimens that appear to be bidirectional (Ettenhofer et al. 2009; Hinkin et al. 2002a, b, 2004; Waldrop-Valverde et al. 2006). Additionally, a number of factors such as advanced patient age (Ettenhofer et al. 2009; Hinkin et al. 2004), substance problems (Hinkin et al. 2007), and complex medication regimens (Hinkin et al. 2002a, b) have been shown to magnify these risks. Overall, impairments in executive functioning, attention/working memory, verbal memory, and motor functioning appear to be most relevant to medication adherence in HIV-positive populations. In terms of methods for assessing medication adherence, objective methods such as electronic monitoring offer a number of advantages, but a combination of multiple objective and self-report methods of adherence measurement is preferable to a single-method approach (Liu et al. 2001). Additional research investigating links between cognition and medication adherence will be crucial to the continued development of methods to enhance medication adherence and minimize HIV-related neurodegeneration.

Financial Management

Although less extensively studied than medication management and adherence, the “real world” tasks of organizing household finances, managing daily monetary transactions, and shopping have also been examined in the HIV/AIDS population. As expected, declines in these areas of functional ability are common in the context of neuropsychological impairment secondary to HIV/AIDS. Money management and shopping have historically been assessed via self-report. For example, the Lawton and Brody (1969) IADL scale, originally targeted for use in the aging population, has been widely validated for use in the HIV/AIDS population (Heaton et al. 2004; Sadek et al. 2007). This scale specifically assesses Financial Management and Shopping, as well as a wide range of other functional domains (e.g., Home Repair, Medication Management, Laundry, Transportation, Comprehension of Reading/TV materials, Housekeeping, Cooking, Bathing, Dressing, and Telephone Use). Individuals are asked to rate their current level of independence and their highest previous level of independence. The discrepancy between these ratings can then be used to determine whether there has been a decline in independent daily living skills, and an estimate of overall dependency can be obtained.
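
The discrepancy logic described above can be sketched as follows. This is an illustrative example only: the rating scale (higher values meaning greater independence), the domain ratings, and the function name `iadl_declines` are assumptions, and no published cut-off for classifying dependency is implied.

```python
def iadl_declines(current, best):
    """Return the domains in which current independence is below the
    highest previous level, given two domain -> rating mappings.

    Assumption: higher ratings indicate greater independence.
    """
    return [
        domain
        for domain in best
        if domain in current and current[domain] < best[domain]
    ]


# Hypothetical self-ratings for one respondent (illustration only).
best_level = {"Financial Management": 2, "Shopping": 2, "Cooking": 2}
current_level = {"Financial Management": 1, "Shopping": 2, "Cooking": 2}

declined = iadl_declines(current_level, best_level)
print(declined, len(declined))
```

The count of declined domains gives one simple summary of overall change; how many declined domains constitute functional dependence is a scoring decision that would need to follow the instrument's published criteria.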

More recently, laboratory procedures have been developed and/or adapted to directly assess financial management skills in the HIV/AIDS population. For example, Heaton et al. (2004) assessed shopping and money management in a sample of 267 HIV-infected adults using the Financial Skills (e.g. calculating currency, balancing a checkbook) and Shopping (e.g. selecting items from a previously presented grocery list) measures from the Direct Assessment of Functional Status instrument (DAFS, Lowenstein and Bates 1992). Although the DAFS was originally developed for use with elderly, demented individuals, these subtests were found to be valid instruments for assessing financial management and shopping in the HIV population and were highly correlated with overall neuropsychological impairment.

Heaton and colleagues (2004) also introduced a novel measure for assessing household money management entitled Advanced Finances, which required individuals to pay fictitious bills and manage a fictitious checkbook. The participants were provided with blank checks, a checkbook register, a check to deposit, deposit slips, three bills to pay, and a calculator. They were required to pay each bill, determine their resulting checkbook balance, and pay as much of their credit card bill as possible while leaving a minimum of $100 in their checking account. Participants were then awarded points for successfully completing each of these steps (e.g., correctly paying a bill, filling out a deposit slip, maintaining the minimum balance). This task took approximately 10 min and a total of 13 points were possible. When collapsed with the Financial Skills task from the DAFS, this composite task yielded a wide range of performance amongst their sample of HIV-infected adults and demonstrated good internal reliability (Cronbach’s alpha = .82) (Heaton et al. 2004). This measure also demonstrated good concurrent validity, such that higher rates of failure on this measure were associated with greater neuropsychological impairment, particularly in the domain of executive functioning.
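
As a brief illustration of the internal reliability statistic reported above, the sketch below computes Cronbach's alpha from item-level scores using the standard formula, alpha = k/(k − 1) × (1 − sum of item variances / variance of total scores). The item scores are hypothetical and are not data from Heaton et al. (2004); the function name `cronbach_alpha` is an assumption.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for a list of items, where each item is a list
    of scores with one entry per participant (same participant order)."""
    k = len(items)
    # Total score per participant across all items.
    totals = [sum(scores) for scores in zip(*items)]
    sum_item_variances = sum(variance(item) for item in items)
    return (k / (k - 1)) * (1 - sum_item_variances / variance(totals))


# Hypothetical scores on three scored steps for five participants.
item_scores = [
    [1, 2, 2, 3, 4],
    [1, 1, 2, 3, 3],
    [0, 2, 2, 2, 4],
]
alpha = cronbach_alpha(item_scores)
print(round(alpha, 2))
```

Values closer to 1 indicate that the scored steps tend to rise and fall together across participants, which is the sense in which the composite finance task showed good internal reliability.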

Consistent with studies of other functional abilities (working, medication adherence, etc.), declines in neuropsychological performance are related to impairments in financial management and shopping. In the study referenced above, Heaton and colleagues (2004) evaluated the relationship between HIV-associated neuropsychological impairment and a variety of functional or “real world” domains, including money management and shopping, using some of the measures described above (e.g., DAFS, Advanced Finances, and Lawton and Brody IADL scale). Compared to neuropsychologically normal participants, those with neuropsychological impairment performed significantly worse on all laboratory measures of everyday functioning. In fact, out of a wide range of functional domains (e.g., work assessment, finances, shopping, medication management, and cooking), rates of functional impairment were highest in the areas of Work, Finances, and Medication Management. These group differences could not be accounted for by education or gender. The specific neuropsychological domain which predicted failures on the Financial Management tasks was Abstraction/Executive Functioning, and only Learning uniquely predicted failures on a Shopping task.

To summarize, a number of methods to assess financial management and shopping have been developed and/or adapted for use in the HIV/AIDS population. Declines in these particular aspects of “real world” functioning have been shown to occur in the context of neuropsychological impairment associated with HIV infection. Specifically, executive functions such as planning, organization, and abstract thinking are particularly important for successful management of household finances, and memory skills appear to play an important role in shopping.

Driving Ability

Across a number of research paradigms, including use of self-reported driving histories, driving simulators, and on-the-road driving evaluations (Fitten et al. 1995; Odenheimer et al. 1994; Rebok et al. 1995; Rizzo et al. 1997), neurocognitive deficits have been associated with declines in driving ability. Evidence suggests that as many as 29% of HIV-infected subjects report a decline in driving ability (Marcotte et al. 2000), and there appears to be a higher likelihood of both poorer driving simulator performance and real world driving ability (i.e., being cited for a moving violation or being involved in a motor vehicle accident during the previous year) among HIV+ individuals demonstrating neuropsychological impairment (Marcotte et al. 2000). Importantly, both neuropsychological ability and poor driving simulator performance have been predictive of real world driving difficulties (Marcotte et al. 2001), suggesting that laboratory-based tasks may be useful in detecting real world functional capacity.

The earliest research investigating the effects of neuropsychological impairment upon driving capacity in HIV-infected individuals (Marcotte et al. 1999) revealed that those classified as mildly cognitively impaired failed driving simulations at a rate five to six times greater than cognitively-intact participants, and neuropsychological performances in attention and working memory, fine motor abilities, visuoconstructive abilities, and nonverbal memory were predictive of various components of driving ability. Marcotte and colleagues (2004) later utilized a comprehensive neuropsychological test battery, two driving simulations, an on-the-road driving evaluation, and a task of visual processing and attention (the Useful Field of View Test) to assess both navigational abilities and evasive driving. Results indicated that neuropsychologically-impaired HIV+ participants had increased simulator accidents and reduced simulator driving efficiency, failed on-road driving tests at a higher rate, and demonstrated decreased visual processing and divided attention when compared to cognitively-intact HIV+ and control participants. Moreover, global neuropsychological functioning, simulator accidents, and simulator driving efficiency accounted for 47.6% of the variance in on-road driving performance, with executive functioning emerging as the only significant neurocognitive predictor of on-the-road driving failure rates.

A more recent study conducted by Marcotte and colleagues (2006) utilized Useful Field of View performances, neuropsychological status, and detailed self-reported driving history (see Fig. 3). Among the HIV+ sample, 45% evidenced at least mild cognitive impairment compared to only 5% of the control participants. HIV+ participants also demonstrated a far higher rate of abnormal divided attention when compared to control participants (36% vs. 17%, respectively). Not only did poor attention predict self-reported accidents, but 93% of the HIV+ participants who acknowledged prior automobile accidents were correctly classified when poor attention and general neuropsychological impairment were considered simultaneously.

Accidents per Million Miles in Past Year for HIV+ Groups Stratified by Neuropsychological Impairment and Risk Level*. Note: Adapted from Marcotte, T.D., Lazzaretto, D., Scott, J.C., Roberts, E., Woods, S.P., Letendre, S., & the HNRC Group. (2006). Visual attention deficits are associated with driving accidents in cognitively-impaired HIV-infected individuals. Journal of Clinical and Experimental Neuropsychology, 28, 13–28. *Risk Level: Classified based upon Useful Field of View (UFOV) task performance. NP: Neuropsychologically

Taken together, these studies suggest that neurocognitive compromise among HIV-infected adults is strongly associated with impaired driving capacity across a variety of driving paradigms. This body of work extends the well-substantiated findings of reduced cognitive capacity among HIV-infected individuals (e.g., divided attention, visual attention and visual processing, and executive function), and provides support for relationships between these neurocognitive deficits and poor simulator performance, self-reported driving problems, and importantly, real world driving decrements. These results appear to be relevant even for HIV-infected individuals presenting with only mild levels of neurocognitive compromise, suggesting a relatively low cognitive impairment threshold for poor functional ability.

Driving capacity is an especially important concern for older HIV-positive adults due to the additional safety concerns posed by the additive effects of aging and the HIV disease process. Recent studies have begun to address driving performance among this unique subgroup. Lee et al. (2003a) found that poor simulated driving performance explained over two-thirds of the variability in actual on-road driving in a group of elderly adults (aged 60 and older). Similar findings have been reported in other studies, including those in which specific cognitive abilities were assessed via driving simulation tasks, including working memory (Lee et al. 2003b), visual attention (Lee et al. 2003c), and divided attention (Brouwer et al. 1991).

A recent study conducted by our laboratory (Gooding et al. 2008) revealed that the driving performances of older HIV-infected adults (age > 50) on a route-planning virtual city task were significantly less efficient and slower than for younger HIV-infected adults (age < 40). To explore the basis for this finding, driving simulator performance was regressed on neuropsychological test performance. For the older adults, neuropsychological test scores accounted for 44% of the variance on task completion time and 50% of the variance on efficiency of route. However, for younger adults, neuropsychological test scores accounted for only 1% of the variance on both measures of simulator performance. Both visuospatial abilities and attention independently predicted driving simulator performance on these driving efficiency and speed variables for the older group only. The results of this study suggested that older HIV-infected adults may be at increased risk for functional compromise secondary to HIV-associated neurocognitive decline, and attention and visuospatial abilities constitute the neurocognitive domains maximally predictive of driving simulator performance. Driving is a highly demanding and potentially hazardous daily activity and these findings suggest that older HIV-infected adults demonstrating reduced visuospatial and attentional abilities may be at particular risk for impaired driving ability.

Of the methodologies employed to date to investigate driving capacity in HIV-infected individuals at risk for impaired driving performance, driving simulator tasks and on-the-road driving evaluations appear to align most closely with real world driving ability. Although a specific neuropsychological profile indicative of poor driving has not yet been identified, the aforementioned studies suggest strong relationships between reduced driving performance and neurocognitive decrements across a variety of domains among HIV-infected subjects. Deficits in visual attention, visuospatial ability, memory, fine motor control, and executive function likely contribute most notably to reduced driving performance among HIV-infected individuals, with visuospatial and attentional abilities playing a particularly key role among older HIV-infected adults.

Impact of Neuropsychiatric Factors on Everyday Functioning

The preceding sections have elucidated the contributions of neurocognitive compromise to everyday functional decrements. A related area warranting equal attention is the impact of HIV-associated psychiatric symptomatology upon functional capability, given the high frequency of self-reported psychological difficulties among infected patients. Indeed, previous research has demonstrated that neuropsychiatric features such as apathy, irritability, depression, anxiety, and fatigue are highly prevalent among individuals infected with HIV (Basu et al. 2005; Castellon et al. 1998; Chandra et al. 2005; Rabkin and Chesney 1998; Hinkin et al. 1992; Hinkin et al. 1995; Johnson et al. 1999; Rabkin et al. 1997; Stober et al. 1997), and in fact a large-scale HIV investigation (n = 2864) showed that nearly half of the sample suffered from a clinically significant psychiatric disorder (Bing et al. 2001).

Apathy and Irritability

Apathy and irritability appear to be more specific markers of HIV-central nervous system involvement (Castellon et al. 2000) than clinical syndromes such as anxiety or depression, and apathy in particular has been demonstrated to be qualitatively distinct from depression (Harris et al. 1994; Levy et al. 1998; Marin et al. 1994; Pflanz et al. 1991; Starkstein et al. 1992; Castellon et al. 1998; Morriss et al. 1992). Among a variety of illnesses, including HIV-infection, the relationship between apathy and neurocognitive function has been well delineated (Clarke et al. 2008; Levy et al. 1998; Starkstein et al. 1993; Van Reekum et al. 2005) and typically reflects a primarily frontal-subcortical pathogenesis (Castellon et al. 2001; Cole et al. 2007).

In addition to cognitive reductions among apathetic patients with HIV, functional compromise associated with neuropsychiatric distress has been reported. In particular, Starkstein et al. (1993) found that HIV-infected participants identified as apathetic exhibited lower independence in activities of daily living when compared to non-apathetic control participants. Other related studies have explored the relationship between apathy and other neuropsychiatric features affecting daily living skills, and results have shown associations of apathy with aberrant motor behavior (Clarke et al. 2008) as well as disinhibition (Levy et al. 1998); aberrant motor behavior in particular was associated with functional compromise in the apathetic HIV-infected group only. Finally, Castellon and colleagues (personal communication, November 18, 2008) found apathy to be more strongly associated with poor medication adherence than depression among HIV-infected patients.

Depression and Anxiety

Clinical depression appears to be more common among HIV-infected individuals than in the general population (Atkinson et al. 198v; Basu et al. 2005; Rabkin 1997; Satz et al. 1997), and symptoms of anxiety are similarly widely reported (Chandra et al. 2005; Basu et al. 2005). However, in contrast to symptoms of apathy and irritability, which are likely attributable to the primary central nervous system effects of HIV on frontal-subcortical circuits, depression and anxiety constitute a secondary disease process unrelated to neuropsychological abnormalities (Depression: Castellon et al. 1998; Grant et al. 1993; Hinkin et al. 1992; Rabkin et al. 2000; Anxiety: Mapou et al. 1993; Perdices et al. 1992). It is important to recognize that factors including social stigmatization and marginalization, increased medical, legal, and financial stressors, bereavement, and compromised social support may all contribute to elevated rates of mood disturbance (e.g., Castellon et al. 2000; Castellon et al. 2001; Grassi et al. 1999; Perdices et al. 1992), although a bidirectional relationship may exist whereby symptoms of anxiety and depression precipitate poor psychosocial, medical (including medication adherence), and financial adjustment.

Previous work has documented the deleterious impact of depression upon medication adherence among normally aging individuals (Carney et al. 1995) and diverse patient groups, including patients with coronary artery disease, heart transplant recipients, and patients with sleep disturbance (Carney et al. 1995; Shapiro et al. 1995; Pugh 1983; Edinger et al. 1994; Sensky et al. 1996). Among the HIV-infected population in particular, depressive symptoms have been related to worsening quality of life (Friedland et al. 1996), poor medication adherence (Singh et al. 1996), and engagement in high risk behaviors (Folkman et al. 1992), while reduced depressive symptomatology appears to be associated with occupational success (i.e., return to work) (van Gorp et al. 2007). A study by Paterson and colleagues (2000) reported that among HIV-seropositive subjects, active psychiatric disturbance, especially depression, was a risk factor for suboptimal adherence. This body of literature suggests that while a variety of psychosocial and medical stressors may trigger the emergence of psychiatric symptomatology, mood disturbance may also reduce competency in these functional areas, leading to even greater adaptive decline.

Fatigue

Fatigue reflects an additional neurophysiologic symptom of concern for HIV-infected individuals. Reported prevalence is highly variable (2–54%; Ferrando et al. 1998), and the often unexplained emergence and nonspecific nature of fatigue create difficulty in investigating potential etiological mechanisms. Research has suggested that fatigue becomes increasingly common, chronic, and functionally significant as the disease advances, and serves as a predictor of physical limitations and disability status, independent of the effects of depressive symptomatology (Ferrando et al. 1998).

Summary

Taken together, the current research on the neuropsychiatric contributions to functional incapacity suggests deleterious effects of depression, anxiety, apathy, irritability, and fatigue upon independent living skills. A variety of diverse domains of functional ability appear to be targeted, and include medication adherence, physical mobility, behavioral risk taking and aberrant motor behavior, and general quality of life. Below we consider the impact of interpersonal moderating factors in the exacerbation or attenuation of functional deficits in HIV-infected individuals.

Interpersonal Contributions to Functional Capacity

In addition to cognitive and neuropsychiatric contributions to functional decline, interpersonal factors also noticeably affect psychosocial and functional capability, with high levels of relational discord or social isolation contributing to greater impairment in psychosocial adaptation and daily living skills, and adequate social support promoting optimal functional capability. The effects of both caregiver burden and social support are therefore of particular relevance to everyday functioning.

Caregiver Burden

In recent years, the face of HIV-associated neurocognitive impairment has begun to change in response to the introduction of HAART, which has led to increased life expectancy (Brew 2004) alongside increased prevalence of HIV-related neurological deficits (Bottiggi et al. 2007). The HIV epidemic has now evolved into a disease increasingly affecting older adults, with the number of AIDS cases in individuals over the age of 50 more than tripling over the last several years (Centers for Disease Control and Prevention 2007). As a result of its newly recognized status as a chronic disease requiring heightened vigilance to complex treatment regimens related to infection and normal aging processes, HIV poses new challenges as the burden of management shifts to the caregivers of infected individuals. Accordingly, recent work has documented substantial levels of caregiver burden and psychosocial distress (Prachakul and Grant 2003).

More recent investigation considering the quality of the relationship between the patient and caregiver (Miller et al. 2007) has suggested that between 17 and 66 percent of HIV patient-caregiver dyads experience some level of relational discord, and the level of difficulty was shown to be strongly associated with depression, poor physical function, and poor medication adherence for HIV-infected patients, and with level of caregiver burden and depression for caregivers. While the impact of relational discord on the HIV-infected patient is undeniably apparent with regard to physical functioning, daily living skills, and psychiatric symptomatology, greater distress in the caregiver may also lead to indirectly deleterious consequences for the patient, such as greater social isolation and increased disease burden, thus precipitating even greater functional compromise for the infected patient.

Investigation of caregiver burden for HIV-infected children represents an additional line of work concerning functional and psychosocial adaptation. One study investigating stress and coping among inner-city families of ethnically diverse backgrounds found that parents of HIV-infected children reported greater isolation and fewer financial and support resources when compared with uninfected caregivers (Mellins and Ehrhardt 1994). Additionally, uninfected siblings described greater anger and burden from assuming responsibility in caregiving tasks, and all children (including the HIV-infected patient) were more vulnerable to separation and loss. High levels of caregiver burden, therefore, also appear to be strongly associated with psychosocial consequences for the individual infected with HIV. The experience of burden among caretakers is also likely to lead to withdrawal from the infected patient, thus precipitating inadequate social support that in itself represents an interpersonal factor of relevance to HIV-related neuropsychiatric and functional compromise.

Social Support

Grassi and colleagues (1999) documented an inverse relationship between social support and neuropsychiatric symptoms (including irritability and dysphoria) in an HIV-infected sample, and researchers concluded that social support constitutes an important protective factor against the development of psychiatric symptoms that may otherwise accompany the disease process. Several more recent studies have also supported relationships between higher levels of social support and more direct functional abilities, including psychological adjustment, coping ability, and treatment adherence (Burgoyne 2005; Knowlton et al. 2004; Mizuno et al. 2003). These studies suggest that adequate levels of social support may facilitate both psychological adjustment and management of daily living skills, although this area of study has not yet received extensive consideration. Further work is necessary to examine the mechanisms by which social support may bolster real world capacities, particularly in the face of cognitive deficits that would otherwise impede adjustment.

Conclusion

As HIV infection has evolved from being viewed as a terminal illness to being viewed as a chronic, but not necessarily fatal, illness, so too has there been a concomitant evolution in the “real world” issues that confront the HIV-infected population. Issues such as return to work, the importance of strict adherence to one’s medication regimen, and the personal and public safety considerations regarding driving ability are increasingly recognized as key issues for the HIV-infected patient and for clinicians and researchers working in this field. The preceding review has attempted to illustrate the complex, multi-factorial nature of these real world behaviors. We have primarily focused on the deleterious impact of neurocognitive dysfunction, particularly compromised memory and dysexecutive disorder, on patients’ ability to discharge these demanding daily activities. It needs to be said that cognitive integrity is but one contributor to these behaviors. Physical limitations, psychiatric disorder, and substance abuse can also adversely affect performance, as can (especially in the context of return to work) traditional societal barriers that may impinge upon the HIV-infected individual. Further, it is not just the ability to land a job, or to drive safely “most of the time”, or generally take one’s medications that is important, but it is also the ability to persist and succeed over time on the job, and to always (and not just usually) drive safely and rigorously adhere to medication regimens. It was the intent of this manuscript not only to summarize what we know about factors predicting real world performance but also to point out what we don’t know and to highlight future directions that this area of research must now address. While challenging, these are questions that at one time in the HIV pandemic seemed difficult to imagine.

References

Albert, S. M., Marder, K., Dooneief, G., Bell, K., Sano, M., Todak, G., et al. (1995). Neuropsychological impairment in early HIV infection: a risk factor for work disability. Archives of Neurology, 52, 525–530.

Albert, S. M., Weber, C. M., Todak, G., Polanco, C., Clouse, R., McElhiney, M., et al. (1999). An observed performance test of medication management ability in HIV: relation to neuropsychological status and medication adherence outcomes. AIDS and Behavior, 3(2), 121–128.

Ances, B. M., & Ellis, R. J. (2007). Dementia and neurocognitive disorders due to HIV-1 infection. Seminars in Neurology, 27(1), 86–92.

Arnsten, J. H., Demas, P. A., Farzadegan, H., Grant, R. W., Gourevitch, M. N., Chang, C. J., et al. (2001). Antiretroviral therapy adherence and viral suppression in HIV-infected drug users: comparison of self-report and electronic monitoring. Clinical Infectious Diseases, 33(8), 1417–1423.

Atkinson, J. H., Grant, I., Kennedy, C. J., Richman, D. D., Spector, S. A., & McCutchan, J. A. (1988). Prevalence of psychiatric disorders among men infected with human immunodeficiency virus: a controlled study. Archives of General Psychiatry, 45(9), 859–864.

Baldeweg, T., Catalan, J., & Gazzard, B. G. (1998). Risk of HIV dementia and opportunistic brain disease in AIDS and zidovudine therapy. Journal of Neurology, Neurosurgery and Psychiatry, 65(1), 34–41.

Bangsberg, D. R. (2006). Monitoring adherence to HIV antiretroviral therapy in routine clinical practice: The past, the present, and the future. AIDS and Behavior, 10(3), 249–251.

Barclay, T. R., Hinkin, C. H., Castellon, S. A., Mason, K. I., Reinhard, M. J., Marion, S. D., et al. (2007). Age-associated predictors of medication adherence in HIV-positive adults: health beliefs, self-efficacy, and neurocognitive status. Health Psychology, 26(1), 40–49.

Bartels, A. L., Kortekaas, R., Bart, J., Willemsen, A. T., de Klerk, O. L., de Vries, J. J., et al. (2008). Blood-brain barrier P-glycoprotein function decreases in specific brain regions with aging: A possible role in progressive neurodegeneration. Neurobiology of Aging.

Basu, S., Chwastiak, L. A., & Bruce, R. D. (2005). Clinical management of depression and anxiety in HIV-infected adults. AIDS, 19, 2057–2067.

Becker, J. T., Lopez, O. L., Dew, M. A., & Aizenstein, H. J. (2004). Prevalence of cognitive disorders differs as a function of age in HIV virus infection. AIDS, 18(Suppl 1), S11–18.

Bing, E. G., Burnam, M. A., Longshore, D., Fleishman, J. A., Sherbourne, C. D., London, A. S., et al. (2001). Psychiatric disorders and drug use among human immunodeficiency virus-infected adults in the United States. Archives of General Psychiatry, 58(8), 721–728.

Blalock, A., McDaniel, S., & Farber, E. (2002). Effect of employment on quality of life and psychological functioning in patients with HIV/AIDS. Psychosomatics, 43, 400–404.

Bottiggi, K. A., Chang, J. J., Schmitt, F. A., Avison, M. J., Mootoor, Y., Nath, A., et al. (2007). The HIV dementia scale: predictive power in mild dementia and HAART. Journal of the Neurological Sciences, 260, 11–15.

Brew, B. J. (2004). Evidence for a change in AIDS dementia complex in the era of highly active antiretroviral therapy and the possibility of new forms of AIDS dementia complex. AIDS, 18, S75–S78.

Brooks, R. A., Ortiz, D. J., Veniegas, R., & Martin, D. (1999). Employment issues survey: findings from a survey of employment issues affecting persons with HIV/AIDS living in Los Angeles County—final report. Office of the Los Angeles City AIDS Coordinator.

Brouwer, W., Waterink, W., Van Wolffelaar, P., & Rothengatter, T. (1991). Divided attention in experienced young and older drivers: lane tracking and visual analysis in a dynamic driving simulator. Human Factors, 33(5), 573–582.

Brouwers, P., Hendricks, M., Lietzau, J. A., Pluda, J. M., Mitsuya, H., Broder, S., et al. (1997). Effect of combination therapy with zidovudine and didanosine on neuropsychological functioning in patients with symptomatic HIV disease: a comparison of simultaneous and alternating regimens. AIDS, 11(1), 59–66.

Buckner, R. L. (2004). Memory and executive function in aging and AD: multiple factors that cause decline and reserve factors that compensate. Neuron, 44(1), 195–208.

Burgoyne, R. W. (2005). Exploring direction of causation between social support and clinical outcome for HIV-positive adults in the context of highly active antiretroviral therapy. AIDS Care, 17, 111–124.

Carney, R. M., Freedland, K. E., Eisen, S. A., Rich, M. W., & Jaffe, A. S. (1995). Major depression and medication adherence in elderly patients with coronary artery disease. Health Psychology, 14(1), 88–90.

Castellon, S. A., Hinkin, C. H., & Myers, H. (2001). Neuropsychiatric alterations and neurocognitive performance in HIV/AIDS: a response to C. Bungener and R. Jouvent. Journal of the International Neuropsychological Society, 7, 776–777.

Castellon, S. A., Hinkin, C. H., Wood, S., & Yarema, K. T. (1998). Apathy, depression, and cognitive performance in HIV-1 infection. Journal of Neuropsychiatry, 10(3), 320–329.

Castellon, S. A., Myers, H., & Hinkin, C. H. (2000). Neuropsychiatric disturbance is associated with executive dysfunction in HIV-infection. Journal of the International Neuropsychological Society, 6, 336–347.

Catz, S. L., Kelly, J. A., Bogart, L. M., Benotsch, E. G., & McAuliffe, T. L. (2000). Patterns, correlates, and barriers to medication adherence among persons prescribed new treatments for HIV disease. Health Psychology, 19(2), 124–133.

Centers for Disease Control and Prevention. (2007). HIV/AIDS Surveillance Report, 2005. (Vol. 17). Atlanta: U.S. Department of Health and Human Services, Centers for Disease Control and Prevention.

Chandra, P. S., Desai, G., & Ranjan, S. (2005). HIV and psychiatric disorders. The Indian Journal of Medical Research, 121, 451–467.

Ciesla, J. A., & Roberts, J. E. (2001). Meta-analysis of the relationship between HIV infection and risk for depressive disorders. American Journal of Psychiatry, 158(5), 725–730.

Clarke, D. E., van Reekum, R., Simard, M., Streiner, D. L., Conn, D., Cohen, T., et al. (2008). Apathy in dementia: clinical and sociodemographic correlates. The Journal of Neuropsychiatry and Clinical Neurosciences, 20(3), 337–347.

Clerici, M., Seminari, E., Maggiolo, F., Pan, A., Migliorino, M., Trabattoni, D., et al. (2002). Early and late effects of highly active antiretroviral therapy: a 2 year follow-up of antiviral-treated and antiviral-naive chronically HIV-infected patients. AIDS, 16(13), 1767–1773.

Cole, M. A., Castellon, S. A., Perkins, A. C., Ureno, O. S., Robinet, M. B., Reinhard, M. J., et al. (2007). Relationship between psychiatric status and frontal-subcortical systems in HIV-infected individuals. Journal of the International Neuropsychological Society, 13, 549–554.

Deschamps, A. E., Graeve, V. D., van Wijngaerden, E., De Saar, V., Vandamme, A. M., van Vaerenbergh, K., et al. (2004). Prevalence and correlates of nonadherence to antiretroviral therapy in a population of HIV patients using medication event monitoring system. AIDS Patient Care and STDs, 18(11), 644–657.

DiClementi, J. D., Ross, M. K., Mallo, C., & Johnson, S. C. (2004). Predictors of successful return to work from HIV-related disability. Journal of HIV/AIDS & Social Services, 3(3), 89–96.

Dray-Spira, R., Gueguen, A., & VESPA Study Group. (2008). Disease severity, self-reported experience of workplace discrimination and employment loss during the course of chronic HIV disease: differences according to gender and education. Occupational and Environmental Medicine, 65(2), 112–119.

Edinger, J. D., Carwille, S., Miller, P., Hope, V., & Mayti, C. (1994). Psychological status, syndromatic measures, and compliance with nasal CPAP therapy for sleep apnea. Perceptual and Motor Skills, 78, 1116–1118.

Ettenhofer, M. L., Hinkin, C. H., Castellon, S. A., Durvasula, R., Ullman, J., Lam, M., et al. (2009). Aging, neurocognition, and medication adherence in HIV infection. American Journal of Geriatric Psychiatry.

Ferrando, S., Evans, S., Goddin, K., Sewell, M., Fishman, B., & Rabkin, J. (1998). Fatigue in HIV illness: relationship to depression, physical limitations, and disability. Psychosomatic Medicine, 60(6), 759–764.

Ferrier, S. E., & Lavis, J. N. (2003). With health comes work? People living with HIV/AIDS consider returning to work. AIDS Care, 15(3), 423–435.

Fitten, L. J., Perryman, K. M., Wilkinson, C. J., Little, R. J., Burns, M. M., Pachana, N., et al. (1995). Alzheimer and vascular dementias and driving: A prospective road and laboratory study. JAMA, 273, 1360–1365.

Folkman, S., Chesney, M. A., Pollack, L., & Phillips, C. (1992). Stress, coping, and high-risk sexual behavior. Health Psychology, 11, 218–222.

Friedland, J., Renwick, R., & McColl, M. (1996). Coping and social support as determinants of quality of life in HIV/AIDS. AIDS Care, 8, 15–31.

Gifford, A. L., Bormann, J. E., Shively, M. J., Wright, B. C., Richman, D. D., & Bozzette, S. A. (2000). Predictors of self-reported adherence and plasma HIV concentrations in patients on multidrug antiretroviral regimens. Journal of Acquired Immune Deficiency Syndromes, 23(5), 386–395.

Gooding, A. L., Foley, J., Jang, J., Castellon, S. A., Sadek, J., Heaton, R. K., et al. (2008). Visuospatial and attentional abilities predict driving simulator performance among older HIV-infected adults [Abstract]. The Clinical Neuropsychologist, 22, 402.

Grant, I., Olshen, R. A., Atkinson, J. H., Heaton, R. K., Nelson, J., McCutchan, J. A., et al. (1993). Depressed mood does not explain neuropsychological deficits in HIV-infected persons. Neuropsychology, 7, 53–61.

Grassi, L., Righi, R., Makoui, S., Sighinolfi, L., Ferri, S., & Ghinelli, F. (1999). Illness behavior, emotional stress, and psychosocial factors among asymptomatic HIV-infected patients. Psychotherapy and Psychosomatics, 68, 31–38.

Halkitis, P., Palamar, J., & Mukherjee, P. (2008). Analysis of HIV medication adherence in relation to person and treatment characteristics using hierarchical linear modeling. AIDS Patient Care and STDs, 22(4), 323–335.

Harris, Y., Gorelick, P. B., Cohen, D., Dollear, W., Forman, H., & Fields, S. (1994). Psychiatric symptoms in dementia associated with stroke: a case–control analysis among predominantly African-American patients. Journal of the National Medical Association, 86, 697–702.

Heaton, R. K., Chelune, G. J., & Lehman, R. A. (1978). Using neuropsychological and personality tests to assess the likelihood of patient employment. The Journal of Nervous and Mental Disease, 166(6), 408–416.

Heaton, R. K., Marcotte, T. D., Mindt, M. R., Sadek, J., Moore, D. J., Bentley, H., et al. (2004). The impact of HIV-associated neuropsychological impairment on everyday functioning. Journal of the International Neuropsychological Society, 10(3), 317–331.

Heaton, R. K., Velin, R. A., McCutchan, A., et al. (1994). Neuropsychological impairment in human immunodeficiency virus-infection: Implications for employment. Psychosomatic Medicine, 56, 8–17.

Hinkin, C. H., van Gorp, W. G., Satz, P., Weisman, J., Thommes, J., & Buckingham, S. (1992). Depressed mood and its relationship to neuropsychological test performance in HIV-1 seropositive individuals. Journal of Clinical and Experimental Neuropsychology, 14, 289–297.

Hinkin, C. H., van Gorp, W. G., & Satz, P. (1995). Neuropsychological and neuropsychiatric aspects of HIV infection in adults. In H. I. Kaplan & B. J. Sadock (Eds.), Comprehensive textbook of psychiatry/VI (pp. 1669–1680). Baltimore: Williams & Wilkins.