Abstract

Background

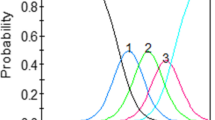

Health outcomes researchers are increasingly applying Item Response Theory (IRT) methods to questionnaire development, evaluation, and refinement efforts.

Objective

To provide a brief overview of IRT, to review some of the critical issues associated with IRT applications, and to demonstrate the basic features of IRT with an example.

Methods

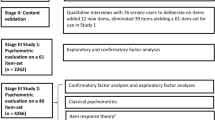

Example data come from 6,504 adolescent respondents in the National Longitudinal Study of Adolescent Health public use data set who completed the 19-item Feelings Scale for depression. The sample was split into a development sample and a validation sample. Scale items were calibrated in the development sample with the Graded Response Model, and the results were used to construct a 10-item short form. The short form was evaluated in the validation sample by examining the correspondence between IRT scores from the short form and the original form, and by comparing the proportions of respondents identified as depressed according to observed cut scores on the original and short forms.
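As a rough illustration of the calibration model named above, the sketch below computes category response probabilities under the logistic form of Samejima's graded response model: each cumulative probability P(X ≥ k | θ) is a logistic function of a(θ − b_k), and category probabilities are differences of adjacent cumulative probabilities. The function name and the slope and threshold values are hypothetical and are not estimates from this study; the logistic metric without a scaling constant is also an assumption.

```python
import math
from typing import List


def grm_category_probs(theta: float, a: float, b: List[float]) -> List[float]:
    """Category probabilities for one item under the graded response model.

    theta: latent trait level (e.g., depression severity).
    a:     item slope (discrimination) parameter.
    b:     ordered threshold (location) parameters, one per boundary
           between adjacent response categories.
    """
    if any(b[i] > b[i + 1] for i in range(len(b) - 1)):
        raise ValueError("thresholds must be in non-decreasing order")
    # Cumulative probabilities P(X >= k), padded with 1 (lowest) and 0 (beyond highest).
    cumulative = [1.0]
    cumulative += [1.0 / (1.0 + math.exp(-a * (theta - bk))) for bk in b]
    cumulative.append(0.0)
    # Probability of each category is the difference of adjacent cumulative values.
    return [cumulative[k] - cumulative[k + 1] for k in range(len(cumulative) - 1)]


if __name__ == "__main__":
    # Hypothetical 4-category item (illustrative values only).
    for theta in (-1.0, 0.0, 1.0, 2.0):
        probs = grm_category_probs(theta, a=2.0, b=[-0.5, 1.0, 2.5])
        print(theta, [round(p, 3) for p in probs])
```

With a larger slope, the category probabilities change more sharply as θ moves past each threshold, which is the sense in which an item is more discriminating.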

Results

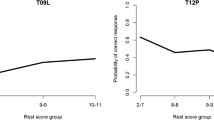

The 19 items varied in their discrimination (slope parameter range: .86–2.66), and item location parameters reflected a considerable range of depression (−.72 to 3.39). However, the item set is most discriminating at higher levels of depression. In the validation sample, IRT scores generated from the short and long forms were correlated at .96, and the average difference in these scores was −.01. In addition, nearly 90% of the sample was classified identically as at risk or not at risk for depression using observed score cut points from the short and long forms.

Conclusions

When used appropriately, IRT can be a powerful tool for questionnaire development, evaluation, and refinement, resulting in precise, valid, and relatively brief instruments that minimize response burden.

Notes

In these analyses, items were treated as ordinal and the WLSMV estimator was used, resulting in approximated χ² and df values; thus the difference in the df for these two models (1) does not directly correspond with the difference in the number of estimated parameters (6).

This is similar to the Bonferroni adjustment in that it considers the total number of evaluations, but uses less stringent comparison values for obtaining significance depending on the rank order of the observed p-values. The largest observed p-value has a comparison value of .05, the smallest observed p-value has a comparison value of .05 divided by the number of comparisons, and all other comparison values lie within this range, adjusted according to the rank-order of the magnitude of the observed p-values.
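The rank-based comparison values described in this note match the step-up procedure of Benjamini and Hochberg (1995), which appears in the reference list; assuming that is the adjustment intended, a minimal sketch follows. The function names and the example p-values are hypothetical and are not results from the article.

```python
from typing import List


def rank_thresholds(m: int, alpha: float = 0.05) -> List[float]:
    """Comparison values for ranks 1..m, ordered from smallest to largest p-value.

    Rank 1 (smallest p) is compared with alpha / m; rank m (largest p) with alpha.
    """
    return [alpha * rank / m for rank in range(1, m + 1)]


def step_up_reject(p_values: List[float], alpha: float = 0.05) -> List[bool]:
    """Flag which tests are declared significant under the step-up rule."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])  # indices by ascending p
    thresholds = rank_thresholds(m, alpha)
    # Find the largest rank whose p-value is at or below its comparison value.
    largest_passing_rank = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= thresholds[rank - 1]:
            largest_passing_rank = rank
    # Every test ranked at or below that rank is declared significant.
    reject = [False] * m
    for rank, idx in enumerate(order, start=1):
        if rank <= largest_passing_rank:
            reject[idx] = True
    return reject


if __name__ == "__main__":
    example_p = [0.01, 0.04, 0.03, 0.20]  # hypothetical p-values
    print(rank_thresholds(len(example_p)))
    print(step_up_reject(example_p))
```

Because the rule looks for the largest passing rank, a p-value that misses its own comparison value can still be declared significant when a larger p-value meets its (less stringent) comparison value.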

For the purposes of this demonstration, we elected not to conduct more sophisticated analyses for linking observed scores to one another and to IRT scores based on IRT calibrations [68].

References

Reeve, B. B., Hays, R. D., Bjorner, J. B., Cook, K. F., Crane, P. K., Teresi, J. A., Thissen, D., Revicki, D. A., Weiss, D. J., Hambleton, R. K., Liu, H., Gershon, R., Reise, S. P., Lai, J.-S., & Cella, D. Psychometric evaluation and calibration of health-related quality of life item banks: Plans for the patient-reported outcomes measurement information system (PROMIS). Medical Care, in press.

Embretson, S. E. (1996). The new rules of measurement. Psychological Assessment, 8, 341–349.

Hambleton, R. K., & Swaminathan, H. (1985). Item response theory: Principles and applications. Boston: Kluwer-Nijhoff.

Lord, F. M. (1980). Applications of item response theory to practical testing problems. Hillsdale, NJ: Erlbaum.

Wainer, H., Dorans, N. J., Flaugher, R. et al. (1990). Computerized adaptive testing: A primer. Hillsdale, NJ: Lawrence Erlbaum Associates.

Abrahamowicz, M., & Ramsay, J. O. (1992). Multicategorical spline model for item response theory. Psychometrika, 57(1), 5–27.

Rossi, N., Wang, X., & Ramsay, J. O. (2002). Nonparametric item response function estimates with the EM algorithm. Journal of Educational and Behavioral Statistics, 27(3), 291–317.

Reckase, M. D. (1997). The past and future of multidimensional item response theory. Applied Psychological Measurement, 21(1), 25–36.

Masters, G. N. (1982). A Rasch model for partial credit scoring. Psychometrika, 47, 149–174.

Samejima, F. (1969). Estimation of latent ability using a response pattern of graded scores. Psychometric Monograph, 34.

Samejima, F. (1997). Graded response model. In W. van der Linden & R. K. Hambleton (Eds.), Handbook of modern item response theory (pp. 85–100). New York: Springer.

Thissen, D., & Steinberg, L. (1986). A taxonomy of item response models. Psychometrika, 51(4), 567–577.

Hambleton, R. K. (2005). Applications of item response theory to improve health outcomes assessment: Developing item banks, linking instruments, and computer-adaptive testing. In J. Lipscomb, C. C. Gotay, & C. Snyder (Eds.), Outcomes assessment in cancer: Measures, methods, and applications (pp. 445–464). Cambridge University Press.

Dorans, N. J. (2007). Linking scores from multiple health outcome instruments. Quality of Life Research, (this issue).

Embretson, S. E., & Reise, S. P. (2000). Item response theory for psychologists. Mahwah, NJ: Lawrence Erlbaum.

Cattell, R. B. (1966). The scree test for the number of factors. Multivariate Behavioral Research, 1, 245–267.

Cattell, R. B. (1978). The scientific use of factor analysis. New York: Plenum.

Loehlin, J. C. (1987). Latent variable models. New Jersey: Lawrence Erlbaum Associates.

Holland, P. W., & Wainer, H. (1993). Differential item functioning. Hillsdale, NJ: Lawrence Erlbaum Associates.

Teresi, J., & Fleishman, J. (2007). Assessing measurement equivalence across populations: Differential item functioning (DIF). Quality of Life Research, (this issue).

Chen, W. H., & Thissen, D. (1997). Local dependence indices for item pairs using item response theory. Journal of Educational and Behavioral Statistics, 22, 265–289.

Andrich, D. (1978). A rating formulation for ordered response categories. Psychometrika, 43, 561–573.

Andrich, D. (1978). Application of a psychometric rating model to ordered categories, which are scored with successive integers. Applied Psychological Measurement, 2, 581–594.

Muraki, E. (1992). A generalized partial credit model: Application of the EM algorithm. Applied Psychological Measurement, 16, 159–176.

Muraki, E. (1997). A generalized partial credit model. In W. van der Linden & R. K. Hambleton (Eds.), Handbook of modern item response theory (pp. 153–164). New York: Springer.

Bock, R. D. (1972). Estimating item parameters and latent ability when responses are scored in two or more nominal categories. Psychometrika, 37, 29–51.

Rasch, G. (1960). Probabilistic models for some intelligence and attainment tests. Copenhagen: Danmarks Paedagogiske Institut.

Reise, S. P., & Waller, N. G. (2003). How many IRT parameters does it take to model psychopathology items? Psychological Methods, 8(2), 164–184.

Du Toit, M. (2003). IRT from SSI: BILOG-MG, MULTILOG, PARSCALE, TESTFACT. Lincolnwood, IL: Scientific Software International.

Ramsay, J. O. (1991). Kernel smoothing approaches to nonparametric item characteristic curve estimation. Psychometrika, 56, 611–630.

Ramsay, J. O. (1995). TestGraf – a program for the graphical analysis of multiple choice test and questionnaire data [computer software]. Montreal: McGill University.

Thissen, D. (1991). MULTILOG user’s guide: Multiple, categorical item analysis and test scoring using item response theory. Chicago: Scientific Software.

Andersen, E. B. (1973). A goodness of fit test for the Rasch model. Psychometrika, 38, 123–140.

Glas, C. A. W. (1988). The derivation of some tests for the Rasch model from the multinomial distribution. Psychometrika, 53(4), 525–546.

Rost, J., & von Davier, M. (1994). A conditional item-fit index for Rasch models. Applied Psychological Measurement, 18, 171–182.

Wright, B., & Mead, R. (1977). BICAL: Calibrating items and scales with the Rasch model (Research Memorandum No. 23). Chicago, IL: University of Chicago, Department of Education, Statistical Laboratory.

Wright, B., & Panchapakesan, N. (1969). A procedure for sample-free item analysis. Educational and Psychological Measurement, 29, 23–48.

McKinley, R., & Mills, C. (1985). A comparison of several goodness-of-fit statistics. Applied Psychological Measurement, 9, 49–57.

Yen, W. (1981). Using simulation results to choose a latent trait model. Applied Psychological Measurement, 5, 245–262.

Orlando, M., & Thissen, D. (2000). Likelihood-based item-fit indices for dichotomous item response theory models. Applied Psychological Measurement, 24(1), 50–64.

Orlando, M., & Thissen, D. (2003). Further examination of the performance of S-X², an item fit index for dichotomous item response theory models. Applied Psychological Measurement, 27(4), 289–298.

Bjorner, J. B., Christensen, K. B., Orlando, M., & Thissen, D. (2005). Testing the fit of item response theory models for patient reported outcomes. Poster presented at the annual meeting of the International Society of Quality of Life Research, San Francisco, CA, October 2005.

Drasgow, F., Levine, M. V., Tsien, S. et al. (1995). Fitting polytomous item response theory models to multiple-choice tests. Applied Psychological Measurement, 19, 143–165.

Kingston, N., & Dorans, N. (1985). The analysis of item-ability regressions: An exploratory IRT model fit tool. Applied Psychological Measurement, 9, 281–288.

Mislevy, R. J., & Bock, R. D. (1986). Bilog: Item analysis and test scoring with binary logistic models. Mooresville, Indiana: Scientific Software.

Wainer, H., & Mislevy, R. J. (1990). Item response theory, item calibration, and proficiency estimation. In H. Wainer, N. J. Dorans, R. Flaugher et al. (Eds.), Computerized adaptive testing: A primer (pp. 65–101). Hillsdale, NJ: Lawrence Erlbaum Associates.

Karabatsos, G. (2003). Comparing the aberrant response detection performance of thirty-six person-fit statistics. Applied Measurement in Education, 16(4), 277–298.

McLeod, L., Lewis, C., & Thissen, D. (2003). A Bayesian method for the detection of item preknowledge in computerized adaptive testing. Applied Psychological Measurement, 27(2), 121–137.

Hendrawan, I., Glas, C. A. W., & Meijer, R. R. (2005). The effect of person misfit on classification decisions. Applied Psychological Measurement, 29(1), 26–44.

Reise, S. P., Widaman, K. F., & Pugh, R. H. (1993). Confirmatory factor analysis and item response theory: Two approaches for exploring measurement invariance. Psychological Bulletin, 114(3), 552–566.

Linacre, J. M. (1994). Sample size and item calibration stability. Rasch Measurement Transactions, 7(4), 328.

Tsutakawa, R. K., & Johnson, J. C. (1990). The effect of uncertainty of item parameter estimation on ability estimates. Psychometrika, 55, 371–390.

Orlando, M., & Marshall, G. N. (2002). Differential item functioning in a Spanish translation of the PTSD checklist: Detection and evaluation of impact. Psychological Assessment, 14(1), 50–59.

Thissen, D., Steinberg, L., & Gerrard, M. (1986). Beyond group-mean differences: The concept of item bias. Psychological Bulletin, 99(1), 118–128.

Thissen, D. (2003). Estimation in MULTILOG. In M. du Toit (Ed.), IRT from SSI: BILOG-MG, MULTILOG, PARSCALE, TESTFACT. Lincolnwood, IL: Scientific Software International.

Bearman, P. S., Jones, J., & Udry, J. R. (1997). The National Longitudinal Study of Adolescent Health: Research design. http://www.cpc.unc.edu/projects/addhealth/design/html

Radloff, L. S. (1977). The CES-D scale: A self-report depression scale for research in the general population. Applied Psychological Measurement, 1(3), 385–401.

Goodman, E., & Capitman, J. (2000). Depressive symptoms and cigarette smoking among teens. Pediatrics, 106, 748–755.

McLeod, L. D., Swygert, K. A., & Thissen, D. (2001). Factor analysis for items scored in two categories. In D. Thissen & H. Wainer (Eds.), Test scoring. Mahwah, NJ: Lawrence Erlbaum Associates.

Stout, W. A. (1987). A nonparametric approach for assessing latent trait unidimensionality. Psychometrika, 52, 28.

Muthén, L. K., & Muthén, B. (1998–2004). Mplus user’s guide. Los Angeles, CA: Muthén & Muthén.

Steiger, J. H., & Lind, J. (1980). Statistically based tests for the number of common factors. Paper presented at the Psychometric Society meeting, Iowa City.

Bentler, P. M., & Bonett, D. G. (1980). Significance tests and goodness of fit in the analysis of covariance structures. Psychological Bulletin, 88, 588–606.

Bentler, P. M. (1990). Comparative fit indexes in structural models. Psychological Bulletin, 107(2), 238–246.

Browne, M. W., & Cudeck, R. (1993). Alternative ways of assessing model fit. In K. A. Bollen & J. S. Long (Eds.), Testing structural equation models. Thousand Oaks, CA: Sage.

Hu, L. T., & Bentler, P. M. (1999). Cutoff criteria for fit indices in covariance structure analysis: Conventional criteria versus new alternatives. Structural Equation Modeling, 6, 1–55.

Benjamini, Y., & Hochberg, Y. (1995). Controlling the false discovery rate: A practical and powerful approach to multiple testing. Journal of the Royal Statistical Society, Series B, 57, 289–300.

Orlando, M., Sherbourne, C. D., & Thissen, D. (2000). Summed-score linking using item response theory: Application to depression measurement. Psychological Assessment, 12(3), 354–359.

Smith, G. T., McCarthy, D. M., & Anderson, K. G. (2000). On the sins of short-form development. Psychological Assessment, 12(1), 102–111.

Reeve, B. B., & Mâsse, L. C. (2004). Item response theory modeling for questionnaire evaluation. In S. Presser, J. M. Rothgeb, M. P. Couper, J. T. Lessler, E. Martin, J. Martin, & E. Singer (Eds.), Methods for testing and evaluating survey questionnaires (pp. 247–273). Hoboken, NJ: Wiley.

Wilson, M., Allen, D. D., & Li, J. C. (2006). Improving measurement in health education and health behavior research using item response modeling: Comparison with the classical test theory approach. Health Education Research, 21(1), i19–i32.

Cite this article

Edelen, M.O., Reeve, B.B. Applying item response theory (IRT) modeling to questionnaire development, evaluation, and refinement. Qual Life Res 16 (Suppl 1), 5–18 (2007). https://doi.org/10.1007/s11136-007-9198-0

DOI: https://doi.org/10.1007/s11136-007-9198-0