ABSTRACT

BACKGROUND

The probability of disease following a diagnostic test depends not only on the sensitivity and specificity of the test, but also on the prevalence of the disease in the population of interest (the pre-test probability). How physicians use this information is not well known.

OBJECTIVE

To assess whether physicians correctly estimate post-test probability at various levels of prevalence, and to explore this skill across respondent groups.

DESIGN

Randomized trial.

PARTICIPANTS

Population-based sample of 1,361 physicians of all clinical specialties.

INTERVENTION

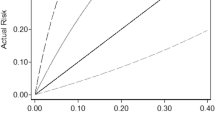

We described a scenario of a highly accurate screening test (sensitivity 99% and specificity 99%) in which we randomly manipulated the prevalence of the disease (1%, 2%, 10%, 25%, 95%, or no information).

MAIN MEASURES

We asked physicians to estimate the probability of disease following a positive test (categorized as <60%, 60–79%, 80–94%, 95–99.9%, and >99.9%). Each answer was correct for a different version of the scenario, and no answer was possible in the “no information” scenario. We estimated the proportion of physicians proficient in assessing post-test probability as the proportion of correct answers beyond the distribution of answers attributable to guessing.
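The correspondence between each prevalence level and its answer category follows from Bayes' theorem. The sketch below is illustrative only and is not the authors' analysis code: the function names are hypothetical, and the handling of values that fall between the published category bounds (for example between 79% and 80%) is an assumption.

```python
def post_test_probability(prevalence, sensitivity=0.99, specificity=0.99):
    """Probability of disease given a positive test (Bayes' theorem)."""
    true_pos = sensitivity * prevalence
    false_pos = (1.0 - specificity) * (1.0 - prevalence)
    return true_pos / (true_pos + false_pos)


def answer_category(p):
    """Map a probability to the survey's answer categories.

    Boundary handling between categories is an assumption made here.
    """
    pct = 100.0 * p
    if pct < 60:
        return "<60%"
    if pct < 80:
        return "60-79%"
    if pct < 95:
        return "80-94%"
    if pct <= 99.9:
        return "95-99.9%"
    return ">99.9%"


# The five prevalence levels used in the scenario (the sixth arm gave none).
for prevalence in (0.01, 0.02, 0.10, 0.25, 0.95):
    p = post_test_probability(prevalence)
    print(f"prevalence {prevalence:.0%}: "
          f"post-test probability {p:.1%} ({answer_category(p)})")
```

With sensitivity and specificity both at 99%, a prevalence of 1% gives a post-test probability of about 50%, while a prevalence of 25% gives about 97%, so each of the five prevalence levels lands in a different answer category.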

KEY RESULTS

Most respondents in each of the six groups (67%–82%) selected a post-test probability of 95–99.9%, regardless of the prevalence of disease and even when no information on prevalence was provided. This answer was correct only for a prevalence of 25%. We estimated that 9.1% (95% CI 6.0–14.0) of respondents knew how to correctly assess post-test probability. This proportion did not vary with clinical experience or practice setting.

CONCLUSIONS

Most physicians do not take into account the prevalence of disease when interpreting a positive test result. This may cause unnecessary testing and diagnostic errors.

Acknowledgments

The authors thank Véronique Kolly, Research Nurse at the University Hospitals of Geneva, for her help and guidance in conducting the survey.

Funding

The survey was funded by The Research and Development Program of University Hospitals of Geneva. There was no external funding.

Presentations

Part of this paper was presented as an abstract and poster at the 78th Annual Meeting of the Swiss Society of Internal Medicine, Basel, Switzerland, in May 2010.

Conflicts of Interest

None disclosed.

Electronic Supplementary Materials

Below is the link to the electronic supplementary material.

ESM 1

(DOC 28 kb)

Cite this article

Agoritsas, T., Courvoisier, D.S., Combescure, C. et al. Does Prevalence Matter to Physicians in Estimating Post-test Probability of Disease? A Randomized Trial. J GEN INTERN MED 26, 373–378 (2011). https://doi.org/10.1007/s11606-010-1540-5

DOI: https://doi.org/10.1007/s11606-010-1540-5