Abstract

Resurgence is the recurrence of a previously reinforced and then extinguished behavior induced by the extinction of another more recently reinforced behavior. Resurgence provides insight into behavioral processes relevant to treatment relapse of a range of problem behaviors. Resurgence is typically studied across three phases: (1) reinforcement of a target response, (2) extinction of the target and concurrent reinforcement of an alternative response, and (3) extinction of the alternative response, resulting in the recurrence of target responding. Because each phase typically occurs successively and spans multiple sessions, extended time frames separate the training and resurgence of target responding. This study assessed resurgence more dynamically and throughout ongoing training in 6 pigeons. Baseline entailed 50-s trials of a free-operant psychophysical procedure, resembling Phases 1 and 2 of typical resurgence procedures. During the first 25 s, we reinforced target (left-key) responding but not alternative (right-key) responding; contingencies reversed during the second 25 s. Target and alternative responding followed the baseline reinforcement contingencies, with alternative responding replacing target responding across the 50 s. We observed resurgence of target responding during signaled and unsignaled probes that extended trial durations an additional 100 s in extinction. Furthermore, resurgence was greater and/or sooner when probes were signaled, suggesting an important role of discriminating transitions to extinction in resurgence. The data were well described by an extension of a stimulus-control model of discrimination that assumes resurgence is the result of generalization of obtained reinforcers across space and time. Therefore, the present findings introduce novel methods and quantitative analyses for assessing behavioral processes underlying resurgence.

The recurrence of previously reinforced behavior after extinction suggests that extinction does not reverse the original learning of that behavior. The primary evidence that the original learning is retained comes from a large collection of literature showing that behavior returns after extinction (see Bouton, Winterbauer, & Todd, 2012, for a review). A variety of methods have been used to reveal the return of extinguished operant behavior in species ranging from fish, rats, and pigeons, to typical and developmentally delayed humans (see Bouton & King, 1983; da Silva, Cançado, & Lattal, 2014; Epstein, 1985; Kuroda, Cançado, & Podlesnik, 2016; Podlesnik & Shahan, 2009, 2010; Spradlin, Fixsen, & Girardeau, 1969; Winterbauer & Bouton, 2010). Moreover, each method has been used to represent an environmental condition influencing the likelihood of treatment relapse in clinical situations. Therefore, findings from basic research using animal models are useful for understanding how fundamental behavioral processes underlie relapse of problem behavior, ranging from substance abuse to self-injury (see Mace & Critchfield, 2010; Pritchard, Hoerger, Mace, Penney, & Harris, 2014).

Resurgence is a laboratory model of treatment relapse, in which a previously extinguished target behavior recurs when an alternative behavior is extinguished (e.g., Leitenberg, Rawson, & Mulick, 1975; see Lattal & St. Peter Pipkin, 2009; Shahan & Sweeney, 2011, for reviews). Typically, these procedures employ a three-phase experimental design, where phases are conducted sequentially, with each phase spanning multiple sessions (e.g., Lieving & Lattal, 2003; Podlesnik, Jimenez-Gomez, & Shahan, 2006; Winterbauer & Bouton, 2010). For example, Podlesnik and Kelley (2014) arranged a variable-interval (VI) 60-s schedule of food reinforcement for target responding with pigeons in Phase 1. In Phase 2, they extinguished target responding while introducing and reinforcing a concurrently available alternative response according to a VI 60-s schedule. In Phase 3, extinguishing alternative responding produced a transient but reliable increase in target responding, despite target responding remaining under extinction. The resurgence of target responding suggests that clinical interventions eliminating problem behavior by reinforcing prosocial alternative responses (e.g., Higgins, Heil, & Sigmon, 2013; Petscher, Rey, & Bailey, 2009) could result in a resurgence of problem behavior if reinforcement for the alternative response becomes compromised (see Volkert, Lerman, Call, & Trosclair-Lasserre, 2009). For this reason, understanding the behavioral processes underlying resurgence can provide insight into clinical strategies for reducing relapse of problem behavior (see Nevin & Wacker, 2013; Podlesnik & DeLeon, 2015; Pritchard et al., 2014; Wacker, Harding, Berg, Lee, Schieltz, Padilla, Nevin, & Shahan, 2011, for relevant discussions).

The three phases of typical resurgence procedures have provided a laboratory model for assessing how extended conditions of differential reinforcement of an alternative response can come to induce the recurrence of the previously reinforced target response. Specifically, resurgence of target responding typically occurs in the third phase, following the training and elimination of target responding across the two prior phases. However, typical resurgence procedures only assess behavior across extended time frames, with each phase spanning multiple sessions. Other methods make it possible to study resurgence in more dynamic environments.

This study introduced a novel method for studying resurgence using a modified version of the free-operant psychophysical procedure (FOPP; see top panel of Fig. 1). The FOPP was developed to study temporal discrimination processes by assessing how responding transitions from one alternative to another when reinforcement contingencies repeatedly reverse between those alternatives at a specified time (e.g., Bizo & White, 1994; da Silva & Lattal, 2006; Stubbs, 1980). Bizo and White (1994) arranged 50-s trials with pigeons pecking for food reinforcement across two concurrently available keys. During the first half of each trial, responding on the left key was intermittently reinforced according to a VI schedule while responding on the right key was not reinforced (effectively, a concurrent VI vs. extinction schedule). At 25 s, the contingencies reversed so that responding to the left key was no longer reinforced, but responding on the right key was reinforced according to a separate VI schedule (a concurrent extinction vs. VI schedule). Therefore, the contingency change between keys was signaled only by the passage of time since trial onset. The FOPP is used to assess timing by examining moment-to-moment responding across the 50-s trials and when responding switches from the left to the right key. Bizo and White found that the proportion of responding on the right key followed a sigmoidal shape, with switches from the left to right key occurring at approximately the time at which reinforcement switched. Thus, the pigeons effectively timed the reversal of reinforcement contingencies between the keys. We repurposed the FOPP to assess resurgence by extending the duration of trials and arranging extinction throughout those trials.

Fig. 1 Procedure for trials with reinforcement (top panel) and probe trials (bottom panel). Keys were darkened during intertrial intervals and illuminated green throughout trials. ‘+’ indicates the operation of a VI 30-s reinforcement schedule and ‘-’ represents extinction. During the first half of trials with reinforcement (labeled Phase 1 to link with labels from typical resurgence procedures), target (left-key) responding was reinforced and alternative (right-key) responding was not. During the second half of trials with reinforcement (Phase 2), the contingencies were reversed. The bold arrow indicates the 50-s mark, at which point both keys remained green in unsignaled probes but changed from green to red for 5 s in signaled probes.

The FOPP shares a number of similarities with the typical resurgence procedure (e.g., Podlesnik & Kelley, 2014). Both procedures initially arrange reinforcement for one response, as in Phase 1 of resurgence procedures and the first half of the trial in the FOPP. Next, both procedures extinguish the first response and reinforce a second response, as in Phase 2 of resurgence procedures and the second half of the trial in the FOPP. These procedural similarities suggest that the FOPP arranges conditions in which resurgence of target responding could be assessed upon extinguishing alternative responding, but under more dynamic conditions. To assess resurgence in the FOPP, our study arranged probe trials in which the duration of FOPP trials was extended beyond the typical 50 s (see bottom panel of Fig. 1). Unlike in baseline (reinforced) FOPP trials, both responses were extinguished throughout these extended probe trials. If extending the FOPP trial in extinction beyond 50 s resembles the extinction of the alternative response during Phase 3 of resurgence procedures, target responding should recur after 50 s during these extended probe trials. In addition, we assessed the effects of a brief stimulus change to both keylights at the 50-s mark during half of the probe trials. Consistent with previous studies signaling the offset of reinforcement for alternative responding with changes in keylight stimuli (e.g., Kincaid, Lattal, & Spence, 2015; Podlesnik & Kelley, 2014), we predicted that these signaled probe trials would increase the discriminability of the extinction of alternative responding and, thereby, increase the immediacy and/or size of resurgence.

Furthermore, we assessed the recurrence of target responding using a quantitative model of temporal discrimination to assess the extent to which stimulus control by obtained reinforcers plays a role in resurgence. Davison and Nevin (1999) suggested that control by stimuli, responses, and reinforcers depends jointly on the discriminated relation between (1) stimuli and reinforcers, and (2) responses and reinforcers. Responding under time-based contingencies similar to the FOPP has been successfully modeled quantitatively by assuming that time elapsed since any event functions as a discriminative stimulus for the probability of a reinforcer for a given response (e.g., Cowie, Davison, Blumhardt, & Elliffe, 2016; Cowie, Davison, & Elliffe, 2014, 2016; Cowie, Elliffe, & Davison, 2013; Davison, Cowie, & Elliffe, 2013). This stimulus-control approach to understanding contingencies among events suggests that time and other exteroceptive stimuli exert the same sort of control over responding (see also Cowie & Davison, 2016). If resurgence of a previously extinguished response is affected by the similarity between the current context and a context in which responding was previously reinforced (e.g., Bouton et al., 2012), then resurgence in the FOPP may be described by the same stimulus-control approach used to describe responding in standard temporal-discrimination procedures. This study thus aimed to assess the extent to which responding during signaled and unsignaled probe trials could be described in terms of a stimulus-control approach and, by extension, the degree to which resurgence depends on control by stimuli that signal the response likely to produce a reinforcer.

Method

Subjects

The subjects were six experimentally naïve pigeons, numbered 161 to 166, maintained at 85% ± 15 g of their free-feeding weights by postsession supplementary feeding of mixed grain. Water and grit were freely available at all times. Pigeons were housed in a colony room with a shifted light–dark cycle, with lights turned off at 4 p.m. and on at midnight. Experimental sessions ran at 1 a.m., 7 days a week. No personnel entered the room during sessions.

Apparatus

The pigeons’ home cages also served as experimental chambers. The chambers measured 380 mm high × 380 mm wide × 380 mm deep. Three walls were constructed from sheet metal, and the floor, ceiling, and front wall were steel bars. Three translucent response keys were mounted on the right wall, 300 mm above the floor and 120 mm apart, center to center. Only the two side keys were used, and both could be transilluminated green and red. Pecks exceeding 0.1 N of force to lit keys were recorded as responses. A hopper filled with wheat sat behind a magazine aperture, located 100 mm below the response keys and measuring 50 mm high × 50 mm wide × 50 mm deep. During reinforcement, the magazine was illuminated, and the hopper was raised and accessible for 2 s. All experimental events were programmed and recorded by a computer running MED-PC in an adjacent room.

Procedure

Pretraining.

After autoshaping (Brown & Jenkins, 1968), the pigeons were trained to peck the side keys when lit green using a discrete-trial procedure similar to that of da Silva and Lattal (2006). Each trial consisted of a single reinforcement schedule running on one of the two side keys and ended after a reinforcer had been delivered. Left- and right-key trials alternated with an equal probability, and trials were separated by a 10-s intertrial interval (ITI), during which both keys were darkened. During the first of three pretraining sessions, the reinforcement schedule during each trial doubled progressively from fixed-ratio (FR) 1 to FR 8, and was then capped at FR 10. The schedule incremented after 7 + n reinforcers were obtained at that ratio, where n is the number of previous increments. During the second session, the reinforcement schedule was increased progressively from VI 10 s to VI 30 s in 4-s increments. The schedule value for each key was increased after 1 + n reinforcers were obtained at that value, where n is the number of previous increments. Finally, in the third pretraining session, both keys were lit green, and the pigeons responded to a concurrent VI 30-s, VI 30-s schedule, where successive reinforcers alternated between left and right keys.
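The pretraining progressions can be made concrete with a short Python sketch. It encodes our reading of the text, not the authors' program: fixed ratios double from FR 1 to FR 8 and then step once to the FR 10 cap, VI values rise from 10 s to 30 s in 4-s steps, and the criterion at each value is 7 + n (FR) or 1 + n (VI) reinforcers, where n is the number of previous increments. The helper names `fr_values` and `progression` are hypothetical.

```python
def fr_values(start=1, last_doubled=8, cap=10):
    """Fixed ratios as we read the text: double from `start` to `last_doubled`, then cap."""
    values, ratio = [], start
    while ratio <= last_doubled:
        values.append(ratio)
        ratio *= 2
    return values + [cap]


def progression(values, base):
    """Pair each schedule value with the reinforcers required before the next increment.

    The criterion is base + n, where n is the number of previous increments.
    The final value is terminal, so its criterion is None.
    """
    last = len(values) - 1
    return [(value, base + n if n < last else None) for n, value in enumerate(values)]


# Session 1: FR schedule, criterion 7 + n.
FR_STEPS = progression(fr_values(), base=7)
# Session 2: VI 10 s to VI 30 s in 4-s steps, criterion 1 + n (tracked per key).
VI_STEPS = progression(list(range(10, 31, 4)), base=1)

if __name__ == "__main__":
    print("FR:", FR_STEPS)
    print("VI:", VI_STEPS)
```

Under this reading, a pigeon would need 7 + 8 + 9 + 10 = 34 reinforcers to reach FR 10, and 1 + 2 + 3 + 4 + 5 = 15 reinforcers on a given key to reach VI 30 s.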

Baseline

The top panel of Fig. 1 shows a reinforced FOPP trial. Both side keys were darkened during the 10-s ITIs and illuminated green during the 50-s trials. Thus, the illumination of the keylights at trial onset may function as a time marker (to be discussed below). During the first 25-s half of trials with reinforcement (labeled Phase 1 to link with labels from typical resurgence procedures), left-key (hereafter target) responding was reinforced on a VI 30-s schedule, and right-key (hereafter alternative) responding was not reinforced. During the second 25-s half (labeled Phase 2), the contingencies reversed so that target responding was no longer reinforced and alternative responding was reinforced on an independent VI 30-s schedule. Left and right keylights were not counterbalanced, as the pigeons were all experimentally naïve. The VI schedules were sampled without replacement from lists of 13 intervals (Fleshler & Hoffman, 1962). Consistent with previous FOPP procedures (e.g., Bizo & White, 1994), interval timers ran only during the appropriate half, and reinforcers arranged but not obtained were held until the next reinforced trial. Hopper times were not subtracted from the trial timer, to maintain constant trial durations (see also Bizo & White, 1994).
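For readers reimplementing the baseline schedules, the following Python sketch generates a 13-interval VI 30-s list with the Fleshler and Hoffman (1962) progression, t_n = T[1 + ln N + (N − n) ln(N − n) − (N − n + 1) ln(N − n + 1)] for n = 1, ..., N, and samples it without replacement. It assumes the closed-form progression (the text does not say which variant was used) and that each list is reshuffled once exhausted; `IntervalSampler` is a hypothetical name, and the held-reinforcer and half-trial timer logic is omitted.

```python
import math
import random


def fleshler_hoffman(mean_s, n_intervals=13):
    """Fleshler & Hoffman (1962) progression of VI intervals with mean `mean_s`."""

    def xlnx(x):
        # Convention used by the progression: 0 * ln(0) = 0.
        return x * math.log(x) if x > 0 else 0.0

    N = n_intervals
    return [
        mean_s * (1 + math.log(N) + xlnx(N - n) - xlnx(N - n + 1))
        for n in range(1, N + 1)
    ]


class IntervalSampler:
    """Draw intervals without replacement; reshuffle the full list when it runs out."""

    def __init__(self, intervals, seed=None):
        self.intervals = list(intervals)
        self.rng = random.Random(seed)
        self.pool = []

    def draw(self):
        if not self.pool:
            self.pool = self.intervals[:]
            self.rng.shuffle(self.pool)
        return self.pool.pop()


if __name__ == "__main__":
    vi30 = fleshler_hoffman(30.0)
    print([round(t, 1) for t in vi30])
    print("mean:", sum(vi30) / len(vi30))
    target_timer = IntervalSampler(vi30, seed=1)       # left key, first half of trials
    alternative_timer = IntervalSampler(vi30, seed=2)  # right key, independent list
    print(target_timer.draw(), alternative_timer.draw())
```

Because the logarithmic terms telescope, the 13 intervals average exactly 30 s (up to floating-point error); the shortest is about 1.2 s and the longest about 107 s. Separate sampler instances keep the two keys' schedules independent, as the text describes.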

Baseline was conducted for 35 sessions. Sessions began with a 10-s ITI. A total of sixty 50-s FOPP trials, divided into 44 trials with reinforcement and 16 extinction trials, were randomly dispersed within each baseline session. Extinction trials were identical to trials with reinforcement except that both keys arranged extinction for the entire 50-s duration of the trial. Analysis of baseline responding was taken from these extinction trials to exclude the local effects of reinforcement (see also Bizo & White, 1994; Chiang, Al-Ruwaitea, Ho, Bradshaw, & Szabadi, 1998; Cowie, Bizo, & White, 2016).

Probes

After baseline, extended probe trials were introduced to assess resurgence of target responding. The bottom panel of Fig. 1 shows the stimulus arrangement during probe trials. Each probe trial was 150 s in duration, and both keys arranged extinction throughout. During some probe trials (hereafter unsignaled probes), both keys were illuminated green throughout the 150-s duration of the trial. In other probe trials (hereafter signaled probes), both keys were temporarily illuminated red for 5 s at the 50-s mark (see arrow in Fig. 1), before reverting to green for the remainder of the probe trial.

We conducted probes for 23 consecutive sessions following baseline. During each session, eight probe trials replaced the 16 programmed extinction trials from baseline sessions. Fewer probe trials than extinction trials were run to minimize the difference in session duration. Thus, each probe session consisted of 44 trials with reinforcement and eight probe trials (four unsignaled and four signaled). The probe trials were randomly distributed within each probe session, with the constraints that probe trials occurred only after 11 trials with reinforcement from the start of the session and were not presented successively.
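The randomization constraints and the session-duration trade-off can be sketched in Python. This is an illustration under assumptions of ours, not the authors' MED-PC code: "only after 11 trials with reinforcement" is read as meaning the first probe can occur no earlier than the 12th trial, a session may end on a probe, and each trial (50 s reinforced or extinction, 150 s probe) is preceded by a 10-s ITI, with hopper time included in the trial timer as the text states. The function names are hypothetical.

```python
import random

TRIAL_S, PROBE_S, ITI_S = 50, 150, 10


def probe_session_order(n_reinforced=44, n_each=4, lead_in=11, seed=None):
    """Return a trial sequence: 'R' reinforced, 'U' unsignaled probe, 'S' signaled probe.

    Assumed constraints: the first `lead_in` trials are reinforced and no two probes
    are adjacent. Each probe goes into a distinct slot immediately after one of the
    reinforced trials lead_in..n_reinforced, so every probe follows an 'R'.
    """
    rng = random.Random(seed)
    probes = ["U"] * n_each + ["S"] * n_each
    rng.shuffle(probes)
    slots = set(rng.sample(range(lead_in, n_reinforced + 1), len(probes)))
    probe_iter = iter(probes)
    order = []
    for k in range(1, n_reinforced + 1):
        order.append("R")
        if k in slots:
            order.append(next(probe_iter))
    return order


def session_duration_s(order):
    """Every trial is preceded by a 10-s ITI; probes last 150 s, other trials 50 s."""
    return sum(ITI_S + (PROBE_S if trial in ("U", "S") else TRIAL_S) for trial in order)


if __name__ == "__main__":
    order = probe_session_order(seed=3)
    print("".join(order))
    baseline = ["R"] * 44 + ["X"] * 16  # 'X' = 50-s extinction trial
    print(session_duration_s(baseline))
    print(session_duration_s(order))
    print(session_duration_s(["R"] * 44 + ["U"] * 8 + ["S"] * 8))
```

Under these assumptions, a probe session lasts 3,920 s versus 3,600 s for a baseline session, whereas replacing all 16 extinction trials with probes would have stretched it to 5,200 s. Placing probes into distinct slots that each follow a reinforced trial satisfies the no-successive-probes constraint by construction, so no rejection sampling is needed.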

Data analyses

The total numbers of target and alternative responses and of obtained reinforcers were aggregated in 1-s time bins. Local rates of responses and reinforcers were calculated for each 1-s time bin by dividing the number of responses (or reinforcers) in each bin by the number of trials from which they were aggregated, and then multiplying by 60 to give the response or reinforcement rate per minute (see Cowie, Elliffe, & Davison, 2013). In addition, response rates during probe trials were further transformed by dividing each data point after 50 s by the maximum (peak) response rate in the initial 50 s. This proportion-of-peak measure normalizes the data, so that relative increases after 50 s can be compared directly across unsignaled and signaled probes.
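The two transformations described above can be sketched with NumPy. The array layout (one row per trial, one column per time bin) and the function names are our assumptions; for 1-s bins, the per-minute scaling factor 60 / bin width reduces to the multiplication by 60 described in the text.

```python
import numpy as np


def local_rates(counts, bin_s=1.0):
    """Events per minute in each time bin.

    counts: array of shape (n_trials, n_bins) holding responses (or reinforcers)
    per trial and bin. The total in each bin is divided by the number of trials
    and scaled to a per-minute rate (x 60 for 1-s bins).
    """
    counts = np.asarray(counts, dtype=float)
    n_trials = counts.shape[0]
    return counts.sum(axis=0) / n_trials * (60.0 / bin_s)


def proportion_of_peak(rates, split_bin=50):
    """Express rates after the first `split_bin` bins relative to the peak within them."""
    rates = np.asarray(rates, dtype=float)
    peak = rates[:split_bin].max()
    if peak <= 0:
        raise ValueError("peak rate in the initial bins must be positive")
    return rates[split_bin:] / peak


if __name__ == "__main__":
    # Two hypothetical trials, three 1-s bins each: 60 responses/min in every bin.
    print(local_rates([[1, 0, 2], [1, 2, 0]]))
    # Bins after the split expressed relative to the early peak (40).
    print(proportion_of_peak([10, 40, 20, 30], split_bin=2))
```

With probe trials stored as 150 one-second bins, `proportion_of_peak(local_rates(probe_counts))` would yield the 100 normalized values after the 50-s mark that are compared across unsignaled and signaled probes.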

Results

Baseline

The total number of responses was aggregated in 1-s time bins from the arranged extinction trials in the last 20 sessions of baseline. The left panel of Fig. 2 shows responding during baseline by plotting the proportion of alternative-key responses in each 1-s time bin since trial onset. Alternative responding remained low early in the trials and increased sigmoidally, surpassing target responding before the 25-s midpoint for all pigeons except Pigeon 164, whose responding shifted slightly after 25 s.
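"Surpassing target responding" corresponds to the proportion of alternative responses rising through .5. The Python sketch below computes that proportion from per-bin counts and estimates the crossing time by linear interpolation between bin midpoints. The interpolation rule, the midpoint convention, and the function names are our assumptions; the article reports the crossing only qualitatively.

```python
import numpy as np


def proportion_alternative(target_counts, alt_counts):
    """Alternative responses / (target + alternative) in each bin; NaN where no responses."""
    target = np.asarray(target_counts, dtype=float)
    alt = np.asarray(alt_counts, dtype=float)
    total = target + alt
    p = np.full(total.shape, np.nan)
    np.divide(alt, total, out=p, where=total > 0)
    return p


def crossing_time(p, bin_s=1.0, criterion=0.5):
    """First time at which the proportion rises through `criterion`.

    Times are bin midpoints; the crossing is linearly interpolated between the
    two bins that straddle the criterion. Returns None if it is never crossed.
    """
    p = np.asarray(p, dtype=float)
    mids = (np.arange(p.size) + 0.5) * bin_s
    for k in range(1, p.size):
        lo, hi = p[k - 1], p[k]
        if np.isnan(lo) or np.isnan(hi):
            continue
        if lo < criterion <= hi:
            frac = (criterion - lo) / (hi - lo)
            return float(mids[k - 1] + frac * (mids[k] - mids[k - 1]))
    return None


if __name__ == "__main__":
    tar = [9, 7, 3, 1]  # hypothetical target counts in four 1-s bins
    alt = [1, 3, 7, 9]  # hypothetical alternative counts
    p = proportion_alternative(tar, alt)
    print(p, crossing_time(p))
```

Applied to the 50 one-second bins of a pigeon's baseline extinction trials, a crossing time below 25 s would correspond to the early switch seen in five of the six pigeons.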

The right panel of Fig. 2 shows the obtained reinforcer rates from trials with reinforcers pooled in 1-s time bins. Local reinforcer rates were pooled from trials with reinforcers and calculated by dividing the total number of reinforcers in each time bin by the number of trials from which the reinforcers were aggregated, and then multiplying by 60 to give reinforcers per min. For all pigeons, there was a brief increase in obtained reinforcers for target responding during the first and/or second time bin. Unobtained reinforcers set up near the end of the first half of the trial were obtained with the first target response of the following trial. This likely shifted responding to the target key during the initial seconds of the trial in the five out of six pigeons noted above (see Bizo & White, 1995; Cowie, Bizo, & White, 2016). The gradually decreasing trend in obtained reinforcers for target responding across the first 25 s resulting from increases in alternative responding also potentially contributed to the leftward shift in responding.

Probes

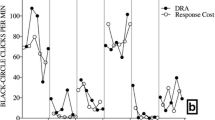

The left and center panels of Fig. 3 show the rate of target and alternative responding in the unsignaled and signaled probes, respectively, expressed as a function of time since trial onset. Responding in the initial 50 s was similar between unsignaled and signaled probes and followed the same pattern as baseline (see Fig. 2)—target responding decreased and alternative responding increased as a function of time. After the initial 50 s during signaled probes, alternative responding sharply decreased at the onset of the signal and remained low during the 5-s signal, with the exception of Pigeon 162. During unsignaled probes, alternative responding remained elevated for all pigeons.

Fig. 3 Target (Tar) and alternative (Alt) response rates during unsignaled (left panel) and signaled (center panel) probes. Vertical lines indicate the 25-s and 50-s marks for both types of probes, as well as the offset of the signal in signaled probes. Note the different y-axis scales for individual subjects. The right panel re-presents target responding after the initial 50 s as proportions of peak target responding, in both unsignaled (Unsig; gray function) and signaled (Sig; black function) probes.

For all pigeons, target responding resurged after 50 s to some degree. In both types of probe trials, target responding tended to show several points of inflection, with increases shortly after 50 s, followed by a decrease, and often a second increase and decrease around 125 s. A clear exception was Pigeon 164, during signaled probes. In addition, alternative responding generally complemented the inflections in target responding in a cyclical pattern.

The right panel of Fig. 3 directly compares responding across unsignaled (gray function) and signaled probes (black function). Peak target responding tended to occur sooner and/or to be greater in signaled probes for all pigeons, with effects being smallest for Pigeons 162 and 164.

Modeling local-level resurgence

The cyclical changes in response rates across time in baseline and probe trials suggest that responding was strongly under control of the likely availability of a reinforcer at each given time, as signaled by time since the most recent keylight illumination. The cyclical pattern in probe trials after 50 s suggests that the keylight illumination and darkening associated with the beginning and end of a trial facilitated, but were not necessary for, discrimination of the likely end of one trial and start of the next. In the absence of such a stimulus change, responding appeared to come under control of the contingencies that would usually be in effect once 50 s had elapsed since the beginning of the previous trial, that is, of the reinforcer probabilities previously experienced at the beginning of trials. Given the temporal regularity of events in baseline, this division of stimulus control between time since the previous trial onset and the keylight illumination is unsurprising.

We therefore assessed whether a stimulus-control model of temporal-discrimination performance (Cowie, Davison, & Elliffe, 2014, 2016; Cowie, Elliffe, & Davison, 2013; Davison, Cowie, & Elliffe, 2013) could be extended to describe local response rates in the extinction probe trials. The stimulus-control model assumes that the decision to respond depends on the discriminated availability of reinforcers for a response in a given stimulus context (Cowie & Davison, 2016; Davison & Nevin, 1999). Under time-based contingencies such as those arranged in this experiment, time since the illumination of the keylights at the beginning of a trial (henceforth referred to as trial onset) is the stimulus context. The keylight illumination at trial onset functions as a marker event signaling the start of a period of time during which target responses will be reinforced. In the extended parts of probe trials, time elapsed since trial onset signals the likely start of a subsequent trial, because in baseline, new trials typically begin at this time. Hence, behavior in the extended part of a probe trial comes under control by contingencies similar to those in a baseline trial.

Modeling performance from a stimulus-control approach to understanding changes in behavior across time since a marker event (here, the keylight illumination at trial onset) assumes that the discriminated availability of reinforcement differs from the obtained availability, and that this difference results from two sources of error. The first source of error relates to discriminating the response that produced a reinforcer (the response–reinforcer relation), which will occasionally cause reinforcers obtained for one response to be attributed to the other response (see Davison & Jenkins, 1985). This attribution of a reinforcer to a response that did not produce it, termed misallocation, may be modeled by assuming that some proportion of the reinforcers obtained for one response at a given time is incorrectly discriminated to have been obtained from the other response:

$$R'_{T,t} = (1 - m)\,R_{T,t} + m\,R_{A,t}, \qquad R'_{A,t} = (1 - m)\,R_{A,t} + m\,R_{T,t} \qquad (1)$$

where $R_{T,t}$ and $R_{A,t}$ are the reinforcers obtained for target and alternative responses in time bin t, and primes denote the discriminated (misallocated) values.

In Equation 1, the misallocation parameter m may take a value between 0 (no misallocation; perfect discrimination of the response–reinforcer relation) and .5 (total misallocation; no discrimination of the response–reinforcer relation). Subscript t indicates time elapsed since the marker event; although elapsed time is a continuously changing stimulus, for convenience it can be divided into bins. In this experiment, we used 1-s bins.
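The misallocation step can be written as R′_T,t = (1 − m)R_T,t + m·R_A,t and R′_A,t = (1 − m)R_A,t + m·R_T,t, where T and A denote target and alternative; this is our rendering of the verbal description of Equation 1. A minimal NumPy sketch (the function name is hypothetical) applies it bin by bin:

```python
import numpy as np


def misallocate(r_target, r_alt, m):
    """Apply response-reinforcer misallocation bin by bin.

    A proportion m of the reinforcers obtained for each response in a bin is
    credited to the other response. m = 0 leaves the data unchanged; m = 0.5
    makes the two discriminated distributions identical.
    """
    if not 0.0 <= m <= 0.5:
        raise ValueError("m must lie between 0 and 0.5")
    r_target = np.asarray(r_target, dtype=float)
    r_alt = np.asarray(r_alt, dtype=float)
    target_prime = (1 - m) * r_target + m * r_alt
    alt_prime = (1 - m) * r_alt + m * r_target
    return target_prime, alt_prime


if __name__ == "__main__":
    # Hypothetical bins: all target reinforcers early, all alternative reinforcers late.
    print(misallocate([10, 0], [0, 10], m=0.1))
```

Misallocation moves reinforcers between responses without creating or destroying any, so the total number of reinforcers in each bin is conserved; only the discriminated split between the two keys changes.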

The second source of error relates to discriminating the stimulus in the presence of which the reinforcer was obtained (the stimulus–reinforcer relation); in this case, the stimulus is time since trial onset. This source of error would result in reinforcers obtained at one time being discriminated to have been obtained at a different time. Given that time may be both underestimated and overestimated (e.g., Gibbon, 1977), such error may be modeled by redistributing the reinforcers obtained in each time bin t across surrounding times, according to a distribution with mean t and a standard deviation proportional to the mean. The coefficient of variation (γ) relates the standard deviation to the mean and may therefore be considered an (inverse) index of discrimination: a larger coefficient of variation denotes relatively greater error in discriminating the stimulus–reinforcer relation, and hence a relatively greater effect of reinforcers obtained at one time on the discriminated availability of reinforcers at surrounding times. The approach assumes that response rates at a given time are a function of the sum of the reinforcers discriminated to have been obtained for that response, at that time, following misallocation and redistribution. Hence, for response rates in baseline trials, the stimulus-control approach predicts:

$$B_{i,t} = A_i \sum_{n} R'_{i,n}\,\frac{1}{\gamma n\sqrt{2\pi}}\exp\!\left[-\frac{(t - n)^2}{2(\gamma n)^2}\right] \qquad (2)$$

where $B_{i,t}$ is the predicted rate of response i in time bin t.

Here, the subscript i denotes the response (in this experiment, target or alternative), and the subscript t denotes the time since trial onset. The subscript n denotes the time bin in which reinforcers were obtained (effectively, also time since trial onset). $R'_{i,n}$ is the number of reinforcers (R) obtained for response i, after misallocation, in the nth time bin. The parameter γ is the coefficient of variation, which gives a measure of the spread of estimates of elapsed time, and hence of the precision of temporal discrimination. The parameter $A_i$ is a multiplier, which scales reinforcer rates to response rates (see Davison et al., 2013). Thus, in Equation 2, reinforcers obtained for response i in time bin n are redistributed across time bins longer and shorter than n according to a normal distribution with mean n and standard deviation equal to γ × n. The redistributed reinforcers falling in each time bin t are summed across all bins n and multiplied by the constant $A_i$ to give the predicted rate of responding in that time bin.
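The redistribution described for Equation 2 (a normal spread with mean n and standard deviation γ × n, summed over n and scaled by A_i) can be sketched in Python. Two details are our assumptions, not the authors' fitting code: bins are indexed n = 1, ..., N in seconds, and the normal density is evaluated at each target time t rather than integrated over the bin. The function names are hypothetical.

```python
import numpy as np


def redistribute(r_prime, gamma, t_bins=None):
    """Spread reinforcers obtained in bins n = 1..N over times t, then sum over n.

    Each bin's reinforcers are spread with a normal density of mean n and SD
    gamma * n (scalar timing), evaluated at each t.
    """
    if gamma <= 0:
        raise ValueError("gamma must be positive")
    r_prime = np.asarray(r_prime, dtype=float)
    n = np.arange(1, r_prime.size + 1, dtype=float)      # source bins 1..N
    t = n if t_bins is None else np.asarray(t_bins, dtype=float)
    sd = gamma * n                                        # SD grows with elapsed time
    z = (t[:, None] - n[None, :]) / sd[None, :]           # shape (len(t), N)
    density = np.exp(-0.5 * z ** 2) / (sd[None, :] * np.sqrt(2.0 * np.pi))
    return density @ r_prime                              # sum over source bins n


def predicted_rate(r_prime, gamma, A, t_bins=None):
    """Equation 2 as we read it: A_i times the summed, redistributed reinforcers."""
    return A * redistribute(r_prime, gamma, t_bins)


if __name__ == "__main__":
    r = np.zeros(50)
    r[9] = 1.0  # one hypothetical (misallocated) reinforcer in bin n = 10
    print(predicted_rate(r, gamma=0.2, A=3.0, t_bins=[8, 10, 12]))
```

Because the standard deviation is proportional to n, reinforcers obtained late in a trial are smeared more widely than early ones, which is one way the model captures the flatter, less precise response gradients expected at longer elapsed times.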

In probe trials, at times later than 50 s, response rates would be controlled by the contingencies associated with the beginning of a trial, to the degree that (1) the animal discriminates that sufficient time has elapsed for the current trial to end and a new one to start, and (2) the current stimulus context is similar to the stimulus context usually associated with baseline trials. This was modeled by transposing misallocated reinforcers obtained at times in a baseline trial to times greater than 50 s in a probe trial, then redistributing those reinforcers across surrounding times:

Equation 3 is similar to Equation 2 in that it predicts response rate on the basis of the redistribution of reinforcers across surrounding times. Equation 3 differs from Equation 2 because it describes the discriminated contingencies at times later than 50 s in a probe trial. Hence, whereas Equation 2 reflects the redistribution of reinforcers across times similar to the one at which they were obtained, Equation 3 effectively transposes reinforcers obtained in earlier time bins ($R'_{i,\,n-50-D}$) to times after 50 s, then redistributes these reinforcers across surrounding times later than 50 s. The parameter D represents the time taken to discriminate that a duration greater than the average trial has elapsed since the last-observed keylight illumination. The parameter G represents the extent to which the contingencies from time bin $n - 50 - D$ in a baseline trial generalize to the current stimulus context, $n + D$ s. Hence, Equation 3 predicts that when time elapsed since the most recent trial onset is discriminated to be longer than the typical trial duration (in time bin $n + 50 + D$), a new trial is discriminated to have started, and responding comes under some degree of control by the reinforcers that are usually obtained at earlier times in a trial.

The parameter D, the time taken to discriminate that the time elapsed since trial onset is greater than the duration of a standard trial, thus represents the point at which a new trial is discriminated to have begun during probe trials. The value of D is affected by discrimination of elapsed time. Hence, D would be smaller in signaled probes than in unsignaled probes, because the keylight color change at 50 s acts as a time marker, signaling the end of a standard trial duration. The parameter G scales the effect of discriminated reinforcers in these later time bins, so that their effect on the response rate is larger when the context appears more similar to that in a standard trial. The parameter G would be expected to be smaller in signaled probes than in unsignaled ones, because the keylight color change added in signaled probes is never present at the beginning of a baseline trial. Furthermore, reinforcers for target responding are obtained in closer proximity to a specific time marker, the illumination of the keylights at the beginning of a trial, than are reinforcers for alternative behavior. Hence, G should also be smaller for contingencies associated with target responding than for contingencies associated with alternative responding.

To model the discriminated contingencies in probe trials, obtained reinforcers may be redistributed to times greater than 50 s, because error in discriminating elapsed time means time since trial onset will sometimes be discriminated as being longer than 50 s. However, “transposed” reinforcers cannot be distributed to times earlier than 50 + D s (see Cowie, Davison, & Elliffe, 2014, for a discussion of control by multiple stimuli).

In Equation 3, the coefficient of variation (γ) may be the same for redistributing both obtained and transposed reinforcers, implying that temporal discrimination is as accurate before as it is after 50 s (i.e., γ1 = γ2). Alternatively, to reflect weaker control in the absence of the exteroceptive marker event usually associated with the start of a trial (the illumination of the keylights), a separate coefficient of variation may be used for transposed reinforcers (i.e., γ1 ≠ γ2). We tested both versions of the model, and term the former version the single-γ version, and the latter the dual-γ version.

We fitted both the single-γ and dual-γ versions of Equation 3 to data from unsignaled and signaled probes. Because probes were presented relatively infrequently, individual-subject response rates were calculated from relatively few trials, and fits to such data would yield inaccurate parameter estimates. Hence, the two versions of Equation 3 were fitted to response rates averaged across all six pigeons. The obtained response rates, and those predicted by each version of Equation 3, are plotted in Fig. 4 as a function of time since trial onset. Table 1 shows the parameter values obtained from these fits. To provide a quantitative index of the ability of the single-γ and dual-γ models to account for variance in the data, Akaike’s information criterion (AIC; Akaike, 1973) was calculated (see Table 1). A difference of at least 6 in AIC values for two models indicates strong support for the model with the smaller AIC value, and a difference of at least 10 indicates almost no support for the model with the larger AIC value (Akaike, 1973; Burnham & Anderson, 1992; Navakatikyan, 2007; Navakatikyan, Murrell, Bensemann, Davison, & Elliffe, 2013).
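The article does not state which form of AIC was computed. The Python sketch below assumes the common least-squares form, AIC = N ln(RSS/N) + 2k (Burnham & Anderson), with k free parameters, and pairs it with the proportion of variance accounted for and the AIC-difference thresholds quoted above. The function names are hypothetical, and the label returned below a difference of 6 is our placeholder, not a threshold from the text.

```python
import math


def rss(observed, predicted):
    """Residual sum of squares between obtained and predicted response rates."""
    if len(observed) != len(predicted):
        raise ValueError("observed and predicted must have the same length")
    return sum((o - p) ** 2 for o, p in zip(observed, predicted))


def aic_least_squares(observed, predicted, k):
    """AIC for a least-squares fit with normal errors: N ln(RSS / N) + 2k."""
    n = len(observed)
    residual = rss(observed, predicted)
    if residual <= 0:
        raise ValueError("RSS must be positive")
    return n * math.log(residual / n) + 2 * k


def variance_accounted_for(observed, predicted):
    """Proportion of variance accounted for: 1 - RSS / total sum of squares."""
    mean = sum(observed) / len(observed)
    ss_total = sum((o - mean) ** 2 for o in observed)
    return 1 - rss(observed, predicted) / ss_total


def support(delta_aic):
    """Interpret an AIC difference (larger minus smaller) with the thresholds in the text."""
    if delta_aic >= 10:
        return "almost no support for the model with the larger AIC"
    if delta_aic >= 6:
        return "strong support for the model with the smaller AIC"
    return "below the thresholds cited in the text"


if __name__ == "__main__":
    obs, pred = [1, 2, 3, 4], [1, 2, 3, 5]  # hypothetical rates
    print(aic_least_squares(obs, pred, k=2), variance_accounted_for(obs, pred))
    print(support(12))
```

The dual-γ version has one more free parameter (a second coefficient of variation) than the single-γ version, so under this formula it pays an extra penalty of 2 AIC units; an AIC advantage greater than 10 therefore reflects a reduction in residual error well beyond that penalty.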

Table 1 shows that both the single-γ and dual-γ versions of the model generally provided an adequate description of response rates on each alternative, accounting for between 78% and 81% of data variance. Figure 4 shows that each version of the model predicted the cyclical pattern of change in response rate observed at later times in both the signaled and unsignaled probes. Indeed, parameters obtained from both models (see Table 1) showed similar patterns. Misallocation (m) was generally small, as might be expected in a procedure arranging two different location-based responses. The coefficient of variation (γ) was larger, denoting weaker discrimination, in unsignaled than in signaled probes; such a difference may relate to the keylight color change at 50 s in signaled probes, which functions as an additional (albeit different) time marker. Indeed, the parameter denoting the time taken to discriminate that a new trial may have started (D) was larger in unsignaled than in signaled probes. The stimulus–reinforcer relation generalized to a greater extent for reinforcers produced by alternative responses (G_A) than for reinforcers produced by target responses (G_T), as might be expected given the absence of the keylight color change associated with the beginning of a trial, 60 s after the beginning of a probe trial (50-s trial plus 10-s ITI). The ability of both versions of the model to account for the data suggests that resurgence may be controlled by the similarity between the current stimulus context (here, elapsed time) and previous contexts in which reinforcers were obtained for responding (i.e., times within a standard trial). That is, this model suggests that resurgence is a result of generalization across stimulus contexts.
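
The figure of 78% to 81% refers to the percentage of variance accounted for. A common definition, assumed here, is 100 × (1 − SS_residual / SS_total); the exact computation used (e.g., whether target and alternative rates were pooled) is not given in this section, and the function name is hypothetical.

```python
def percent_vac(observed, predicted):
    """Percentage of variance in `observed` accounted for by `predicted`.

    Assumed definition: 100 * (1 - SS_residual / SS_total).
    Requires observed values that are not all identical (SS_total > 0).
    """
    mean = sum(observed) / len(observed)
    ss_tot = sum((o - mean) ** 2 for o in observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    return 100.0 * (1.0 - ss_res / ss_tot)

print(percent_vac([2.0, 4.0, 6.0, 8.0], [2.0, 4.0, 6.0, 9.0]))
```

Note that this index can be negative when predictions fit worse than the mean of the data, and, unlike AIC, it does not penalize extra parameters.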

Although both the single-γ and dual-γ models provided an adequate description of the data, comparison of the AIC values (see Table 1) shows that the dual-γ model provided a better description of response rates than did the single-γ model. Indeed, the difference in AIC values was greater than 10 units for fits to data from both types of probe trial, suggesting almost no support for the single-γ version of the model. However, a somewhat counterintuitive result from fits of the dual-γ model is that the coefficient of variation for redistribution across times later than t + 50 + D (γ2) was always smaller than that for times earlier than 50 s (γ1), implying better temporal discrimination at these later times.

The stimulus-control approach adequately accounted for the general pattern of response rates in this experiment. Nevertheless, some shortcomings of the model must be noted. Both versions of the model overestimated the rate of target responding at the beginning of a trial, and the rate of alternative responding at its maxima. In unsignaled probes, both versions of the model systematically underestimated the rate of target responding at the end of a trial. Furthermore, although both versions of the model predict the abrupt increase in responding that occurs at the offset of the stimulus in signaled probes, they predict a more gradual decline in alternative response rates than was obtained. Thus, the models described here offer only a starting point for a quantitative account of how generalization of reinforcement across time determines local-level resurgence.

Discussion

This study revealed within-session resurgence of operant responding in a modified free-operant psychophysical procedure (FOPP). Resurgence procedures typically arrange changes in the target and alternative contingencies followed by a test for resurgence in extinction, across three successive phases that each span multiple sessions (e.g., Lieving & Lattal, 2003; Podlesnik & Kelley, 2014; Winterbauer & Bouton, 2010). Our study assessed resurgence under novel conditions by reversing reinforcement and extinction contingencies between target and alternative responses within each baseline trial. We observed resurgence of target responding when alternative responding was exposed to a prolonged period of extinction. Additionally, resurgence tended to be greater, and/or its onset earlier, when extinction of the alternative response was signaled by a discrete 5-s stimulus change (signal). These findings were described well by a quantitative model that assumes that resurgence occurs because of generalization of the response–reinforcer and stimulus–reinforcer relations from previously experienced contexts. Overall, our results generally replicate those of typical resurgence procedures, but on a relatively brief time scale, and under conditions where the stimulus context associated with target and alternative reinforcers is the time elapsed since a marker event. Therefore, our findings introduce a novel method for assessing the processes underlying resurgence under more dynamic conditions than have been examined previously. The ability of the model to account for the data underscores the importance of stimulus control in resurgence (see also Winterbauer & Bouton, 2010).

The finding of within-session resurgence is not without precedent. Lieving and Lattal (2003) found resurgence within experimental sessions when reinforcement for the alternative response was reduced to a leaner schedule (see also Winterbauer & Bouton, 2012). Three pigeons were initially trained to keypeck as the target response on a VI 30-s schedule in Phase 1. In Phase 2, treadle pressing was reinforced as the alternative response on a VI 30-s schedule while target keypecking was extinguished. In Phase 3, the schedule for the alternative treadle pressing was thinned to VI 360 s. The authors found low levels of resurgence of target keypecking during the longer interreinforcer intervals of the VI 360-s schedule, which effectively served as local periods of extinction (see Lattal & St. Peter Pipkin, 2009). Together with our study, these findings suggest that resurgence is a function of time without alternative reinforcement, even on relatively brief time scales.

The larger and/or earlier resurgence in signaled than in unsignaled probes suggests an important role of discrimination in resurgence. Specifically, a discrete 5-s stimulus change signaling extinction of the alternative response tended to produce a greater and/or earlier increase in target responding than did the absence of a signal (see Fig. 3). These findings are consistent with other studies arranging more typical resurgence procedures that have changed the color or presence of stimuli in the transition from Phase 2 to Phase 3 (Kincaid et al., 2015; Podlesnik & Kelley, 2014; but see Sweeney & Shahan, 2015). Overall, these and previous findings suggest that discriminating the initiation of the extinction contingency on the alternative response can influence the size and pattern of resurgence.

The role of discrimination in resurgence corresponds with Winterbauer and Bouton’s (2010) interpretation of resurgence, in which the transition from the reinforcement of alternative responding in Phase 2 to extinction in Phase 3 constitutes a change in stimulus context (see also Bouton et al., 2012). Thus, Winterbauer and Bouton describe resurgence as an instance of ABC context renewal, which results from greater generalization of responding from contexts associated with reinforcement than from contexts associated with extinction. They suggest that the training contingencies in Phase 1 constitute Context A and that reinforcers for the alternative response establish a different stimulus context in Phase 2 (Context B). Extinction of alternative responding in Phase 3 arranges yet another set of contingencies, constituting a novel Context C.

Similarly, Todd, Winterbauer, and Bouton (2012) arranged an ABC renewal preparation in which responding was initially established in Context A, defined by one set of global environmental features (e.g., scent, textures, visual patterns). They extinguished responding in a second, novel Context B. Finally, transitioning to yet another novel Context C while extinction remained in effect produced an increase in responding (i.e., renewal). Relevant to our study, Todd et al. found greater increases in responding in Context C when Context B was more distinct from Context C. Although ABC renewal tends to be smaller than ABA renewal (e.g., Bouton et al., 2011; but see Todd, 2013), renewal occurred to a level comparable to that of returning the subjects to the original training Context A (i.e., ABA renewal) when Context B was not explicitly made more distinct. These data are in accord with the results of the present study, in which resurgence tended to be more evident following more discriminable transitions from reinforcement to extinction of alternative responding (i.e., signaled vs. unsignaled probes). Consistent with Bouton and colleagues’ ideas, the discrete 5-s signal accompanying the transition to extinction of alternative responding in the present experiment may have enhanced the contextual change, thereby increasing resurgence of target responding.

During extended probe trials, alternative responding generally occurred at a greater rate than target responding. This pattern may be due to the particular requirements of the FOPP. Specifically, in baseline FOPP trials, time since trial onset necessarily served as a discriminative stimulus signaling the active key (see also Bizo & White, 1994, 1995; Cowie, Bizo, & White, 2016; Cowie, Elliffe, & Davison, 2013). Because target responding was reinforced during the initial interval, and alternative responding was reinforced at a later interval, the greater levels of alternative than target responding during extended probe trials may have been due to the subjects generalizing responding along the dimension of time. Extended probe trials introduced an even longer interval, more similar to that wherein alternative responding was reinforced. Indeed, the proposed model yielded larger generalization (G) parameters for alternative than for target responses. Additionally, alternative responding during later parts of probe trials may have been enhanced by the fact that estimates of time become increasingly inaccurate over longer periods of time (e.g., Gibbon, 1977). If such a generalization process occurred along the dimension of time, it necessarily co-occurred with extinction processes on the more recently reinforced alternative response.
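
The point that estimates of time become less accurate over longer intervals can be illustrated with a sketch under the scalar-timing assumption (standard deviation proportional to elapsed time, with coefficient of variation γ). The discriminability index d' below, which divides the separation of two times by the root-mean-square of their standard deviations, and the value γ = 0.2 are illustrative choices, not quantities estimated in this study.

```python
import math

def temporal_dprime(t1, t2, gamma=0.2):
    """Discriminability of two elapsed times (s) under scalar timing (hypothetical sketch).

    Each time is represented as Normal(t, gamma * t); d' uses the root-mean-square sd.
    """
    sd1, sd2 = gamma * t1, gamma * t2
    return abs(t2 - t1) / math.sqrt((sd1 ** 2 + sd2 ** 2) / 2.0)

early = temporal_dprime(20.0, 30.0)   # two times within a standard trial
late = temporal_dprime(120.0, 130.0)  # the same 10-s gap late in a probe trial
print(round(early, 2), round(late, 2))
```

Here the same 10-s separation yields a d' of about 1.96 early in a trial but only about 0.40 late in a probe trial, so a fixed separation between two times becomes harder to discriminate as elapsed time grows.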

The cyclical pattern of target and alternative responding observed after 50 s in signaled probes suggests that the signal may have served to “restart” the trials, similar to the function of keylight illumination at the end of the 10-s ITI. However, a similar pattern was also observed for some pigeons in unsignaled probes, albeit to a lesser extent, suggesting that the cyclical pattern is not solely due to the discrete 5-s signal. Because events in the FOPP occur with regularity across time, time elapsed since the most recent keylight illumination may also signal the end of one trial, and the probable start of another. Thus, a new trial may be discriminated to have begun even in the absence of keylight illumination. In this case, responding will come under control of the discriminated contingencies. Hence, relapse of responding may have occurred because the contingencies in a standard trial generalized to later times in a probe trial. A quantitative model making this assumption (see Equation 3) provided a reasonable description of rates of responding in probe trials (see Fig. 4).
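
The account of the cyclical pattern can be sketched by mapping probe time onto the standard-trial contingencies, assuming (hypothetically) that a new trial is discriminated every 50 + D s and ignoring timing error entirely. The value D = 10 s (the ITI duration) and the function name are illustrative; a fuller treatment would combine this mapping with the timing variability and generalization parameters of Equation 3.

```python
def discriminated_contingency(t, delay=10.0, trial_len=50.0, switch=25.0):
    """Key a subject would treat as active at probe time t (s), under hypothetical assumptions.

    Assumes a new trial is discriminated every trial_len + delay seconds
    (delay plays the role of D; 10 s is illustrative) and no timing error.
    """
    phase = t % (trial_len + delay)
    if phase < switch:
        return "target"        # first 25 s of a standard trial: left key reinforced
    if phase < trial_len:
        return "alternative"   # second 25 s: right key reinforced
    return "none"              # discriminated inter-trial interval

# A 150-s probe trial (50-s trial extended by 100 s), sampled every 5 s.
pattern = [discriminated_contingency(t) for t in range(0, 150, 5)]
print(pattern)
```

Even this error-free mapping produces alternating runs of "target" and "alternative" after 50 s, the qualitative cycle described above; adding timing variability would blur the later cycles.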

If resurgence is the result of generalization from a context experienced previously by the animal to the current context, then the speed of its onset will be influenced by the occurrence of exteroceptive stimulus changes within a probe trial. Discrete stimulus changes such as those arranged in signaled probes can function as marker events, and thus the passing of the duration of a standard trial might be more readily discriminated. Indeed, we found earlier resurgence in signaled than in unsignaled probes. The extent to which responding resurges will depend on the similarity between this marker event and the one usually associated with the start of a trial. In this experiment, trials began with the illumination of both keylights, whereas the stimulus change at 50 s in a signaled probe was a change in the color of the keys. Because this stimulus change differs from the stimulus change typically associated with the beginning of a trial (keylight illumination), we might predict less generalization of standard-trial contingencies in signaled probes than in unsignaled probes, at least for contingencies that occur in close temporal proximity to the onset of the trial. Consistent with this prediction, the generalization (G) parameter for target responding was smaller in signaled than in unsignaled probes.

A second possible explanation for the cyclic pattern of responding in probe trials could be related to findings revealing periodic response rates entrained by single fixed-interval (FI) schedules (e.g., Crystal & Baramidze, 2007; Machado & Cevik, 1998; Monteiro & Machado, 2009). For example, after establishing regular pause-peck response patterns in pigeons using discrete FI trials, Monteiro and Machado found that responding during extinction periods oscillated in the same pause-peck pattern as observed in reinforced trials. The regular shifts in the reinforcer location in the FOPP could potentially entrain a periodic alternating pattern of responding, similar to periodic response patterns entrained by periodic (i.e., FI) reinforcers. Both phenomena could be explained by either oscillating timing processes or discriminative stimulus control by elapsed time, and an empirically informed discussion of these two accounts awaits further investigation. Nevertheless, oscillating patterns of responding could themselves be the result of stimulus control by elapsed time.

A number of previous studies have demonstrated resurgence of patterns of responding, rather than of discrete responses (see Bachá-Méndez, Reid, & Mendoza-Soylovna, 2007, for a related discussion). In our experiment, the subjects learned to peck the target key first and the alternative key second within each trial. The complementary patterns of target and alternative responding during prolonged periods of extinction in probe trials could be interpreted as a resurgence of the response sequence established during baseline. This corresponds with Reed and Morgan (2006), who demonstrated resurgence when operant (reinforced) responses were defined by sequences of three lever presses. Previously reinforced sequences resurged when a later-reinforced sequence was extinguished. Additionally, Cançado and Lattal (2011) demonstrated resurgence of a temporally defined operant. In their experiment, pigeons were trained to emit a positively accelerated pattern of responding, before responses were eliminated with a differential reinforcement of other behavior (DRO) schedule. When reinforcers were removed from the DRO schedule, responding resurged in the same positively accelerated pattern. Therefore, in line with previous research, our data may be interpreted in terms of a resurgence of entire response patterns rather than individual responses.

The stimulus-control model proposed in this article highlights the importance of discriminating contingencies across time and location, suggesting that context plays a key role in resurgence of target behavior. Specifically, it predicts that target behavior resurges when the current context is similar to one in the organism’s learning history in which target behavior was sometimes followed by a reinforcer. Such an approach is generally consistent with a contextual model of relapse (Bouton, 2004; Bouton et al., 2012), which has been described conceptually, but not quantitatively (see Craig & Shahan, 2016; McConnell & Miller, 2014; Podlesnik & Kelley, 2014; Podlesnik & Sanabria, 2011, for related critiques). This model represents a first attempt to quantify the extent to which stimulus-control processes (discrimination and generalization) affect resurgence.

This model emphasizes the role of stimulus control differently from other quantitative models of relapse. For example, Shahan and Sweeney’s (2011) model based on behavioral momentum theory suggests discriminating the removal of alternative reinforcers removes a source of disruption of target responding, thereby producing resurgence. Similarly, differences in the onset of resurgence in signaled and unsignaled probes in the present experiment suggest the importance of discriminating extinction of the alternative response. However, simply discriminating the onset of extinction of alternative responding cannot account for the cyclical pattern of responding observed in probe trials. Indeed, this pattern of responding is better explained in terms of control by the discriminated context—what events accompany the onset of extinction and what they say about the likely availability of reinforcers for different responses across time.

In conclusion, this study suggests that the processes underlying resurgence can operate on relatively brief time scales. It replicates findings of greater resurgence following a more discriminable transition between Phases 2 and 3. Furthermore, the tendency for greater and/or earlier resurgence following signaled than unsignaled transitions suggests that discrimination of extinction of alternative responding influences the size and onset of resurgence. Data were well described by a quantitative model (see Equation 3) that assumes that resurgence is the result of generalization between contingencies in a previously experienced context and the current context. More broadly, these findings suggest that context may include both exteroceptive and interoceptive (i.e., temporal) stimuli. Finally, these findings introduce a novel method for assessing the processes underlying resurgence under dynamic conditions.

References

Akaike, H. (1973). Information theory and an extension of the maximum likelihood principle. In B. N. Petrov & F. Csaki (Eds.), Second international symposium on information theory (pp. 267–281). Budapest: Academiai Kiado.

Bachá-Méndez, G., Reid, A. K., & Mendoza-Soylovna, A. (2007). Resurgence of integrated behavioral units. Journal of the Experimental Analysis of Behavior, 87, 5–24.

Bizo, L. A., & White, K. G. (1994). The behavioral theory of timing: Reinforcer rate determines pacemaker rate. Journal of the Experimental Analysis of Behavior, 61, 19–33.

Bizo, L. A., & White, K. G. (1995). Biasing the pacemaker in the behavioral theory of timing. Journal of the Experimental Analysis of Behavior, 64, 225–235.

Bouton, M. E. (2004). Context and behavioral processes in extinction. Learning & Memory, 11, 485–494.

Bouton, M. E., & King, D. A. (1983). Contextual control of the extinction of conditioned fear: Tests for the associative value of the context. Journal of Experimental Psychology: Animal Behavior Processes, 9, 248–265.

Bouton, M. E., Todd, T. P., Vurbic, D., & Winterbauer, N. E. (2011). Renewal after the extinction of free operant behavior. Learning & Behavior, 39, 57–67.

Bouton, M. E., Winterbauer, N. E., & Todd, T. P. (2012). Relapse processes after the extinction of instrumental learning: Renewal, resurgence, and reacquisition. Behavioural Processes, 90, 130–141.

Brown, P. L., & Jenkins, H. M. (1968). Auto-shaping of the pigeon key-peck. Journal of the Experimental Analysis of Behavior, 11, 1–8.

Burnham, K. P., & Anderson, D. R. (1992). Data-based selection of an appropriate biological model: The key to modern data analysis. In Wildlife 2001: Populations (pp. 16–30). Netherlands: Springer.

Cançado, C. R., & Lattal, K. A. (2011). Resurgence of temporal patterns of responding. Journal of the Experimental Analysis of Behavior, 95, 271–287.

Chiang, T. J., Al-Ruwaitea, A. S. A., Ho, M. Y., Bradshaw, C. M., & Szabadi, E. (1998). The influence of switching on the psychometric function in the free-operant psychophysical procedure. Behavioural Processes, 44, 197–209.

Cowie, S., Bizo, L. A., & White, K. G. (2016). Reinforcers affect timing in the free-operant psychophysical procedure. Learning and Motivation, 53, 24–35.

Cowie, S., & Davison, M. (2016). Control by reinforcers across time and space: A review of recent choice research. Journal of the Experimental Analysis of Behavior, 105, 246–269.

Cowie, S., Davison, M., Blumhardt, L., & Elliffe, D. (2016). Does overall reinforcer rate affect discrimination of time-based contingencies? Journal of the Experimental Analysis of Behavior, 105(3), 393–408. doi:10.1002/jeab.204

Cowie, S., Davison, M., & Elliffe, D. (2014). A model for food and stimulus changes that signal time‐based contingency changes. Journal of the Experimental Analysis of Behavior, 102, 289–310.

Cowie, S., Davison, M., & Elliffe, D. (2016). A model for discriminating reinforcers in time and space. Behavioural Processes, 127, 62–73.

Cowie, S., Elliffe, D., & Davison, M. (2013). Concurrent schedules: Discriminating reinforcer‐ratio reversals at a fixed time after the previous reinforcer. Journal of the Experimental Analysis of Behavior, 100, 117–134.

Craig, A. R., & Shahan, T. A. (2016). Behavioral momentum theory fails to account for the effects of reinforcement rate on resurgence. Journal of the Experimental Analysis of Behavior, 105, 375–392.

Crystal, J. D., & Baramidze, G. T. (2007). Endogenous oscillations in short-interval timing. Behavioural Processes, 74, 152–158.

da Silva, S. P., Cançado, C. R., & Lattal, K. A. (2014). Resurgence in Siamese fighting fish, Betta splendens. Behavioural Processes, 103, 315–319.

da Silva, S. P., & Lattal, K. A. (2006). Contextual determinants of temporal control: Behavioral contrast in a free-operant psychophysical procedure. Behavioural Processes, 71, 157–163.

Davison, M., Cowie, S., & Elliffe, D. (2013). On the joint control of preference by time and reinforcer-ratio variation. Behavioural Processes, 95, 100–112.

Davison, M., & Jenkins, P. E. (1985). Stimulus discriminability, contingency discriminability, and schedule performance. Animal Learning & Behavior, 13, 77–84.

Davison, M., & Nevin, J. A. (1999). Stimuli, reinforcers, and behavior: An integration. Journal of the Experimental Analysis of Behavior, 71, 439–482.

Epstein, R. (1985). Extinction-induced resurgence: Preliminary investigations and possible applications. The Psychological Record, 35, 143–153.

Fleshler, M., & Hoffman, H. S. (1962). A progression for generating variable-interval schedules. Journal of the Experimental Analysis of Behavior, 5, 529–530.

Gibbon, J. (1977). Scalar expectancy theory and Weber’s law in animal timing. Psychological Review, 84, 279–325.

Higgins, S. T., Heil, S. H., & Sigmon, S. C. (2013). Voucher-based contingency management in the treatment of substance use disorders. In G. J. Madden (Ed.), Handbook of behavior analysis: Volume 2. Washington, DC: American Psychological Association.

Kincaid, S. L., Lattal, K. A., & Spence, J. (2015). Super-resurgence: ABA renewal increases resurgence. Behavioural Processes, 115, 70–73.

Kuroda, T., Cançado, C. R., & Podlesnik, C. A. (2016). Resistance to change and resurgence in humans engaging in a computer task. Behavioural Processes, 125, 1–5.

Lattal, K. A., & St. Peter Pipkin, C. (2009). Resurgence of previously reinforced responding: Research and application. The Behavior Analyst Today, 10, 254–265.

Leitenberg, H., Rawson, R. A., & Mulick, J. A. (1975). Extinction and reinforcement of alternative behavior. Journal of Comparative and Physiological Psychology, 88, 640–652.

Lieving, G. A., & Lattal, K. A. (2003). Recency, repeatability, and reinforcer retrenchment: An experimental analysis of resurgence. Journal of the Experimental Analysis of Behavior, 80, 217–233.

Mace, F. C., & Critchfield, T. S. (2010). Translational research in behavior analysis: Historical traditions and imperative for the future. Journal of the Experimental Analysis of Behavior, 93, 293–312.

Machado, A., & Cevik, M. (1998). Acquisition and extinction under periodic reinforcement. Behavioural Processes, 44, 237–262.

McConnell, B. L., & Miller, R. R. (2014). Associative accounts of recovery-from-extinction effects. Learning and Motivation, 46, 1–15.

Monteiro, T., & Machado, A. (2009). Oscillations following periodic reinforcement. Behavioural Processes, 81, 170–188.

Navakatikyan, M. A. (2007). A model for residence time in concurrent variable interval performance. Journal of the Experimental Analysis of Behavior, 87, 121–141.

Navakatikyan, M. A., Murrell, P., Bensemann, J., Davison, M., & Elliffe, D. (2013). Law of effect models and choice between many alternatives. Journal of the Experimental Analysis of Behavior, 100, 222–256.

Nevin, J. A., & Wacker, D. P. (2013). Response strength and persistence. In G. J. Madden (Ed.), Handbook of behavior analysis: Volume 2. Washington, DC: American Psychological Association.

Petscher, E. S., Rey, C., & Bailey, J. S. (2009). A review of empirical support for differential reinforcement of alternative behaviour. Research in Developmental Disabilities, 30, 409–425.

Podlesnik, C. A., & DeLeon, I. G. (2015). Behavioral momentum theory: Understanding persistence and improving treatment. In Autism service delivery (pp. 327–351). New York: Springer.

Podlesnik, C. A., Jimenez-Gomez, C., & Shahan, T. A. (2006). Resurgence of alcohol seeking produced by discontinuing non-drug reinforcement as an animal model of drug relapse. Behavioural Pharmacology, 17, 369–374.

Podlesnik, C. A., & Kelley, M. E. (2014). Resurgence: Response competition, stimulus control, and reinforcer control. Journal of the Experimental Analysis of Behavior, 102, 231–240.

Podlesnik, C. A., & Sanabria, F. (2011). Repeated extinction and reversal learning of an approach response supports an arousal-mediated learning model. Behavioural Processes, 87, 125–134.

Podlesnik, C. A., & Shahan, T. A. (2009). Behavioral momentum and relapse of extinguished operant responding. Learning & Behavior, 37, 357–364.

Podlesnik, C. A., & Shahan, T. A. (2010). Extinction, relapse, and behavioral momentum. Behavioural Processes, 84, 400–411.

Pritchard, D., Hoerger, M., Mace, F. C., Penney, H., & Harris, B. (2014). Clinical translation of animal models of treatment relapse. Journal of the Experimental Analysis of Behavior, 101, 442–449.

Reed, P., & Morgan, T. A. (2006). Resurgence of response sequences during extinction in rats shows a primacy effect. Journal of the Experimental Analysis of Behavior, 86, 307–315.

Shahan, T. A., & Sweeney, M. M. (2011). A model of resurgence based on behavioral momentum theory. Journal of the Experimental Analysis of Behavior, 95, 91–108.

Spradlin, J. E., Fixsen, D. L., & Girardeau, F. L. (1969). Reinstatement of an operant response by the delivery of reinforcement during extinction. Journal of Experimental Child Psychology, 7, 96–100.

Stubbs, D. A. (1980). Temporal discrimination and a free-operant psychophysical procedure. Journal of the Experimental Analysis of Behavior, 33, 167–185.

Sweeney, M. M., & Shahan, T. A. (2015). Renewal, resurgence, and alternative reinforcement context. Behavioural Processes, 116, 43–49.

Todd, T. P. (2013). Mechanisms of renewal after the extinction of instrumental behavior. Journal of Experimental Psychology: Animal Behavior Processes, 39, 193–207.

Todd, T. P., Winterbauer, N. E., & Bouton, M. E. (2012). Effects of the amount of acquisition and contextual generalization on the renewal of instrumental behavior after extinction. Learning & Behavior, 40, 145–157.

Volkert, V. M., Lerman, D. C., Call, N. A., & Trosclair-Lasserre, N. (2009). An evaluation of resurgence during treatment with functional communication training. Journal of Applied Behavior Analysis, 42, 145–160.

Wacker, D. P., Harding, J. W., Berg, W. K., Lee, J. F., Schieltz, K. M., Padilla, Y. C., Nevin, J. A., & Shahan, T. A. (2011). An evaluation of persistence of treatment effects during long-term treatment of destructive behavior. Journal of the Experimental Analysis of Behavior, 96, 261–282.

Winterbauer, N. E., & Bouton, M. E. (2010). Mechanisms of resurgence of an extinguished instrumental behavior. Journal of Experimental Psychology: Animal Behavior Processes, 36, 343–353.

Winterbauer, N. E., & Bouton, M. E. (2012). Thinning the rate at which the alternative behavior is reinforced on resurgence of an extinguished instrumental response. Journal of Experimental Psychology: Animal Behavior Processes, 38, 279–291.

Acknowledgments

We thank the members of the Operant Behaviour Laboratory and the students from the PSYCH 309 class of 2014 for their help in running the experiment, and Michael Owens who looked after the pigeons. We also extend our many, infinite thanks to Michael Davison, for his germane, insightful, and terribly useful comments on an earlier draft of the manuscript. The research was conducted under Approval RT909 granted by The University of Auckland Animal Ethics Committee. Reprints may be obtained from John Bai.


Cite this article

Bai, J.Y.H., Cowie, S. & Podlesnik, C.A. Quantitative analysis of local-level resurgence. Learn Behav 45, 76–88 (2017). https://doi.org/10.3758/s13420-016-0242-1
